Enhanced Timer Management

With z/VM 4.3.0, CP timer management scalability has been improved by eliminating master processor serialization and by design changes that reduce large system effects. The performance of CP timer management has been improved for environments where a large number of requests are scheduled, particularly for short intervals, and where timer requests are frequently canceled before they become due. A z/VM system with large numbers of low-usage Linux guest users would be an example of such an environment. Master processor serialization has been eliminated by allowing timer events to be handled on any processor; serialization of timer events is now handled by the scheduler lock component of CP. Also, clock comparator settings are now tracked and managed across all processors to eliminate duplicate or unnecessary timer interruptions.
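The general idea of servicing timer requests from any processor under a single lock, with cheap cancellation, can be illustrated with a toy model. The sketch below is not CP's implementation; the class `TimerQueue`, its method names, and the use of a heap with lazy cancellation are all assumptions chosen for illustration. It shows one lock serializing the queue, any thread being allowed to dispatch due requests, and the next wakeup being computed only from requests that are still live, so that a canceled request does not cause a wakeup.

```python
import heapq
import itertools
import threading


class TimerQueue:
    """Toy timer queue: one lock, any thread may dispatch, lazy cancellation."""

    def __init__(self):
        self._lock = threading.Lock()  # hypothetical stand-in for a single serializing lock
        self._heap = []                # entries are (due_time, request_id)
        self._live = set()             # ids of requests not yet fired or canceled
        self._ids = itertools.count()

    def schedule(self, due_time):
        """Queue a request and return its id."""
        with self._lock:
            request_id = next(self._ids)
            heapq.heappush(self._heap, (due_time, request_id))
            self._live.add(request_id)
            return request_id

    def cancel(self, request_id):
        """Cancel in O(1); the stale heap entry is skipped later."""
        with self._lock:
            if request_id in self._live:
                self._live.remove(request_id)
                return True
            return False

    def pop_due(self, now):
        """Return ids of live requests due at or before `now` (callable from any thread)."""
        due = []
        with self._lock:
            while self._heap and self._heap[0][0] <= now:
                _, request_id = heapq.heappop(self._heap)
                if request_id in self._live:
                    self._live.remove(request_id)
                    due.append(request_id)
        return due

    def next_deadline(self):
        """Earliest live deadline, or None; canceled entries never set the wakeup."""
        with self._lock:
            while self._heap and self._heap[0][1] not in self._live:
                heapq.heappop(self._heap)
            return self._heap[0][0] if self._heap else None


queue = TimerQueue()
first = queue.schedule(due_time=10)
second = queue.schedule(due_time=5)
queue.cancel(second)            # canceled before it became due
print(queue.next_deadline())    # the canceled request does not set the wakeup
print(queue.pop_due(now=10))    # only the live request is dispatched
```

In this toy model the single lock is the only point of serialization, which mirrors the trade-off described above: no one thread is special, but every caller contends for the same lock.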

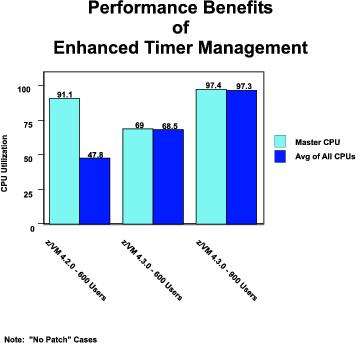

This section summarizes the results of a performance evaluation done to verify that the master processor bottleneck has been relieved. This is accomplished by comparing master processor utilization against the average processor utilization when handling timer events; master processor utilization should be closely aligned with average processor utilization.

Methodology: All performance measurements were done on a 2064-109 system in an LPAR with 7 dedicated processors. The LPAR was configured with 2GB of central storage and 10GB of expanded storage. One RVA DASD behind a 3990-6 controller provided the paging and spool space required to support the test environment.

The software configuration was varied to enable the comparisons desired for this evaluation. A z/VM 4.2.0 64-bit system provided the baseline measurements for the comparison with a z/VM 4.3.0 64-bit system. In addition, two variations of the SuSE Linux 2.4.7 31-bit distribution were used: one with the On-Demand Timer Patch applied and one without it.

The On-Demand Timer Patch removes the Linux built-in timer request that occurs every 10 milliseconds to look for work to be done. The timer requests cause the Linux guests to appear consistently busy to z/VM when many of them may actually be idle, requiring CP to perform more processing to handle timer requests. This becomes very costly when there are large numbers of Linux guest users and limits the number of Linux guests that z/VM can manage concurrently. The patch removes the automatic timer request from the Linux kernel source. For idle Linux guests with the timer patch, timer events occur much less often.
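To make the cost concrete, the following back-of-the-envelope sketch estimates the aggregate timer load from unpatched idle guests. It assumes each guest issues exactly one timer request per 10 millisecond tick and nothing else; the function name is illustrative and the result is an estimate, not a measured value.

```python
# Assumption: one timer request per guest per tick, with a 10 ms tick.
TICK_INTERVAL_MS = 10


def timer_requests_per_second(guests, tick_interval_ms=TICK_INTERVAL_MS):
    """Aggregate timer requests per second generated by `guests` idle, unpatched guests."""
    ticks_per_guest = 1000 // tick_interval_ms  # 100 ticks per second at 10 ms
    return guests * ticks_per_guest


for guests in (600, 900):
    print(guests, "guests ->", timer_requests_per_second(guests), "timer requests/s")
```

Under these assumptions, 600 idle guests generate 60000 timer requests per second and 900 guests generate 90000, even though none of them has real work to do.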

An internal tool was used to Initial Program Load (IPL) Linux guest users. The Linux users were allowed to reach a steady idle state (indicated by the absence of paging activity to/from DASD) before taking measurements. Hardware instrumentation and CP Monitor data were collected for each scenario.

Workloads of idle Linux guests were measured to gather data about their consumption of CPU time across processors, with a specific focus on the master processor. A baseline measurement workload of 600 idle Linux guest users was selected. This enabled measurement data to be gathered on a z/VM 4.2.0 system while it was still stable. At 615 Linux guest users without the timer patch on z/VM 4.2.0, the master processor utilization reached 100%, and the system became unstable. A comparison workload of 900 idle Linux guest users was chosen based on the amount of central storage and expanded storage allocated in the hardware configuration. This is the maximum number of users that could be supported without significant paging activity out to DASD.

The following scenarios were attempted. All but #3 were achieved. As discussed above, in attempting that scenario, master processor utilization reached 100% at approximately 615 Linux images.

1. z/VM 4.2.0 using SuSE Linux 2.4.7 w/o Timer Patch with load of 600 users

2. z/VM 4.3.0 using SuSE Linux 2.4.7 w/o Timer Patch with load of 600 users

3. z/VM 4.2.0 using SuSE Linux 2.4.7 w/o Timer Patch with load of 900 users

4. z/VM 4.3.0 using SuSE Linux 2.4.7 w/o Timer Patch with load of 900 users

5. z/VM 4.2.0 using SuSE Linux 2.4.7 w/ Timer Patch with load of 600 users

6. z/VM 4.3.0 using SuSE Linux 2.4.7 w/ Timer Patch with load of 600 users

7. z/VM 4.2.0 using SuSE Linux 2.4.7 w/ Timer Patch with load of 900 users

8. z/VM 4.3.0 using SuSE Linux 2.4.7 w/ Timer Patch with load of 900 users

Results: Figure 1 shows CPU utilization comparisons between z/VM 4.2.0 and z/VM 4.3.0, comparing the master processor with the average processor utilization across all processors.

Figure 1. Performance Benefits of Enhanced Timer Management

These comparisons all use the SuSE Linux 2.4.7 distribution without the On-Demand Timer Patch applied. The dramatic improvement illustrated here shows that the master processor is no longer a bottleneck for handling timer requests. Prior to z/VM 4.3.0, timer requests were serialized on the master processor; with z/VM 4.3.0, multiprocessor serialization is implemented using the CP scheduler lock. In addition, the 900-user case shows that z/VM 4.3.0 can support significantly more users with this new implementation.

Table 1 and Table 2 show total processor utilization and master processor utilization across the scenarios. The first table shows the comparisons for scenarios where the Linux images did not include the On-Demand Timer Patch. The second table includes scenarios where the Linux images did include the timer patch. With the timer patch, there is a dramatic drop in overall CPU utilization due to the major reduction in Linux guest timer requests to be handled by the system.

Throughput data were not available with this experiment, so the number of Linux guests IPLed for a given scenario was used to normalize the data reductions and calculations for comparison across scenarios.

The tables include data concerning the average number of pages used by a user and an indication of the time users were waiting on the scheduler lock. "Spin Time/Request (v)", from the SYSTEM_SUMMARY2_BY_TIME VMPRF report, is the average time spent waiting on all CP locks in the system. For this particular workload, the scheduler lock is the main contributor.

Table 1. Enhanced Timer Management: No Timer Patch

| z/VM Version | 4.2.0 | 4.3.0 | 4.3.0 |
|---|---|---|---|
| Linux Users | 600 | 600 | 900 |
| Run ID | 2600NP | 3600NP1 | 3900NP1 |
| Total Util/Proc (v) | 47.8 | 68.5 | 97.3 |
| Emul Util/Proc (v) | 13.3 | 13.5 | 24.7 |
| CP Util/Proc (v) | 34.5 | 55.0 | 72.6 |
| Master Total Util/Proc (v) | 91.1 | 69.0 | 97.4 |
| Master Emul Util/Proc (v) | 24.6 | 13.5 | 24.5 |
| Master CP Util/Proc (v) | 66.50 | 55.50 | 72.90 |
| Total Util/User | 0.080 | 0.119 | 0.110 |
| Emul Util/User | 0.021 | 0.021 | 0.028 |
| CP Util/User | 0.059 | 0.097 | 0.082 |
| Xstor Page Rate (v) | 33816 | 35131 | 75380 |
| DASD Page Rate (v) | 0 | 4 | 2 |
| Spin Lock Rate (v) | 58387 | 82978 | 110271 |
| Spin Time/Request (v) | 8.697 | 13.752 | 13.086 |
| Pct Spin Time (v) | 7.254 | 16.30 | 20.61 |
| Resident Pages/User (v) | 789 | 788 | 519 |
| Xstor Pages/User (v) | 4225 | 4224 | 2812 |
| DASD Pages/User (v) | 8337 | 8404 | 9848 |
| Total Pages/User (v) | 13351 | 13416 | 13179 |
| Ratios | | | |
| Total Util/Proc (v) | 1.000 | 1.433 | 2.036 |
| Emul Util/Proc (v) | 1.000 | 1.015 | 1.857 |
| CP Util/Proc (v) | 1.000 | 1.594 | 2.104 |
| Master Total Util/Proc (v) | 1.000 | 0.757 | 1.069 |
| Master Emul Util/Proc (v) | 1.000 | 0.549 | 0.996 |
| Master CP Util/Proc (v) | 1.000 | 0.835 | 1.096 |
| Total Util/User | 1.000 | 1.488 | 1.375 |
| Emul Util/User | 1.000 | 1.000 | 1.333 |
| CP Util/User | 1.000 | 1.644 | 1.390 |
| Xstor Page Rate (v) | 1.000 | 1.039 | 2.229 |
| DASD Page Rate (v) | - | - | - |
| Spin Lock Rate (v) | 1.000 | 1.421 | 1.889 |
| Spin Time/Request (v) | 1.000 | 1.581 | 1.505 |
| Pct Spin Time (v) | 1.000 | 2.247 | 2.841 |
| Resident Pages/User (v) | 1.000 | 0.999 | 0.658 |
| Xstor Pages/User (v) | 1.000 | 1.000 | 0.666 |
| DASD Pages/User (v) | 1.000 | 1.008 | 1.181 |
| Total Pages/User (v) | 1.000 | 1.005 | 0.987 |

Note: 2064-109; LPAR with 7 dedicated processors; 2GB central storage; 10GB expanded storage; SuSE Linux 2.4.7 31-bit kernel
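The evaluation criterion stated earlier, that master processor utilization should track average processor utilization, can be checked directly from the Table 1 values. The short sketch below takes "Total Util/Proc (v)" as the average across processors and "Master Total Util/Proc (v)" as the master processor figure; the dictionary layout and function name are illustrative.

```python
# (Total Util/Proc, Master Total Util/Proc) copied from Table 1.
table1 = {
    "4.2.0, 600 users": (47.8, 91.1),
    "4.3.0, 600 users": (68.5, 69.0),
    "4.3.0, 900 users": (97.3, 97.4),
}


def master_to_average(avg_util, master_util):
    """Ratio of master processor utilization to the per-processor average."""
    return master_util / avg_util


for run, (avg, master) in table1.items():
    print(f"{run}: master/average = {master_to_average(avg, master):.2f}")
```

A ratio near 1.00 means the master processor is loaded like any other processor. The z/VM 4.2.0 run comes out near 1.91, while both z/VM 4.3.0 runs come out at about 1.00 to 1.01.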

Table 2. Enhanced Timer Management: Timer Patch

| z/VM Version | 4.2.0 | 4.3.0 | 4.2.0 | 4.3.0 |
|---|---|---|---|---|
| Linux Users | 600 | 600 | 900 | 900 |
| Run ID | 2600P | 3600P1 | 2900P | 3900P1 |
| Total Util/Proc (v) | 4.5 | 4.6 | 15.5 | 13.8 |
| Emul Util/Proc (v) | 2.0 | 2.0 | 4.3 | 4.0 |
| CP Util/Proc (v) | 2.5 | 2.6 | 11.2 | 9.8 |
| Master Total Util/Proc (v) | 16.2 | 11.1 | 30.3 | 16.6 |
| Master Emul Util/Proc (v) | 7.0 | 4.8 | 8.3 | 4.7 |
| Master CP Util/Proc (v) | 9.20 | 6.30 | 22.00 | 11.90 |
| Total Util/User | 0.007 | 0.008 | 0.017 | 0.015 |
| Emul Util/User | 0.003 | 0.003 | 0.004 | 0.004 |
| CP Util/User | 0.004 | 0.004 | 0.013 | 0.011 |
| Xstor Page Rate (v) | 30052 | 30731 | 101k | 98322 |
| DASD Page Rate (v) | 0 | 0 | 38 | 3 |
| Spin Lock Rate (v) | 74 | 76 | 1385 | 905 |
| Spin Time/Request (v) | 2.328 | 2.436 | 4.463 | 2.990 |
| Pct Spin Time (v) | 0.002 | 0.003 | 0.088 | 0.039 |
| Resident Pages/User (v) | 787 | 788 | 522 | 522 |
| Xstor Pages/User (v) | 4223 | 4222 | 2820 | 2805 |
| DASD Pages/User (v) | 8344 | 8344 | 10573 | 9920 |
| Total Pages/User (v) | 13354 | 13354 | 13915 | 13247 |
| Ratios | | | | |
| Total Util/Proc (v) | 1.000 | 1.022 | 1.000 | 0.890 |
| Emul Util/Proc (v) | 1.000 | 1.000 | 1.000 | 0.930 |
| CP Util/Proc (v) | 1.000 | 1.040 | 1.000 | 0.875 |
| Master Total Util/Proc (v) | 1.000 | 0.685 | 1.000 | 0.548 |
| Master Emul Util/Proc (v) | 1.000 | 0.686 | 1.000 | 0.566 |
| Master CP Util/Proc (v) | 1.000 | 0.685 | 1.000 | 0.541 |
| Total Util/User | 1.000 | 1.143 | 1.000 | 0.882 |
| Emul Util/User | 1.000 | 1.000 | 1.000 | 1.000 |
| CP Util/User | 1.000 | 1.000 | 1.000 | 0.846 |
| Xstor Page Rate (v) | 1.000 | 1.023 | 1.000 | 0.974 |
| DASD Page Rate (v) | - | - | 1.000 | 0.079 |
| Spin Lock Rate (v) | 1.000 | 1.027 | 1.000 | 0.653 |
| Spin Time/Request (v) | 1.000 | 1.046 | 1.000 | 0.670 |
| Pct Spin Time (v) | 1.000 | 1.500 | 1.000 | 0.443 |
| Resident Pages/User (v) | 1.000 | 1.001 | 1.000 | 1.000 |
| Xstor Pages/User (v) | 1.000 | 1.000 | 1.000 | 0.995 |
| DASD Pages/User (v) | 1.000 | 1.000 | 1.000 | 0.938 |
| Total Pages/User (v) | 1.000 | 1.000 | 1.000 | 0.952 |

Note: 2064-109; LPAR with 7 dedicated processors; 2GB central storage; 10GB expanded storage; SuSE Linux 2.4.7 31-bit kernel
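The ratio rows in Table 2 appear to be each z/VM 4.3.0 value divided by the z/VM 4.2.0 value at the same user count. The sketch below checks that reading against the "Master Total Util/Proc (v)" row; the baseline pairing is inferred from the reported numbers rather than stated in the text, and the names are illustrative.

```python
# Master Total Util/Proc (v) from Table 2, keyed by (users, z/VM version).
master_total = {
    (600, "4.2.0"): 16.2,
    (600, "4.3.0"): 11.1,
    (900, "4.2.0"): 30.3,
    (900, "4.3.0"): 16.6,
}


def ratio_to_baseline(users):
    """z/VM 4.3.0 value divided by the z/VM 4.2.0 value at the same user count."""
    return round(master_total[(users, "4.3.0")] / master_total[(users, "4.2.0")], 3)


for users in (600, 900):
    print(users, "users:", ratio_to_baseline(users))
```

The computed values, 0.685 and 0.548, agree with the ratios reported in the table.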

Summary: z/VM 4.3.0 relieves the master processor bottleneck caused when large numbers of idle Linux guests are run on z/VM. As illustrated in Figure 1, the master processor utilization is far less on z/VM 4.3.0 and matches closely with the average utilization across all processors. As a result, z/VM 4.3.0 is able to support more Linux guests using the same hardware configuration.

While z/VM 4.3.0 provides relief for the master processor, the data for the case of 900 Linux images without the timer patch reflect the next constraint that limits the number of Linux guests that can be managed concurrently: the scheduler lock. The data for this scenario (shown in Table 1) indicate that time spent waiting for the scheduler lock grows as the number of Linux images increases: on z/VM 4.3.0, Pct Spin Time rises from 16.30 at 600 users to 20.61 at 900 users.
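The spin lock figures in Table 1 can be related to one another with a small calculation. The sketch below assumes that "Spin Time/Request (v)" is in microseconds and that "Pct Spin Time (v)" is averaged over the 7 processors; neither assumption is stated in the text, but together they reproduce the reported percentages. The function and variable names are illustrative.

```python
PROCESSORS = 7  # dedicated processors in the measured LPAR


def pct_spin_time(spin_lock_rate, spin_time_us, processors=PROCESSORS):
    """Percent of each processor's time spent spinning (assumed units: per second, microseconds)."""
    spin_seconds_per_second = spin_lock_rate * spin_time_us * 1e-6
    return 100.0 * spin_seconds_per_second / processors


# (Spin Lock Rate, Spin Time/Request, reported Pct Spin Time) from Table 1.
runs = {
    "4.2.0, 600 users": (58387, 8.697, 7.254),
    "4.3.0, 600 users": (82978, 13.752, 16.30),
    "4.3.0, 900 users": (110271, 13.086, 20.61),
}

for run, (rate, spin_us, reported) in runs.items():
    print(f"{run}: computed {pct_spin_time(rate, spin_us):.2f}%, reported {reported}%")
```

Read this way, the growth in spin time at 900 users comes from the higher lock request rate rather than from a longer wait per request, which is slightly lower than in the 600-user run on z/VM 4.3.0.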

The data captured in Table 2 indicate that using the On-Demand Timer Patch with idle Linux workloads yields a large increase in the number of Linux images that z/VM can manage. However, the type of Linux workload should be considered when deciding whether to use the timer patch. The maximum benefit of the timer patch is realized in environments with large numbers of low-usage Linux guest users. The timer patch is not recommended for environments with high-usage Linux guests because these guests will do a small amount of additional work every time a switch between user mode and kernel mode occurs.

Footnotes:

1. The breakout of central storage and expanded storage for this evaluation was arbitrary. Similar results are expected with other breakouts.