Queued I/O Assist

Introduction

The announcements for the z990 processor family and z/VM Version 4 Release 4.0 discussed performance improvements for V=V guests conducting networking activity via real networking devices that use the Queued Direct I/O (QDIO) facility.

The performance assist, called Queued I/O Assist, applies to the FICON Express card with FCP feature (FCP CHPID type), HiperSockets (IQD CHPID type), and OSA Express features (OSD CHPID type).

The performance improvements centered on replacing the heavyweight PCI (program-controlled interruption) mechanism with a lighter adapter interrupt (AI) mechanism. The z990 was also equipped with AI delivery assists that let IBM remove AI delivery overhead.

There are three main components to the AI support:

- For the OSA Express and FICON Express features on z990 GA1 and later, IBM replaced the heavyweight PCI interrupt with a lightweight adapter interrupt. This requires changes to the OSA Express and FICON Express millicode so as to emit AIs, and it requires corresponding changes in z/VM so as to handle the AIs.

- For HiperSockets, OSA Express, and FICON Express, on z990 GA1 and later, IBM provided a z990 millicode assist that lets the z990 tell z/VM the identity of the nonrunning guest to which a presented AI should be delivered. This spares z/VM from having to inspect its shadow copies of all guests' QDIO structures to find the guest to which the AI should be delivered. This portion of the assist is sometimes called the alerting portion of the assist.

- Also for HiperSockets, OSA Express, and FICON Express, on z990 GA1 and later, IBM provided a second z990 millicode assist that lets the z990 deliver an AI to a running guest without the guest leaving SIE. This eliminates traditional z/VM interrupt delivery overhead. This portion of the assist is sometimes called the passthrough portion of the assist.

Together these elements reduce the cost to the z/VM Control Program (CP) of delivering OSA Express, FICON Express, or HiperSockets interrupts to z/VM guests.
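The three components can be read as a decision made for each arriving AI. The Python sketch below is a toy model of that decision, not z/VM or millicode logic: `Guest`, `deliver_ai`, and the `alerting` and `passthrough` flags are hypothetical names, and `scanned` merely stands in for the cost of CP searching its QDIO shadow structures when the machine does not identify the target guest. (The real switches, ATTACH NOQIOASSIST and SET QIOASSIST OFF, are described under Discussion of Results.)

```python
from dataclasses import dataclass, field


@dataclass
class Guest:
    """Toy stand-in for a guest virtual machine (hypothetical, not a CP structure)."""
    name: str
    queues: set                # QDIO queue ids this guest owns
    in_sie: bool = False       # True if the guest is running on a real CPU right now
    pending: list = field(default_factory=list)  # interrupts stacked by CP


def deliver_ai(queue_id, guests, *, alerting=True, passthrough=True):
    """Model delivery of one adapter interrupt; return (path, guests_scanned).

    guests_scanned approximates CP's search cost. With alerting, the machine
    names the target guest, so CP inspects no shadow structures.
    """
    owner = next((g for g in guests if queue_id in g.queues), None)

    # Passthrough portion: a running guest gets the AI without leaving SIE,
    # so CP is not involved at all.
    if passthrough and owner is not None and owner.in_sie:
        return "passthrough", 0

    # Alerting portion: the machine tells CP which guest the AI is for.
    # Without it, CP walks the guests' QDIO shadows until it finds a match.
    if alerting:
        scanned = 0
    elif owner is None:
        scanned = len(guests)
    else:
        scanned = next(i + 1 for i, g in enumerate(guests) if g is owner)

    if owner is None:
        return "no owner", scanned
    owner.pending.append(f"AI for queue {queue_id}")  # CP stacks the interrupt
    return "stacked", scanned
```

In this model a running owner with passthrough enabled costs CP nothing; a nonrunning owner costs CP only the interrupt stacking when alerting is enabled, plus a linear search over guests when it is not.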

Finally, a word about nomenclature. The formal IBM terms for these enhancements are adapter interrupts and Queued I/O Assist. However, in informal IBM publications and dialogues, you might sometimes see adapter interrupts called thin interrupts. You might also see Queued I/O Assist called Adapter Interruption Passthrough. In this report, we will use the formal terms.

Measurement Environment

To measure the benefit of Queued I/O Assist, we set up two Linux guests on a single z/VM image (z/VM 4.3.0 for the base case, z/VM 4.4.0 for the comparison case), running in an LPAR of a z990. We connected the two Linux images to one another either via HiperSockets (MFS 64K) or via a single OSA Express Gigabit Ethernet adapter (three device numbers to one Linux guest and another three device numbers on the same CHPID to the other guest). We ran networking loads across these connections using an IBM-internal version of Application Workload Modeler (AWM). The z/VM system carried no load other than these two Linux guests.

Details of the measured hardware and software configuration were:

| Component | Level |
|---|---|
| Hardware | An LPAR of a 2084-C24, MCL driver 52G, with EC J12558 at driver level 109, 2 dedicated engines, 2 GB central storage, 4 GB XSTORE. |
| z/VM | For the base case, we used z/VM 4.3.0 with all corrective service applied. For the comparison case, we used z/VM 4.4.0 with the GA RSU applied, plus PTF UM31036. |
| Linux | We used an IBM-internal Linux development driver, kernel level 2.4.20, with the June 2003 stream from IBM DeveloperWorks applied. |

It is important that customers wishing to use Queued I/O Assist in production, or customers wishing to conduct measurements of Queued I/O Assist benefits, apply all available corrective service for the z990, for z/VM, and for Linux for zSeries. See our z990 Queued I/O Assist page for an explanation of the levels required.

Abstracts of the workloads we ran:

| Workload | Explanation |
|---|---|
| Connect-Request-Response (CRR) | This workload simulates HTTP. The client connects to the server and sends a 64-byte request. The server responds with an 8192-byte response and closes the connection. The client and server continue exchanging data in this way until the end of the measurement period. |
| Request-Response (RR) | This workload simulates Telnet. The client connects to the server and sends a 200-byte request. The server responds with a 1000-byte response, leaving the connection up. The client and server continue exchanging data on this connection until the end of the measurement period. |
| Streams Get (STRG) | This workload simulates FTP. The client connects to the server and sends a 20-byte request. The server responds with a 20 MB response and closes the connection. The client and server continue exchanging data in this way until the end of the measurement period. |
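To make the transaction shapes concrete, here is a minimal Python sketch of the CRR pattern over a loopback TCP connection. It is not AWM and measures nothing; the function names (`recv_exact`, `crr_server`, `crr_client`) are hypothetical, and only the 64-byte request and 8192-byte response sizes come from the table above.

```python
import socket
import threading

REQ_SIZE = 64      # CRR request size from the workload table
RESP_SIZE = 8192   # CRR response size from the workload table


def recv_exact(sock, n):
    """Read exactly n bytes, or raise if the peer closes first."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed before the full request arrived")
        buf += chunk
    return bytes(buf)


def crr_server(listener, count):
    """Serve `count` CRR transactions: accept, read request, respond, close."""
    for _ in range(count):
        conn, _addr = listener.accept()
        with conn:                       # leaving the block closes the connection
            recv_exact(conn, REQ_SIZE)
            conn.sendall(b"r" * RESP_SIZE)


def crr_client(addr, count):
    """Run `count` CRR transactions, each on a fresh connection; return bytes received."""
    received = 0
    for _ in range(count):
        with socket.create_connection(addr) as s:
            s.sendall(b"q" * REQ_SIZE)
            while chunk := s.recv(4096):   # read until the server closes
                received += len(chunk)
    return received


listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))          # port 0: let the OS pick a free port
listener.listen()
server = threading.Thread(target=crr_server, args=(listener, 5))
server.start()
total = crr_client(listener.getsockname(), 5)
server.join()
listener.close()
print(total)  # 40960 (5 transactions x 8192 bytes)
```

An RR variant would open one connection and loop on the 200-byte request and 1000-byte response without closing it; an STRG variant would send a 20-byte request and read a 20 MB response before the server closes.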

For each workload, we ran several different MTU sizes and several different numbers of concurrent connections. Details are available in the charts and tables found below.

For each workload, we assessed performance using the following metrics:

| Metric | Meaning |
|---|---|
| tx/sec | Transactions performed per wall clock second. |
| vCPU/tx | Virtual CPU time (also called emulation time) consumed by the Linux guests, per transaction. (VIRTCPU response field from CP QUERY TIME.) |
| cCPU/tx | CP (Control Program) CPU time consumed per transaction. (TOTCPU minus VIRTCPU from CP QUERY TIME.) |
| tCPU/tx | Total CPU time consumed per transaction. (TOTCPU from CP QUERY TIME.) |
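The metric definitions reduce to simple arithmetic on CPU-time readings. The sketch below assumes (our assumption; the report does not say how readings were collected) that the TOTCPU and VIRTCPU values are the differences between CP QUERY TIME readings taken at the start and end of the measurement interval, already summed over the participating guests. `QueryTimeDelta` and `per_tx_metrics` are hypothetical names, and the inputs in the usage lines are invented numbers.

```python
from dataclasses import dataclass


@dataclass
class QueryTimeDelta:
    """CPU seconds consumed during the measurement interval (hypothetical helper).

    Assumed to be end-minus-start CP QUERY TIME values, summed over the guests.
    """
    totcpu: float   # TOTCPU: virtual plus CP time charged to the guests
    virtcpu: float  # VIRTCPU: virtual (emulation) time only


def per_tx_metrics(t: QueryTimeDelta, transactions: int, elapsed_s: float) -> dict:
    """Return tx/sec plus vCPU/tx, cCPU/tx, and tCPU/tx in milliseconds."""
    ms_per_tx = 1000.0 / transactions   # turns total seconds into ms per transaction
    return {
        "tx/sec": transactions / elapsed_s,
        "vCPU/tx": t.virtcpu * ms_per_tx,
        "cCPU/tx": (t.totcpu - t.virtcpu) * ms_per_tx,  # CP time = TOTCPU - VIRTCPU
        "tCPU/tx": t.totcpu * ms_per_tx,
    }


# Invented inputs: 10,000 transactions in 5 s, 4.0 s TOTCPU, 2.5 s VIRTCPU.
m = per_tx_metrics(QueryTimeDelta(totcpu=4.0, virtcpu=2.5), transactions=10_000, elapsed_s=5.0)
print({k: round(v, 3) for k, v in m.items()})
# {'tx/sec': 2000.0, 'vCPU/tx': 0.25, 'cCPU/tx': 0.15, 'tCPU/tx': 0.4}
```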

Summary of Results

For the OSA Express Gigabit Ethernet and HiperSockets adapters, Table 1 summarizes the general changes in the performance metrics, comparing Linux running on z/VM 4.3.0 to Linux running on z/VM 4.4.0 with Queued I/O Assist enabled.

The changes cited in Table 1 represent average results observed across all MTU sizes and all numbers of concurrent connections. They are for planning and estimation purposes only. Precise results for specific measured configurations of interest are available in later sections of this report.

Table 1. Summary of results

| Device | Workload | tx/sec | vCPU/tx | cCPU/tx | tCPU/tx |
|---|---|---|---|---|---|
| OSA Express Gigabit Ethernet | CRR | +9% | -1% | -28% | -12% |
| | RR | +13% | -11% | -29% | -18% |
| | STRG | +2% | -1% | -24% | -8% |
| HiperSockets | CRR | +4% | -1% | -6% | -4% |
| | RR | +8% | -2% | -7% | -5% |
| | STRG | +6% | flat | -5% | -2% |

Note: Linux on z/VM 4.4.0 with Queued I/O Assist, compared to Linux on z/VM 4.3.0. 2084-C24, LPAR with 2 dedicated CPUs, 2 GB real, 4 GB XSTORE, LPAR dedicated to these runs. Linux 2.4.20, 31-bit, IBM-internal development driver. 256 MB Linux virtual uniprocessor machine, no swap partition, Linux DASD is dedicated 3390 volume or RAMAC equivalent. IBM-internal version of Application Workload Modeler (AWM).
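The report does not spell out how the Table 1 averages were formed. As a rough consistency check, a plain arithmetic mean of the %delta values from Tables 2 and 3 (OSA Express Gigabit Ethernet, CRR, both MTU sizes, all four connection counts), rounded to whole percent, reproduces the CRR row of Table 1. Treat this as a sketch of one plausible method, not as the authors' documented procedure.

```python
from statistics import mean

# %delta columns from Tables 2 (MTU 1492) and 3 (MTU 8992), OSA Express
# Gigabit Ethernet, CRR, for 1, 10, 20, and 50 concurrent connections each.
pct_delta = {
    "tx/sec":  [5.790, 11.630, 9.560, 6.457, 9.341, 10.877, 9.066, 5.821],
    "vCPU/tx": [0.410, -2.538, -1.758, -0.624, -0.454, -2.481, -1.665, -1.077],
    "cCPU/tx": [-21.101, -30.492, -30.229, -27.476, -23.630, -30.409, -30.943, -27.007],
    "tCPU/tx": [-11.916, -15.548, -12.511, -7.211, -13.557, -14.848, -12.167, -7.087],
}

# Mean of the per-configuration percentage changes, rounded to whole percent.
summary = {metric: round(mean(values)) for metric, values in pct_delta.items()}
print(summary)  # {'tx/sec': 9, 'vCPU/tx': -1, 'cCPU/tx': -28, 'tCPU/tx': -12}
```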

Detailed Results

The tables below present experimental results for each configuration.
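In each table, delta is the comparison case (z/VM 4.4.0) minus the base case (z/VM 4.3.0), and %delta is that delta as a percentage of the base case. A minimal sketch (the `compare` helper is a hypothetical name), checked against the one-connection tx/sec row of Table 2:

```python
def compare(base: float, new: float) -> tuple:
    """Return (delta, %delta) of a comparison case against a base case."""
    delta = new - base
    return delta, 100.0 * delta / base


d, pct = compare(491.463, 519.919)   # Table 2, 1 connection, tx/sec
print(f"{d:.3f} {pct:.3f}")           # 28.456 5.790
```

The published deltas appear to be computed from unrounded measurements, so recomputing them from the three-decimal values shown can differ in the last digit (for example, Table 2's one-connection cCPU/tx delta is shown as -0.088, while 0.330 - 0.419 gives -0.089).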

Table 2. OSA Express Gigabit Ethernet, CRR, MTU 1492

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 491.463 | 0.312 | 0.419 | 0.731 |
| | z/VM 4.4.0 | 519.919 | 0.313 | 0.330 | 0.644 |
| | delta | 28.456 | 0.001 | -0.088 | -0.087 |
| | %delta | 5.790 | 0.410 | -21.101 | -11.916 |
| 10 | z/VM 4.3.0 | 1425.943 | 0.241 | 0.210 | 0.451 |
| | z/VM 4.4.0 | 1591.787 | 0.235 | 0.146 | 0.381 |
| | delta | 165.844 | -0.006 | -0.064 | -0.070 |
| | %delta | 11.630 | -2.538 | -30.492 | -15.548 |
| 20 | z/VM 4.3.0 | 2149.476 | 0.206 | 0.125 | 0.331 |
| | z/VM 4.4.0 | 2354.960 | 0.203 | 0.087 | 0.290 |
| | delta | 205.484 | -0.004 | -0.038 | -0.041 |
| | %delta | 9.560 | -1.758 | -30.229 | -12.511 |
| 50 | z/VM 4.3.0 | 3400.647 | 0.181 | 0.059 | 0.239 |
| | z/VM 4.4.0 | 3620.217 | 0.179 | 0.043 | 0.222 |
| | delta | 219.570 | -0.001 | -0.016 | -0.017 |
| | %delta | 6.457 | -0.624 | -27.476 | -7.211 |

Note: Configuration as described in summary table.

Table 3. OSA Express Gigabit Ethernet, CRR, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 762.926 | 0.211 | 0.274 | 0.485 |
| | z/VM 4.4.0 | 834.195 | 0.210 | 0.209 | 0.419 |
| | delta | 71.268 | -0.001 | -0.065 | -0.066 |
| | %delta | 9.341 | -0.454 | -23.630 | -13.557 |
| 10 | z/VM 4.3.0 | 2211.706 | 0.169 | 0.134 | 0.303 |
| | z/VM 4.4.0 | 2452.266 | 0.165 | 0.093 | 0.258 |
| | delta | 240.560 | -0.004 | -0.041 | -0.045 |
| | %delta | 10.877 | -2.481 | -30.409 | -14.848 |
| 20 | z/VM 4.3.0 | 3299.491 | 0.148 | 0.083 | 0.231 |
| | z/VM 4.4.0 | 3598.624 | 0.146 | 0.057 | 0.203 |
| | delta | 299.132 | -0.002 | -0.026 | -0.028 |
| | %delta | 9.066 | -1.665 | -30.943 | -12.167 |
| 50 | z/VM 4.3.0 | 5306.315 | 0.132 | 0.040 | 0.172 |
| | z/VM 4.4.0 | 5615.214 | 0.130 | 0.029 | 0.159 |
| | delta | 308.899 | -0.001 | -0.011 | -0.012 |
| | %delta | 5.821 | -1.077 | -27.007 | -7.087 |

Note: Configuration as described in summary table.

Table 4. OSA Express Gigabit Ethernet, RR, MTU 1492

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 1991.802 | 0.070 | 0.062 | 0.132 |
| | z/VM 4.4.0 | 2240.960 | 0.053 | 0.054 | 0.106 |
| | delta | 249.158 | -0.017 | -0.008 | -0.026 |
| | %delta | 12.509 | -24.720 | -13.515 | -19.453 |
| 10 | z/VM 4.3.0 | 6385.912 | 0.049 | 0.052 | 0.101 |
| | z/VM 4.4.0 | 6987.427 | 0.044 | 0.035 | 0.079 |
| | delta | 601.516 | -0.005 | -0.017 | -0.022 |
| | %delta | 9.419 | -9.632 | -32.953 | -21.634 |
| 20 | z/VM 4.3.0 | 9465.164 | 0.042 | 0.034 | 0.076 |
| | z/VM 4.4.0 | 10852.571 | 0.040 | 0.023 | 0.062 |
| | delta | 1387.407 | -0.002 | -0.011 | -0.014 |
| | %delta | 14.658 | -5.577 | -33.333 | -18.049 |
| 50 | z/VM 4.3.0 | 16334.023 | 0.036 | 0.018 | 0.053 |
| | z/VM 4.4.0 | 18183.309 | 0.034 | 0.011 | 0.046 |
| | delta | 1849.287 | -0.001 | -0.006 | -0.008 |
| | %delta | 11.322 | -3.975 | -34.814 | -14.149 |

Note: Configuration as described in summary table.

Table 5. OSA Express Gigabit Ethernet, RR, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 1992.247 | 0.072 | 0.062 | 0.133 |
| | z/VM 4.4.0 | 2240.208 | 0.054 | 0.054 | 0.108 |
| | delta | 247.960 | -0.018 | -0.008 | -0.026 |
| | %delta | 12.446 | -24.592 | -13.337 | -19.371 |
| 10 | z/VM 4.3.0 | 6380.193 | 0.050 | 0.052 | 0.102 |
| | z/VM 4.4.0 | 7422.396 | 0.046 | 0.035 | 0.081 |
| | delta | 1042.203 | -0.004 | -0.017 | -0.021 |
| | %delta | 16.335 | -7.449 | -32.723 | -20.390 |
| 20 | z/VM 4.3.0 | 9457.137 | 0.042 | 0.034 | 0.077 |
| | z/VM 4.4.0 | 10838.895 | 0.040 | 0.023 | 0.063 |
| | delta | 1381.758 | -0.002 | -0.011 | -0.014 |
| | %delta | 14.611 | -5.000 | -33.357 | -17.682 |
| 50 | z/VM 4.3.0 | 16308.725 | 0.036 | 0.018 | 0.054 |
| | z/VM 4.4.0 | 18151.125 | 0.035 | 0.011 | 0.046 |
| | delta | 1842.400 | -0.001 | -0.006 | -0.008 |
| | %delta | 11.297 | -3.728 | -35.248 | -14.018 |

Note: Configuration as described in summary table.

Table 6. OSA Express Gigabit Ethernet, STRG, MTU 1492

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 1.558 | 195.181 | 164.223 | 359.404 |
| | z/VM 4.4.0 | 1.735 | 189.244 | 114.029 | 303.273 |
| | delta | 0.176 | -5.937 | -50.195 | -56.132 |
| | %delta | 11.312 | -3.042 | -30.565 | -15.618 |
| 10 | z/VM 4.3.0 | 3.610 | 165.068 | 49.109 | 214.177 |
| | z/VM 4.4.0 | 3.761 | 163.832 | 38.026 | 201.859 |
| | delta | 0.151 | -1.236 | -11.083 | -12.319 |
| | %delta | 4.178 | -0.749 | -22.567 | -5.752 |
| 20 | z/VM 4.3.0 | 3.630 | 172.477 | 46.864 | 219.341 |
| | z/VM 4.4.0 | 3.799 | 173.370 | 35.609 | 208.978 |
| | delta | 0.169 | 0.892 | -11.255 | -10.363 |
| | %delta | 4.652 | 0.517 | -24.016 | -4.724 |
| 50 | z/VM 4.3.0 | 3.632 | 180.960 | 45.671 | 226.631 |
| | z/VM 4.4.0 | 3.834 | 177.739 | 34.526 | 212.265 |
| | delta | 0.202 | -3.221 | -11.145 | -14.366 |
| | %delta | 5.552 | -1.780 | -24.403 | -6.339 |

Note: Configuration as described in summary table.

Table 7. OSA Express Gigabit Ethernet, STRG, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 2.524 | 100.455 | 101.155 | 201.611 |
| | z/VM 4.4.0 | 2.642 | 100.726 | 85.489 | 186.215 |
| | delta | 0.118 | 0.270 | -15.666 | -15.396 |
| | %delta | 4.674 | 0.269 | -15.487 | -7.637 |
| 10 | z/VM 4.3.0 | 5.951 | 79.625 | 39.403 | 119.028 |
| | z/VM 4.4.0 | 5.532 | 76.036 | 27.340 | 103.376 |
| | delta | -0.419 | -3.589 | -12.062 | -15.652 |
| | %delta | -7.046 | -4.508 | -30.613 | -13.150 |
| 20 | z/VM 4.3.0 | 6.380 | 76.610 | 28.833 | 105.444 |
| | z/VM 4.4.0 | 6.035 | 77.168 | 21.765 | 98.932 |
| | delta | -0.345 | 0.557 | -7.068 | -6.511 |
| | %delta | -5.406 | 0.727 | -24.515 | -6.175 |
| 50 | z/VM 4.3.0 | 6.594 | 77.005 | 22.353 | 99.358 |
| | z/VM 4.4.0 | 6.304 | 75.353 | 17.950 | 93.303 |
| | delta | -0.290 | -1.653 | -4.403 | -6.055 |
| | %delta | -4.402 | -2.146 | -19.696 | -6.094 |

Note: Configuration as described in summary table.

Table 8. HiperSockets, CRR, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 3284.418 | 0.188 | 0.228 | 0.416 |
| | z/VM 4.4.0 | 3520.699 | 0.186 | 0.215 | 0.402 |
| | delta | 236.281 | -0.002 | -0.012 | -0.014 |
| | %delta | 7.194 | -0.931 | -5.467 | -3.414 |
| 10 | z/VM 4.3.0 | 4537.796 | 0.172 | 0.189 | 0.361 |
| | z/VM 4.4.0 | 4721.925 | 0.170 | 0.180 | 0.350 |
| | delta | 184.129 | -0.002 | -0.009 | -0.012 |
| | %delta | 4.058 | -1.355 | -4.901 | -3.210 |
| 20 | z/VM 4.3.0 | 4574.064 | 0.172 | 0.186 | 0.358 |
| | z/VM 4.4.0 | 4717.406 | 0.169 | 0.174 | 0.343 |
| | delta | 143.342 | -0.004 | -0.012 | -0.015 |
| | %delta | 3.134 | -2.108 | -6.326 | -4.300 |
| 50 | z/VM 4.3.0 | 4526.479 | 0.173 | 0.186 | 0.360 |
| | z/VM 4.4.0 | 4493.266 | 0.169 | 0.173 | 0.342 |
| | delta | -33.213 | -0.004 | -0.014 | -0.017 |
| | %delta | -0.734 | -2.147 | -7.283 | -4.810 |

Note: Configuration as described in summary table.

Table 9. HiperSockets, CRR, MTU 16384

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 3306.553 | 0.188 | 0.228 | 0.417 |
| | z/VM 4.4.0 | 3511.288 | 0.187 | 0.215 | 0.402 |
| | delta | 204.736 | -0.002 | -0.013 | -0.015 |
| | %delta | 6.192 | -0.953 | -5.908 | -3.668 |
| 10 | z/VM 4.3.0 | 4514.058 | 0.173 | 0.189 | 0.361 |
| | z/VM 4.4.0 | 4629.770 | 0.172 | 0.180 | 0.352 |
| | delta | 115.712 | -0.001 | -0.008 | -0.009 |
| | %delta | 2.563 | -0.663 | -4.352 | -2.589 |
| 20 | z/VM 4.3.0 | 4547.080 | 0.172 | 0.186 | 0.358 |
| | z/VM 4.4.0 | 4691.305 | 0.169 | 0.174 | 0.344 |
| | delta | 144.225 | -0.003 | -0.012 | -0.015 |
| | %delta | 3.172 | -1.782 | -6.298 | -4.128 |
| 50 | z/VM 4.3.0 | 4489.159 | 0.173 | 0.186 | 0.359 |
| | z/VM 4.4.0 | 4534.121 | 0.170 | 0.173 | 0.343 |
| | delta | 44.962 | -0.004 | -0.013 | -0.016 |
| | %delta | 1.002 | -2.052 | -6.767 | -4.495 |

Note: Configuration as described in summary table.

Table 10. HiperSockets, CRR, MTU 32768

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 3284.260 | 0.190 | 0.228 | 0.418 |
| | z/VM 4.4.0 | 3521.537 | 0.187 | 0.216 | 0.403 |
| | delta | 237.276 | -0.003 | -0.012 | -0.016 |
| | %delta | 7.225 | -1.776 | -5.438 | -3.771 |
| 10 | z/VM 4.3.0 | 4455.185 | 0.173 | 0.188 | 0.362 |
| | z/VM 4.4.0 | 4649.816 | 0.172 | 0.180 | 0.352 |
| | delta | 194.631 | -0.002 | -0.008 | -0.010 |
| | %delta | 4.369 | -0.865 | -4.489 | -2.751 |
| 20 | z/VM 4.3.0 | 4501.635 | 0.173 | 0.186 | 0.359 |
| | z/VM 4.4.0 | 4486.334 | 0.169 | 0.176 | 0.345 |
| | delta | -15.301 | -0.004 | -0.010 | -0.014 |
| | %delta | -0.340 | -2.226 | -5.343 | -3.840 |
| 50 | z/VM 4.3.0 | 4476.254 | 0.174 | 0.186 | 0.360 |
| | z/VM 4.4.0 | 4591.581 | 0.170 | 0.174 | 0.344 |
| | delta | 115.327 | -0.004 | -0.012 | -0.016 |
| | %delta | 2.576 | -2.173 | -6.386 | -4.351 |

Note: Configuration as described in summary table.

Table 11. HiperSockets, CRR, MTU 57344

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 3260.237 | 0.190 | 0.225 | 0.415 |
| | z/VM 4.4.0 | 3497.269 | 0.188 | 0.217 | 0.405 |
| | delta | 237.033 | -0.001 | -0.009 | -0.010 |
| | %delta | 7.270 | -0.577 | -3.801 | -2.328 |
| 10 | z/VM 4.3.0 | 4488.407 | 0.174 | 0.188 | 0.362 |
| | z/VM 4.4.0 | 4646.812 | 0.173 | 0.181 | 0.354 |
| | delta | 158.405 | -0.001 | -0.007 | -0.008 |
| | %delta | 3.529 | -0.326 | -3.893 | -2.181 |
| 20 | z/VM 4.3.0 | 4515.199 | 0.174 | 0.186 | 0.359 |
| | z/VM 4.4.0 | 4688.292 | 0.171 | 0.176 | 0.346 |
| | delta | 173.093 | -0.003 | -0.010 | -0.013 |
| | %delta | 3.834 | -1.580 | -5.498 | -3.606 |
| 50 | z/VM 4.3.0 | 4442.913 | 0.174 | 0.186 | 0.360 |
| | z/VM 4.4.0 | 4521.320 | 0.171 | 0.174 | 0.345 |
| | delta | 78.408 | -0.003 | -0.012 | -0.015 |
| | %delta | 1.765 | -1.738 | -6.461 | -4.174 |

Note: Configuration as described in summary table.

Table 12. HiperSockets, RR, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 12659.339 | 0.042 | 0.049 | 0.091 |
| | z/VM 4.4.0 | 12958.381 | 0.042 | 0.048 | 0.089 |
| | delta | 299.043 | 0.000 | -0.002 | -0.002 |
| | %delta | 2.362 | -0.291 | -3.573 | -2.066 |
| 10 | z/VM 4.3.0 | 17085.596 | 0.043 | 0.044 | 0.087 |
| | z/VM 4.4.0 | 19681.727 | 0.040 | 0.039 | 0.079 |
| | delta | 2596.131 | -0.003 | -0.005 | -0.008 |
| | %delta | 15.195 | -7.284 | -11.096 | -9.192 |
| 20 | z/VM 4.3.0 | 18547.824 | 0.042 | 0.041 | 0.083 |
| | z/VM 4.4.0 | 19728.350 | 0.041 | 0.038 | 0.080 |
| | delta | 1180.527 | -0.001 | -0.002 | -0.003 |
| | %delta | 6.365 | -1.482 | -6.023 | -3.717 |
| 50 | z/VM 4.3.0 | 19161.731 | 0.043 | 0.038 | 0.081 |
| | z/VM 4.4.0 | 19813.819 | 0.043 | 0.036 | 0.079 |
| | delta | 652.088 | 0.000 | -0.001 | -0.002 |
| | %delta | 3.403 | -0.243 | -3.764 | -1.894 |

Note: Configuration as described in summary table.

Table 13. HiperSockets, RR, MTU 16384

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 12652.137 | 0.042 | 0.049 | 0.091 |
| | z/VM 4.4.0 | 12903.722 | 0.042 | 0.048 | 0.090 |
| | delta | 251.584 | 0.000 | -0.002 | -0.001 |
| | %delta | 1.988 | 0.405 | -3.313 | -1.606 |
| 10 | z/VM 4.3.0 | 17225.963 | 0.043 | 0.044 | 0.087 |
| | z/VM 4.4.0 | 19242.936 | 0.041 | 0.039 | 0.079 |
| | delta | 2016.973 | -0.002 | -0.005 | -0.007 |
| | %delta | 11.709 | -5.766 | -11.355 | -8.576 |
| 20 | z/VM 4.3.0 | 18558.169 | 0.042 | 0.041 | 0.083 |
| | z/VM 4.4.0 | 21767.563 | 0.041 | 0.036 | 0.077 |
| | delta | 3209.394 | -0.001 | -0.005 | -0.006 |
| | %delta | 17.294 | -3.372 | -12.231 | -7.751 |
| 50 | z/VM 4.3.0 | 19325.624 | 0.043 | 0.038 | 0.081 |
| | z/VM 4.4.0 | 19497.133 | 0.042 | 0.036 | 0.079 |
| | delta | 171.510 | 0.000 | -0.002 | -0.002 |
| | %delta | 0.887 | -1.037 | -4.691 | -2.758 |

Note: Configuration as described in summary table.

Table 14. HiperSockets, RR, MTU 32768

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 12631.402 | 0.042 | 0.049 | 0.091 |
| | z/VM 4.4.0 | 12936.554 | 0.042 | 0.048 | 0.089 |
| | delta | 305.153 | 0.000 | -0.002 | -0.002 |
| | %delta | 2.416 | 0.433 | -3.615 | -1.761 |
| 10 | z/VM 4.3.0 | 17114.653 | 0.043 | 0.044 | 0.087 |
| | z/VM 4.4.0 | 20077.202 | 0.040 | 0.039 | 0.079 |
| | delta | 2962.549 | -0.003 | -0.005 | -0.008 |
| | %delta | 17.310 | -7.099 | -10.865 | -8.988 |
| 20 | z/VM 4.3.0 | 18418.905 | 0.042 | 0.041 | 0.084 |
| | z/VM 4.4.0 | 21571.289 | 0.040 | 0.036 | 0.076 |
| | delta | 3152.385 | -0.002 | -0.006 | -0.007 |
| | %delta | 17.115 | -4.244 | -13.371 | -8.757 |
| 50 | z/VM 4.3.0 | 19163.273 | 0.043 | 0.038 | 0.081 |
| | z/VM 4.4.0 | 19707.818 | 0.043 | 0.036 | 0.079 |
| | delta | 544.545 | 0.000 | -0.002 | -0.002 |
| | %delta | 2.842 | -0.072 | -4.112 | -1.970 |

Note: Configuration as described in summary table.

Table 15. HiperSockets, RR, MTU 57344

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 12678.343 | 0.042 | 0.049 | 0.091 |
| | z/VM 4.4.0 | 12979.411 | 0.042 | 0.047 | 0.089 |
| | delta | 301.068 | 0.000 | -0.002 | -0.002 |
| | %delta | 2.375 | -0.090 | -3.647 | -2.014 |
| 10 | z/VM 4.3.0 | 17104.270 | 0.043 | 0.043 | 0.087 |
| | z/VM 4.4.0 | 18995.322 | 0.040 | 0.039 | 0.079 |
| | delta | 1891.052 | -0.003 | -0.004 | -0.007 |
| | %delta | 11.056 | -6.705 | -10.353 | -8.530 |
| 20 | z/VM 4.3.0 | 18405.769 | 0.042 | 0.041 | 0.083 |
| | z/VM 4.4.0 | 19798.269 | 0.041 | 0.038 | 0.080 |
| | delta | 1392.501 | -0.001 | -0.003 | -0.003 |
| | %delta | 7.566 | -1.740 | -6.432 | -4.054 |
| 50 | z/VM 4.3.0 | 19264.102 | 0.043 | 0.038 | 0.081 |
| | z/VM 4.4.0 | 19604.354 | 0.042 | 0.036 | 0.078 |
| | delta | 340.252 | 0.000 | -0.002 | -0.002 |
| | %delta | 1.766 | -0.956 | -4.793 | -2.760 |

Note: Configuration as described in summary table.

Table 16. HiperSockets, STRG, MTU 8992

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 8.800 | 91.936 | 90.799 | 182.735 |
| | z/VM 4.4.0 | 9.730 | 86.445 | 78.041 | 164.486 |
| | delta | 0.930 | -5.490 | -12.758 | -18.249 |
| | %delta | 10.567 | -5.972 | -14.051 | -9.986 |
| 10 | z/VM 4.3.0 | 10.747 | 86.325 | 78.230 | 164.555 |
| | z/VM 4.4.0 | 10.974 | 86.514 | 74.311 | 160.824 |
| | delta | 0.227 | 0.189 | -3.920 | -3.731 |
| | %delta | 2.113 | 0.219 | -5.011 | -2.267 |
| 20 | z/VM 4.3.0 | 10.492 | 86.294 | 77.635 | 163.929 |
| | z/VM 4.4.0 | 10.850 | 86.913 | 74.332 | 161.245 |
| | delta | 0.358 | 0.619 | -3.303 | -2.684 |
| | %delta | 3.411 | 0.717 | -4.255 | -1.637 |
| 50 | z/VM 4.3.0 | 10.311 | 87.396 | 77.557 | 164.954 |
| | z/VM 4.4.0 | 10.558 | 87.756 | 74.087 | 161.844 |
| | delta | 0.247 | 0.360 | -3.470 | -3.110 |
| | %delta | 2.397 | 0.412 | -4.474 | -1.885 |

Note: Configuration as described in summary table.

Table 17. HiperSockets, STRG, MTU 16384

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 10.630 | 76.909 | 66.497 | 143.406 |
| | z/VM 4.4.0 | 11.930 | 70.779 | 53.602 | 124.381 |
| | delta | 1.300 | -6.130 | -12.895 | -19.025 |
| | %delta | 12.227 | -7.970 | -19.392 | -13.266 |
| 10 | z/VM 4.3.0 | 12.150 | 74.404 | 60.463 | 134.867 |
| | z/VM 4.4.0 | 12.843 | 73.457 | 55.045 | 128.503 |
| | delta | 0.693 | -0.947 | -5.417 | -6.364 |
| | %delta | 5.707 | -1.272 | -8.960 | -4.719 |
| 20 | z/VM 4.3.0 | 12.020 | 74.345 | 60.075 | 134.420 |
| | z/VM 4.4.0 | 12.702 | 74.039 | 55.285 | 129.324 |
| | delta | 0.681 | -0.306 | -4.790 | -5.095 |
| | %delta | 5.667 | -0.411 | -7.973 | -3.791 |
| 50 | z/VM 4.3.0 | 11.952 | 75.360 | 60.459 | 135.819 |
| | z/VM 4.4.0 | 12.584 | 74.285 | 54.609 | 128.894 |
| | delta | 0.631 | -1.076 | -5.850 | -6.925 |
| | %delta | 5.283 | -1.427 | -9.675 | -5.099 |

Note: Configuration as described in summary table.

Table 18. HiperSockets, STRG, MTU 32768

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 5.927 | 57.809 | 34.667 | 92.477 |
| | z/VM 4.4.0 | 16.307 | 58.629 | 34.768 | 93.396 |
| | delta | 10.380 | 0.819 | 0.100 | 0.919 |
| | %delta | 175.148 | 1.417 | 0.289 | 0.994 |
| 10 | z/VM 4.3.0 | 15.612 | 59.836 | 35.985 | 95.821 |
| | z/VM 4.4.0 | 15.969 | 60.331 | 35.567 | 95.899 |
| | delta | 0.356 | 0.496 | -0.418 | 0.078 |
| | %delta | 2.282 | 0.828 | -1.160 | 0.081 |
| 20 | z/VM 4.3.0 | 14.993 | 60.152 | 35.799 | 95.951 |
| | z/VM 4.4.0 | 15.210 | 60.777 | 35.382 | 96.159 |
| | delta | 0.218 | 0.625 | -0.417 | 0.208 |
| | %delta | 1.452 | 1.040 | -1.165 | 0.217 |
| 50 | z/VM 4.3.0 | 15.206 | 61.264 | 36.655 | 97.919 |
| | z/VM 4.4.0 | 15.415 | 61.786 | 35.894 | 97.680 |
| | delta | 0.209 | 0.523 | -0.761 | -0.239 |
| | %delta | 1.373 | 0.853 | -2.077 | -0.244 |

Note: Configuration as described in summary table.

Table 19. HiperSockets, STRG, MTU 57344

| Concurrent Connections | Level | Tx/sec | vCPU/tx (msec) | cCPU/tx (msec) | tCPU/tx (msec) |
|---|---|---|---|---|---|
| 1 | z/VM 4.3.0 | 13.072 | 59.347 | 34.576 | 93.924 |
| | z/VM 4.4.0 | 15.476 | 60.047 | 35.453 | 95.501 |
| | delta | 2.404 | 0.700 | 0.877 | 1.577 |
| | %delta | 18.387 | 1.180 | 2.536 | 1.679 |
| 10 | z/VM 4.3.0 | 15.183 | 60.635 | 35.247 | 95.882 |
| | z/VM 4.4.0 | 15.434 | 61.551 | 35.480 | 97.030 |
| | delta | 0.251 | 0.916 | 0.233 | 1.148 |
| | %delta | 1.653 | 1.510 | 0.660 | 1.198 |
| 20 | z/VM 4.3.0 | 14.708 | 61.168 | 35.471 | 96.639 |
| | z/VM 4.4.0 | 15.047 | 61.844 | 35.429 | 97.274 |
| | delta | 0.339 | 0.676 | -0.041 | 0.635 |
| | %delta | 2.303 | 1.105 | -0.116 | 0.657 |
| 50 | z/VM 4.3.0 | 14.974 | 62.025 | 36.183 | 98.209 |
| | z/VM 4.4.0 | 15.104 | 62.983 | 35.908 | 98.891 |
| | delta | 0.130 | 0.957 | -0.275 | 0.682 |
| | %delta | 0.869 | 1.543 | -0.760 | 0.695 |

Note: Configuration as described in summary table.

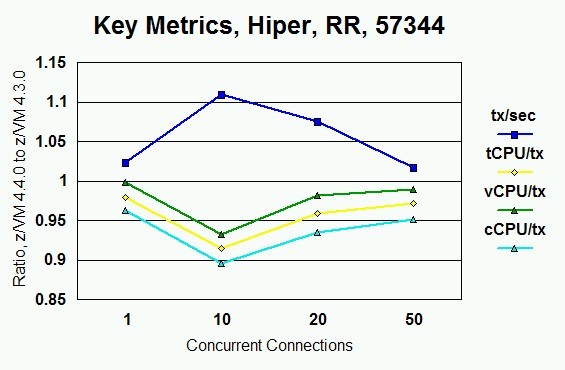

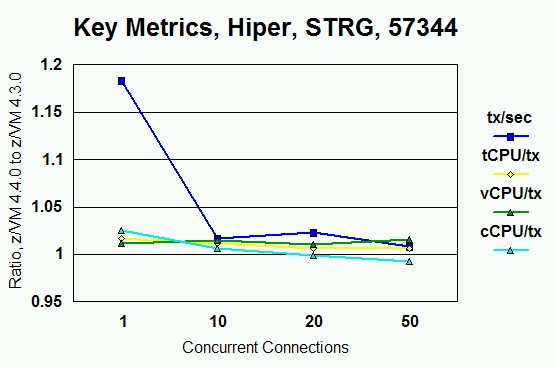

Representative Charts

To illustrate trends and typical results found in the experiments, we include charts for selected experimental results.

Each chart shows the ratio of the comparison case to the base case for the four key measures, for a given device type, workload type, and MTU size. A ratio less than 1 indicates that the metric decreased in the comparison case; a ratio greater than 1 indicates that it increased.
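The plotted ratios follow directly from the detailed tables. As an illustration, the sketch below recomputes the one-connection point of the OSA Express Gigabit Ethernet CRR chart (MTU 8992) from the rounded values in Table 3 and labels each ratio as an increase or decrease. The dictionary and function names are illustrative only; the chart itself may have been drawn from unrounded data.

```python
# One-connection row of Table 3 (OSA Express Gigabit Ethernet, CRR, MTU 8992).
BASE = {"tx/sec": 762.926, "vCPU/tx": 0.211, "cCPU/tx": 0.274, "tCPU/tx": 0.485}  # z/VM 4.3.0
COMP = {"tx/sec": 834.195, "vCPU/tx": 0.210, "cCPU/tx": 0.209, "tCPU/tx": 0.419}  # z/VM 4.4.0


def ratios(base, comp):
    """Ratio of the comparison case to the base case for each metric."""
    return {metric: comp[metric] / base[metric] for metric in base}


def trend(ratio):
    """Interpret a ratio the way the charts do."""
    if ratio < 1:
        return "decreased"
    if ratio > 1:
        return "increased"
    return "unchanged"


for metric, r in ratios(BASE, COMP).items():
    print(f"{metric:8} {r:.3f} {trend(r)}")
```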

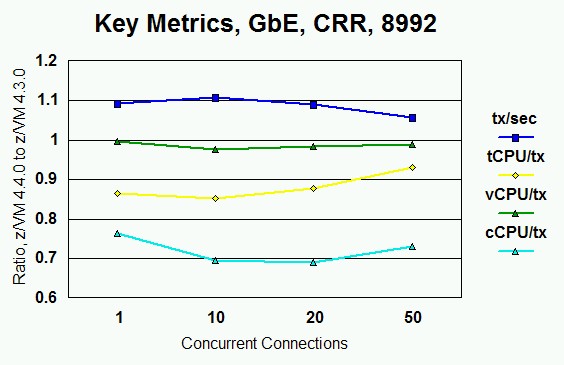

Table 20. OSA Express Gigabit Ethernet CRR

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, OSA Express Gigabit Ethernet, CRR workload, MTU 8992, 1, 10, 20, and 50 concurrent connections, over four key metrics.

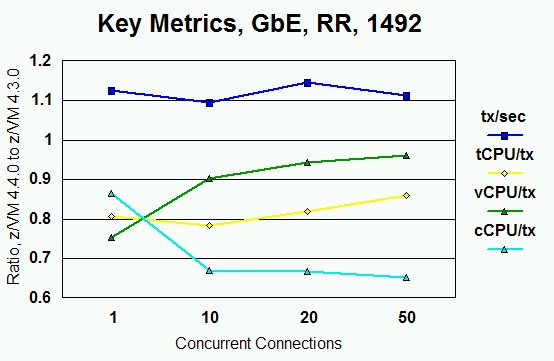

Table 21. OSA Express Gigabit Ethernet RR

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, OSA Express Gigabit Ethernet, RR workload, MTU 1492, 1, 10, 20, and 50 concurrent connections, over four key metrics.

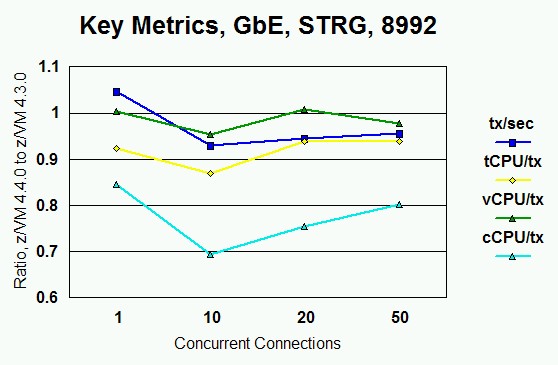

Table 22. OSA Express Gigabit Ethernet STRG

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, OSA Express Gigabit Ethernet, STRG workload, MTU 8992, 1, 10, 20, and 50 concurrent connections, over four key metrics.

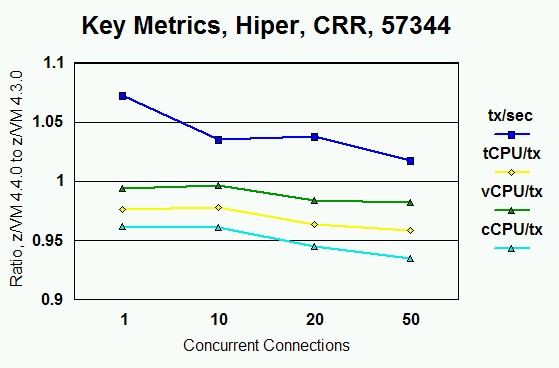

HiperSockets CRR

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, HiperSockets (MFS 64K), CRR workload, MTU 57344, 1, 10, 20, and 50 concurrent connections, over four key metrics.

HiperSockets RR

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, HiperSockets (MFS 64K), RR workload, MTU 57344, 1, 10, 20, and 50 concurrent connections, over four key metrics.

HiperSockets STRG

Note: Ratio of Linux on z/VM 4.4.0 to Linux on z/VM 4.3.0, HiperSockets (MFS 64K), STRG workload, MTU 57344, 1, 10, 20, and 50 concurrent connections, over four key metrics.

Discussion of Results

IBM's intention for Queued I/O Assist was to reduce host (z/VM Control Program) processing time per transaction by removing some of the overhead and complexity associated with interrupt delivery. The measurements here show that the assist did its work:

- For OSA Express Gigabit Ethernet, the assist removed about 27% of the CP processing time per transaction, considered over all transaction types.

- For HiperSockets, the assist removed about 6% of the CP processing time per transaction, considered over all transaction types.

We saw, and were pleased by, attendant rises in transaction rates, though the intent of the assist was to attack host processor time per transaction, not necessarily transaction rate. (Other factors, notably the z990 having to wait on the OSA Express card, limit transaction rate.)

We did not expect the assist to have much effect on virtual time per transaction, and by and large this turned out to be the case. The OSA Express Gigabit Ethernet RR workload is an unexplained exception: its virtual time per transaction dropped by about 11%.

The reader will notice we published only Linux numbers for evaluating this assist. The CP time induced by a Linux guest in these workloads is largely confined to the work CP does to shadow the guest's QDIO tables and interact with the real QDIO device. This means Linux is an appropriate guest to use for assessing the effect of an assist such as this, because the runs are not polluted with other work CP might be doing on behalf of the guests.

We did perform Queued I/O Assist experiments for the VM TCP/IP stack. We saw little to no effect on the VM stack's CP time per transaction. One reason for this is that the work CP does for the VM stack is made up of lots of other kinds of CP work (namely, IUCV to the CMS clients) on which the assist has no effect. Another reason for this is that CP actually does less QDIO device management work for the VM stack than it does for Linux, because the VM stack uses Diag X'98' to drive the adapter and thereby has no shadow tables. Thus there is less opportunity to remove CP QDIO overhead from the VM stack case.

Similarly, a Linux QDIO workload in a customer environment might not experience the percentage changes in CP time that we observed in these workloads. If the customer's Linux guest causes CP work other than QDIO (e.g., paging, DASD I/O), that other work will tend to dilute the percentage changes offered by these QDIO improvements.
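The dilution effect is simple proportional arithmetic: if the assist trims only the QDIO-related share of a guest's CP time, the overall percentage drop shrinks in proportion to that share. The sketch below assumes, hypothetically, a guest whose CP time per transaction is half QDIO work and half other work (paging, DASD I/O), and applies a 27% cut, roughly the OSA Express figure measured here, to the QDIO part only. It also assumes that the measured 27% reflects QDIO work alone, which is reasonable only because CP time in these runs was largely confined to QDIO handling. The component values and the `cp_time_after_assist` helper are invented for illustration.

```python
def cp_time_after_assist(cp_ms_per_tx, qdio_cut):
    """Apply a fractional cut to the 'qdio' component only; other CP work is unchanged."""
    return sum(
        ms * (1 - qdio_cut) if component == "qdio" else ms
        for component, ms in cp_ms_per_tx.items()
    )


# Hypothetical guest: half of its CP time per transaction is QDIO handling.
guest_cp = {"qdio": 0.20, "paging": 0.10, "dasd_io": 0.10}  # ms per transaction (invented)
before = sum(guest_cp.values())
after = cp_time_after_assist(guest_cp, qdio_cut=0.27)
print(f"CP time/tx change: {100 * (after - before) / before:.1f}%")  # -13.5%
```

With QDIO at 100% of the guest's CP time, the same function returns the full 27% reduction; at 50% the benefit halves, which is the dilution described above.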

These workloads used only two Linux guests, running networking benchmark code flat-out, in a two-way dedicated LPAR. This environment is particularly well-disposed toward keeping the guests in SIE and thus letting the passthrough portion of the assist have its full effect. In workloads with a large number of diverse guests, it is less likely that a guest using a QDIO device will happen to be in SIE at the moment its interrupt arrives from the adapter, so we expect the effect of the passthrough portion of the assist to be diluted in such an environment. Note that the alerting portion of the assist still applies.

CP does offer a NOQIOASSIST option on the ATTACH command, so as to turn off the alerting portion of the assist. When ATTACH NOQIOASSIST is in effect, z/VM instructs the z990 not to issue AI alerts; rather, z/VM handles the AIs the "old way", that is, for each arriving AI, z/VM searches all guests' QDIO shadow queues to find the guest to which the AI should be presented. This option is intended for debugging or for circumventing field problems discovered in the alerting logic.

Similarly, CP offers a SET QIOASSIST OFF command, which turns off the passthrough portion of the assist. When the passthrough assist is turned off, z/VM fields the AI and stacks the interrupt for the guest, in the same manner as it would stack other kinds of interrupts for the guest.

Again, this command is intended for debugging or for circumventing field problems in z990 millicode.