z/VM Performance Report

IBM Corporation

z/VM Performance Evaluation

Generated 2016-11-17 15:12:58 EST from this online edition:

http://www.vm.ibm.com/perf/reports/zvm/html/

This PDF version is intended for those who wish to save a complete report locally, for offline viewing later.

To send us feedback about this PDF version, visit the VM Feedback Page.

Table of Contents

Notices

Programming Information

Trademarks

Acknowledgements

Introduction

Update Log

Referenced Publications

z/VM 6.4

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

Memory Management Serialization Contention Relief and 2 TB Central Storage Support

z/VM Paging

CP Scheduler Improvements

RSCS TCPNJE Encryption

TLS/SSL Server Changes

z/VM for z13

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

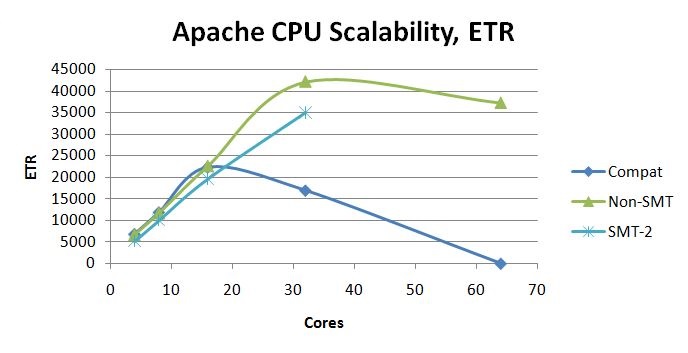

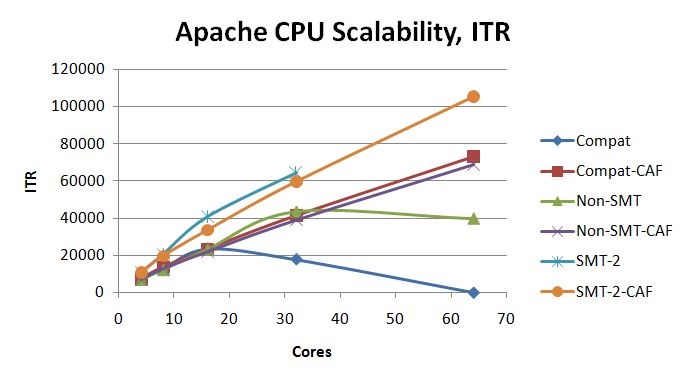

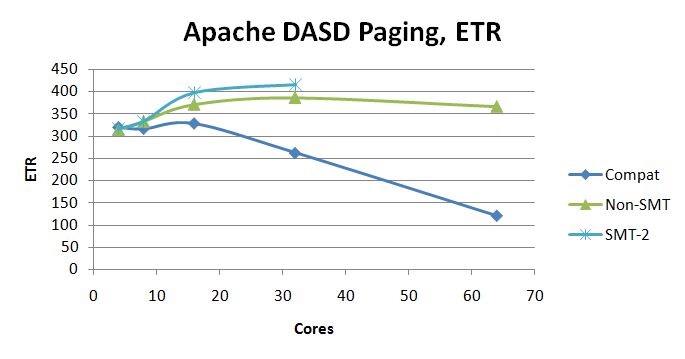

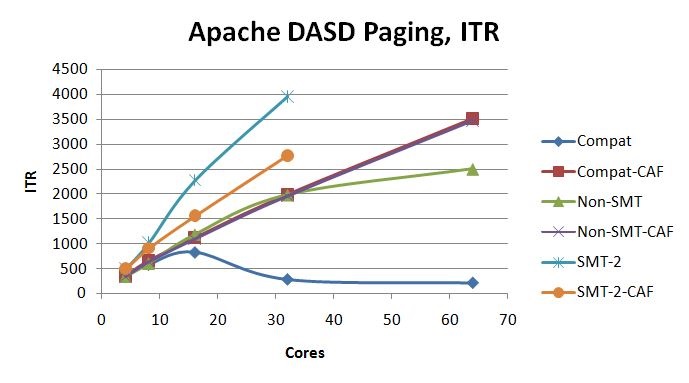

Simultaneous Multithreading (SMT)

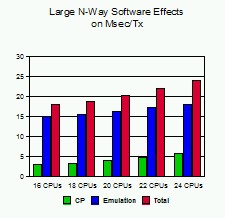

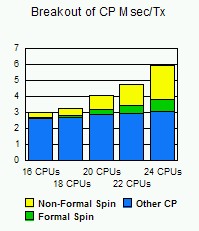

System Scaling Improvements

z/VM Version 6 Release 3

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

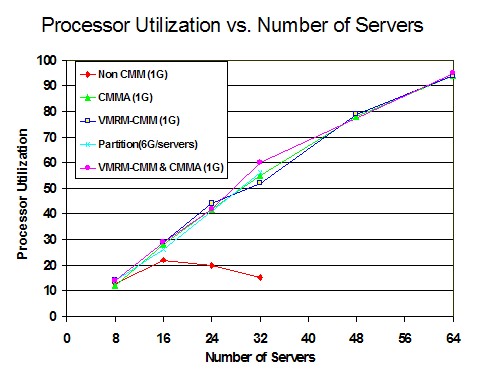

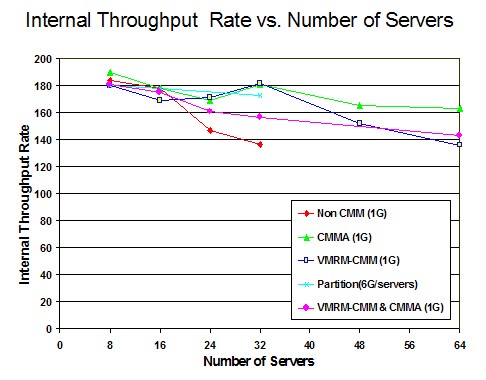

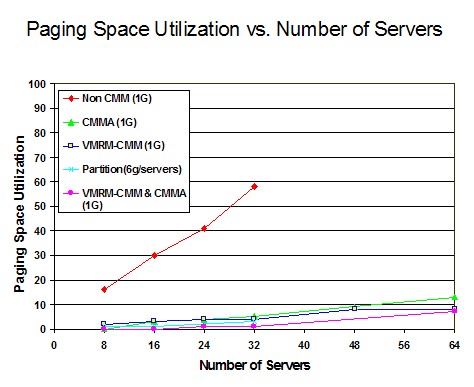

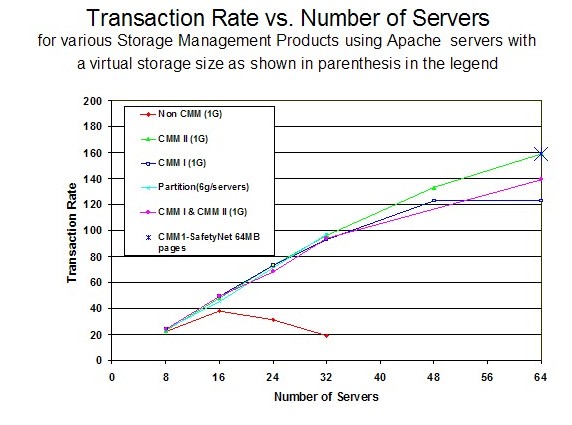

Storage Management Scaling Improvements

z/VM HiperDispatch

System Dump Improvements

CPU Pooling

z/VM Version 6 Release 2

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

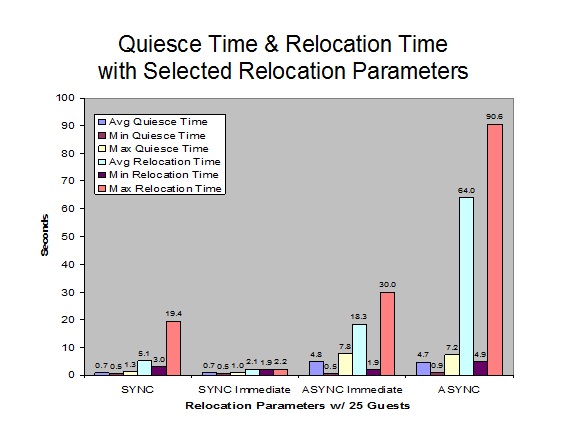

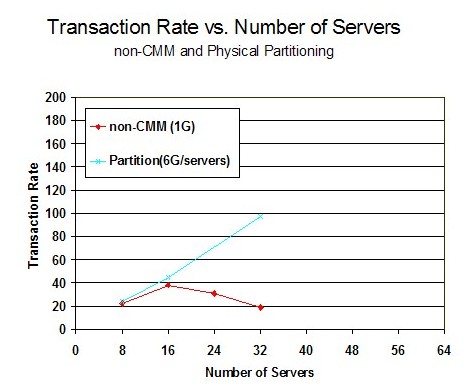

Live Guest Relocation

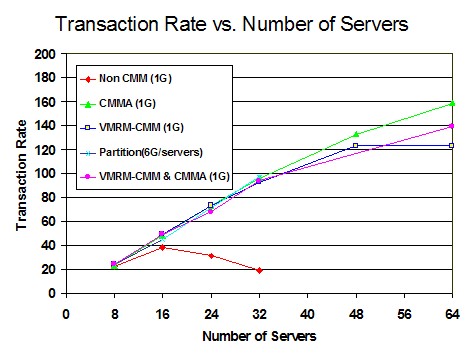

Workload and Resource Distribution

ISFC Improvements

Storage Management Improvements

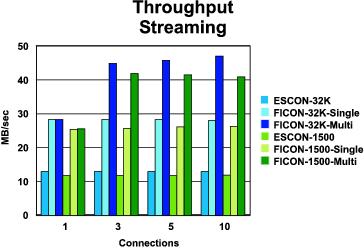

High Performance FICON

z/VM Version 5 Release 4

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

Dynamic Memory Upgrade

Specialty Engine Enhancements

DCSS Above 2 GB

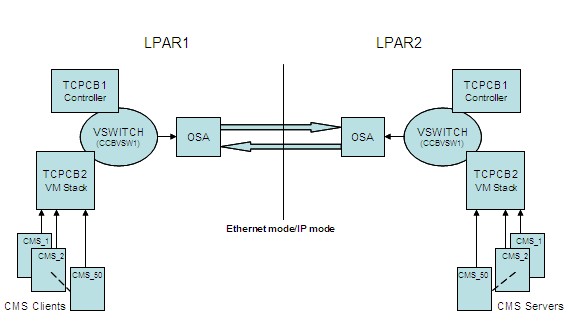

z/VM TCP/IP Ethernet Mode

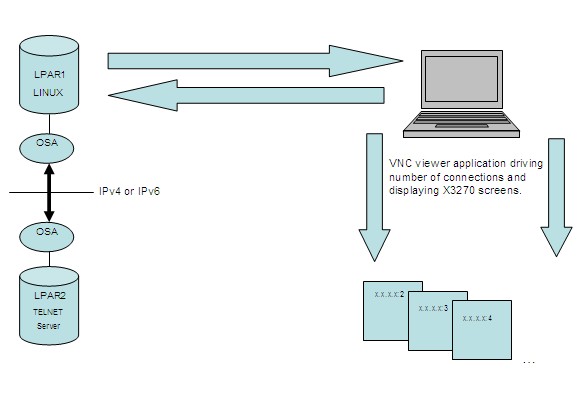

z/VM TCP/IP Telnet IPv6 Support

Update

z/VM Version 5 Release 3

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

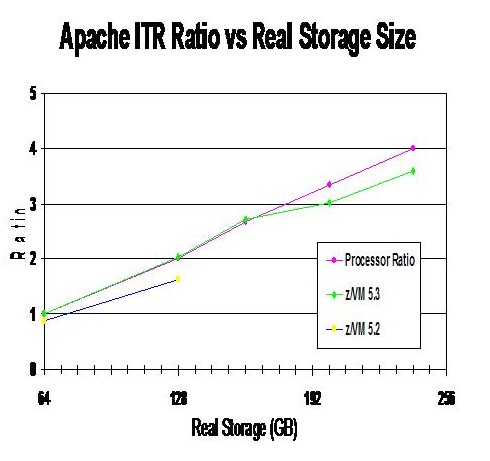

Improved Real Storage Scalability

Memory Management: VMRM-CMM and CMMA

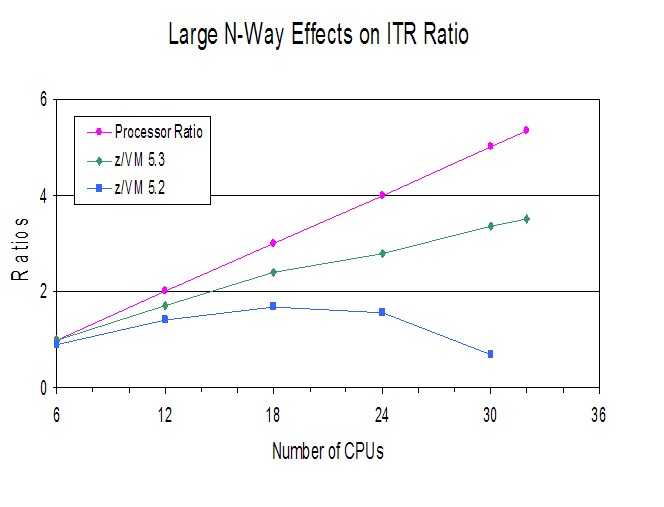

Improved Processor Scalability

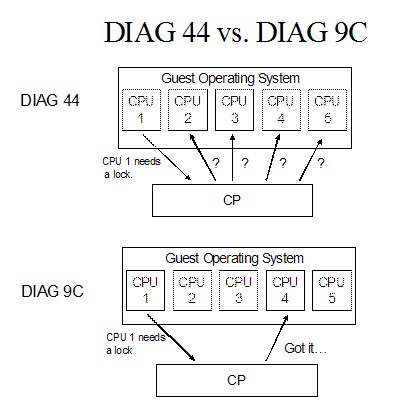

Diagnose X'9C' Support

Specialty Engine Support

SCSI Performance Improvements

z/VM HyperPAV Support

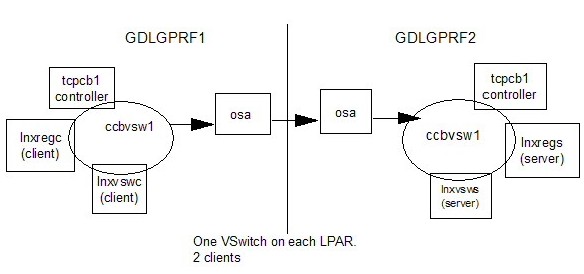

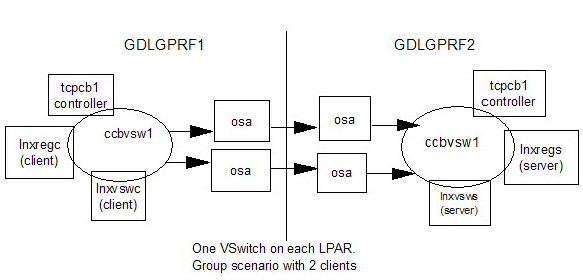

Virtual Switch Link Aggregation

z/VM Version 5 Release 2.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

Migration from z/VM 5.1.0

CP Regression Measurements

CP Disk I/O Performance

New Functions

Enhanced Large Real Storage Exploitation

Extended Diagnose X'44' Fast Path

QDIO Enhanced Buffer State Management

z/VM PAV Exploitation

Additional Evaluations

Linux Disk I/O Alternatives

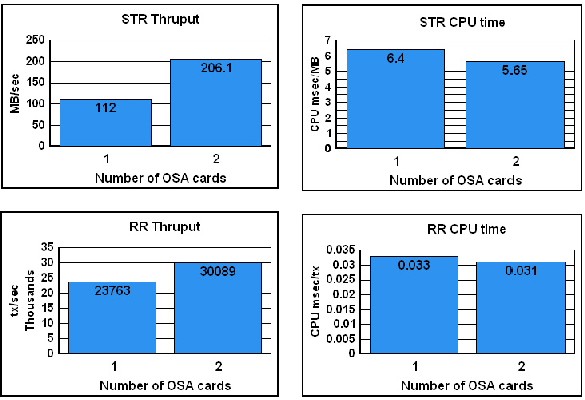

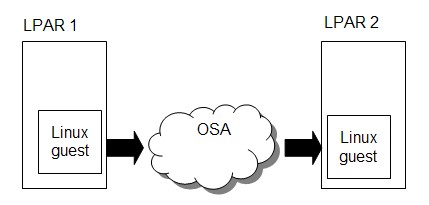

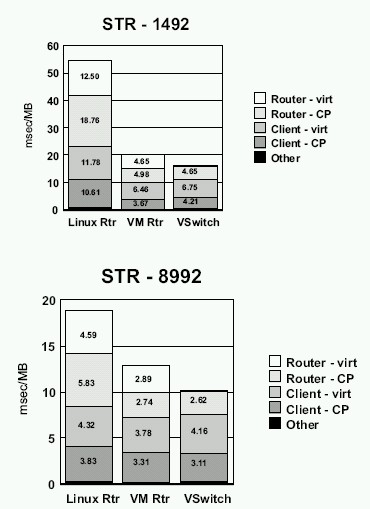

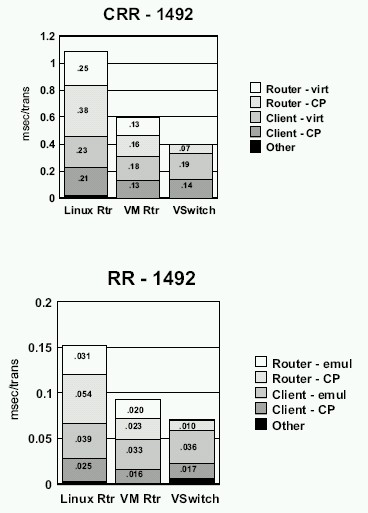

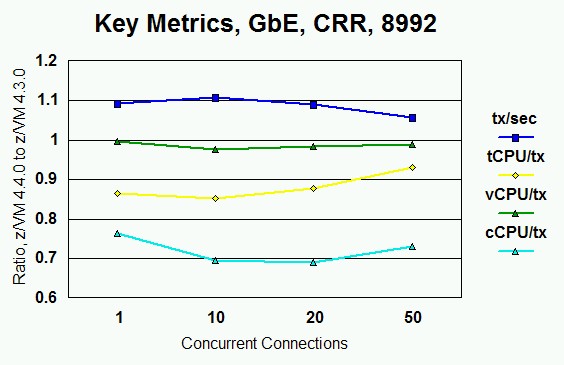

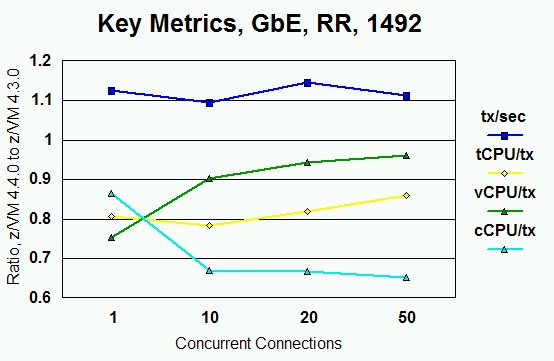

Dedicated OSA vs. VSWITCH

Layer 3 and Layer 2 Comparisons

Guest Cryptographic Enhancements

z/VM Version 5 Release 1.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

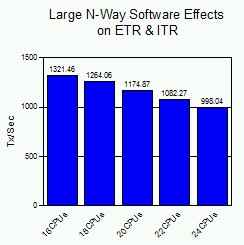

24-Way Support

Emulated FBA on SCSI

Internet Protocol Version 6 Support

Virtual Switch Layer 2 Support

Additional Evaluations

z990 Guest Crypto Enhancements

z/VM Version 4 Release 4.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Management

New Functions

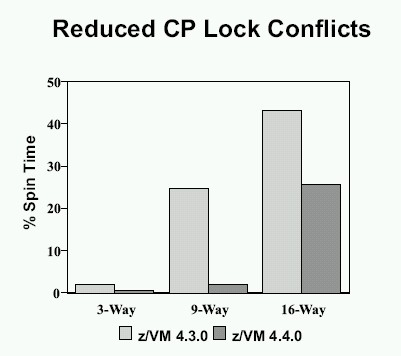

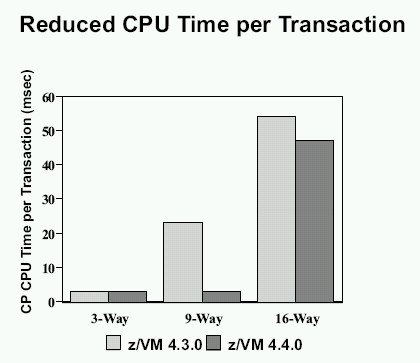

Scheduler Lock Improvement

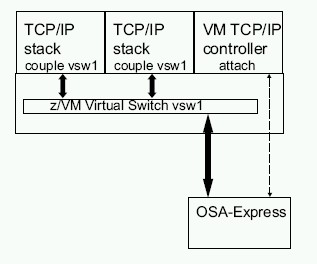

z/VM Virtual Switch

Queued I/O Assist

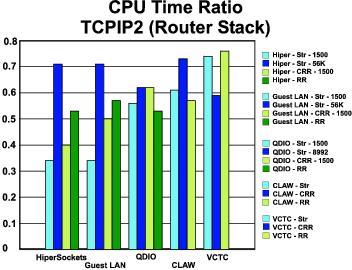

TCP/IP Stack Improvement Part 2

TCP/IP Device Layer MP Support

Additional Evaluations

Linux Guest Crypto on z990

z/VM Version 4 Release 3.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

New Functions

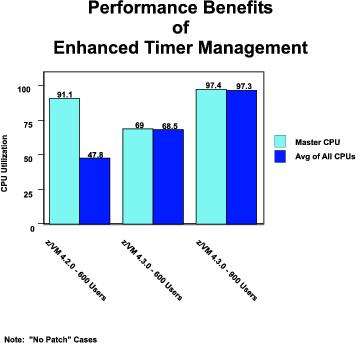

Enhanced Timer Management

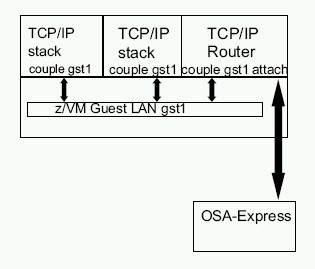

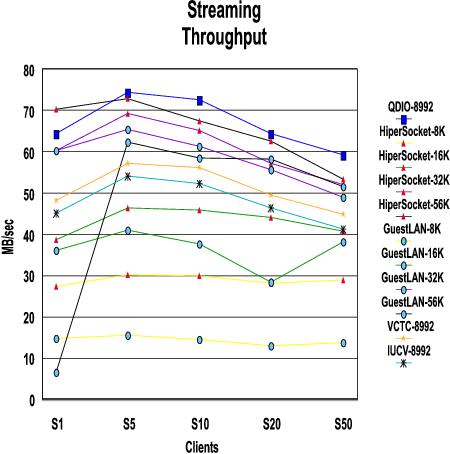

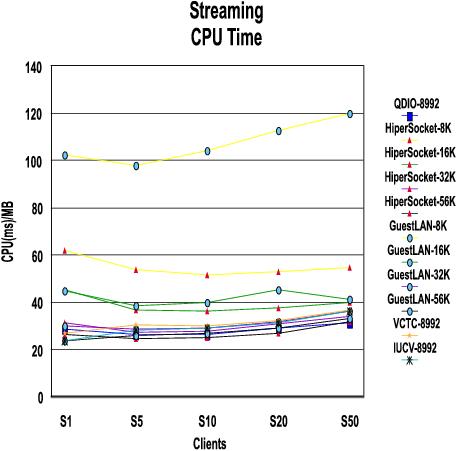

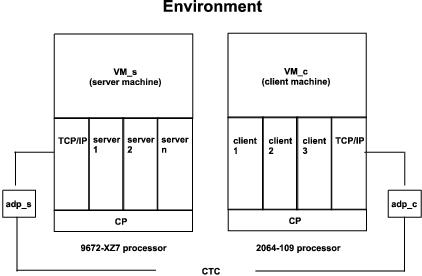

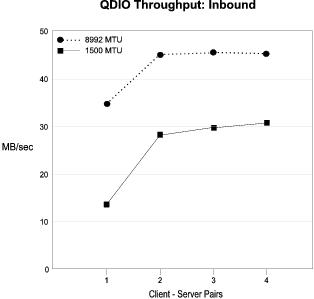

VM Guest LAN: QDIO Simulation

Linux Guest Crypto Support

Improved Utilization of Large Real Storage

Accounting for Virtualized Network Devices

Large Volume CMS Minidisks

TCP/IP Stack Performance Improvements

z/VM Version 4 Release 2.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Management

New Functions

HiperSockets

64-bit Fast CCW Translation

64-bit Asynchronous Page Fault Service (PFAULT)

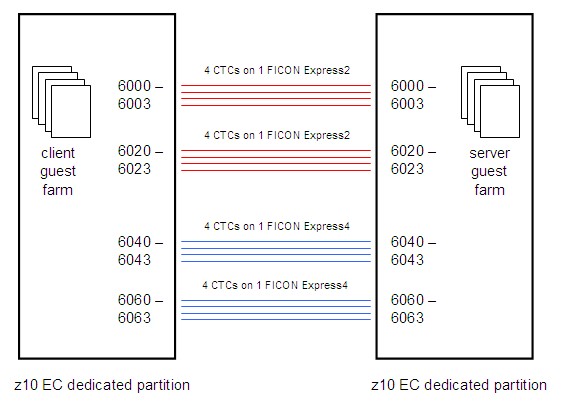

Guest Support for FICON CTCA

DDR LZCOMPACT Option

IMAP Server

Additional Evaluations

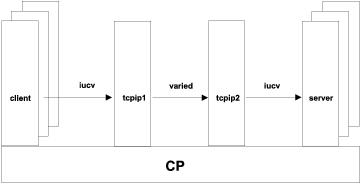

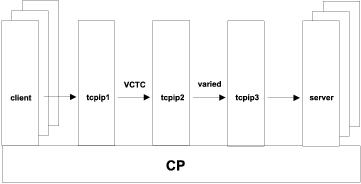

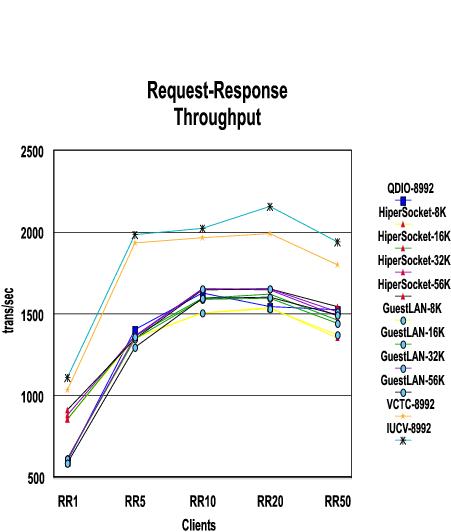

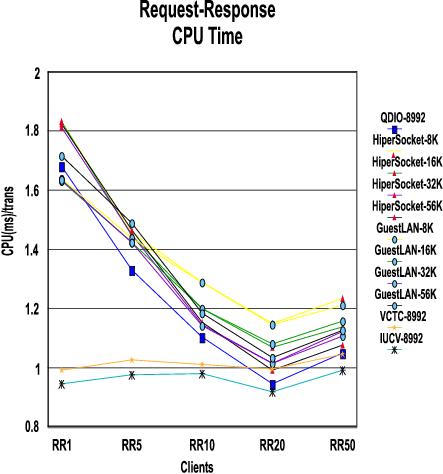

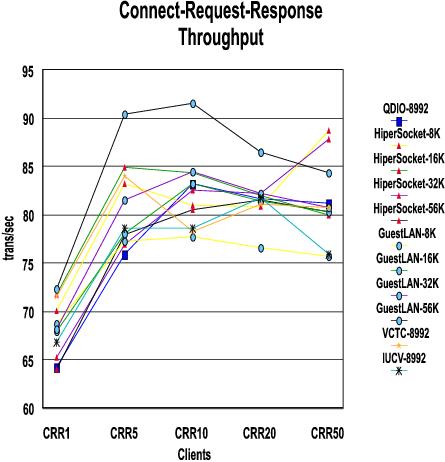

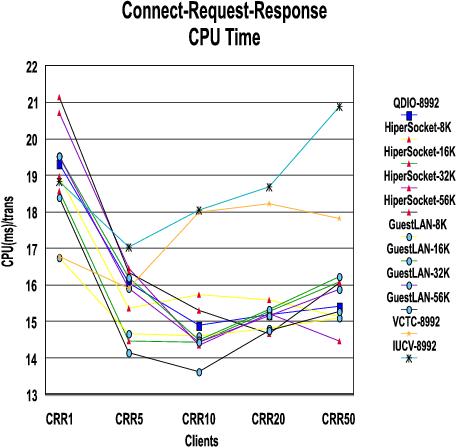

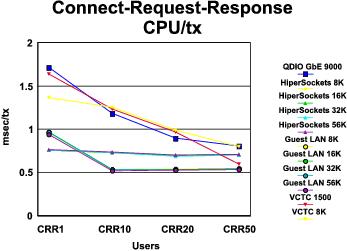

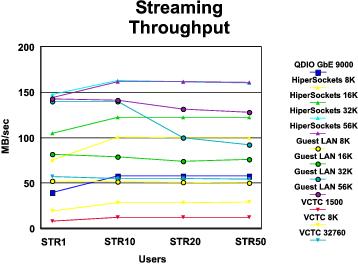

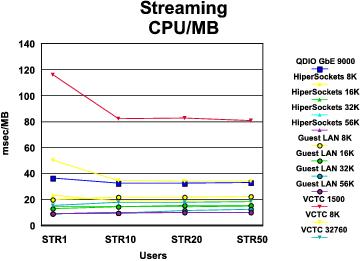

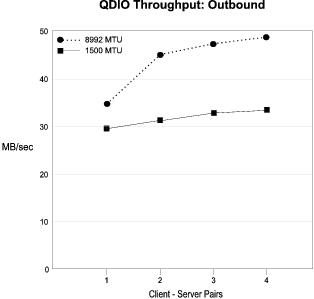

Linux Connectivity Performance

Linux Guest DASD Performance

z/VM Version 4 Release 1.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Management

New Functions

Fast CCW Translation for Network I/O

z/VM Version 3 Release 1.0

Summary of Key Findings

Changes That Affect Performance

Performance Improvements

Performance Considerations

Performance Management

Migration from VM/ESA 2.4.0 and TCP/IP FL 320

CMS-Intensive

2064 2-Way LPAR, 1G/2G

2064-1C8, 2G/6G

2064-1C8, 2G/10G

VSE/ESA Guest

TCP/IP

Telnet

FTP

Migration from Other VM Releases

New Functions

CP 64-Bit Support

Real Storage Sizes above 2G

Minidisk Cache with Large Real Storage

The 2G Line

Queued Direct I/O Support

Secure Socket Layer Support

Additional Evaluations

Linux Guest IUCV Driver

Virtual Channel-to-Channel Performance

Migration from VTAM to Telnet

Comparison of CMS1 to FS8F

Workloads

AWM Workload

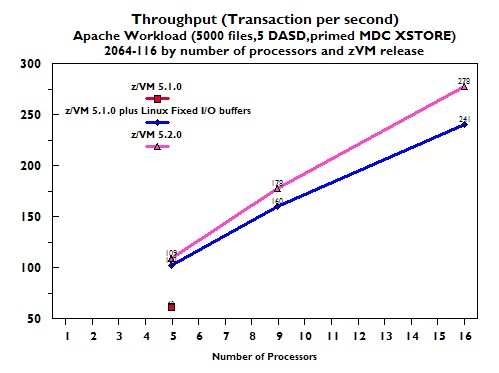

Apache Workload

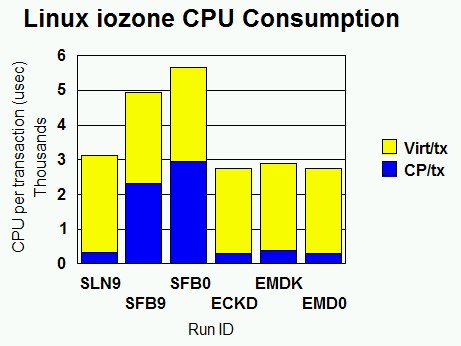

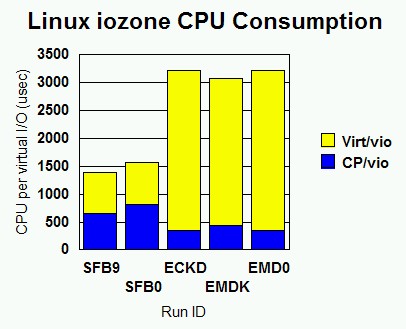

Linux IOzone Workload

Linux OpenSSL Exerciser

z/OS Secure Sockets Layer (System SSL) Performance Workload

z/OS DB2 Utility Workload

z/OS Java Encryption Performance Workload

z/OS Integrated Cryptographic Service Facility (ICSF) Performance Workload

CMS-Intensive (FS8F)

CMS-Intensive (CMS1)

VSE Guest (DYNAPACE)

z/OS File System Performance Tool

z/OS IP Security (IPSec) Performance Workload

Virtual Storage Exerciser (VIRSTOEX or VIRSTOCX)

PING Workload

PFAULT Workload

BLAST Workload

ISFC Workloads

IO3390 Workload

z/VM HiperDispatch Workloads

Middleware Workload DayTrader (DT)

Master Processor Exerciser (VIRSTOMP)

Glossary of Performance Terms

Footnotes

Notices

The information contained in this document has not been submitted to any formal IBM test and is distributed on an as is basis without any warranty either expressed or implied. The use of this information or the implementation of any of these techniques is a customer responsibility and depends on the customer's ability to evaluate and integrate them into the customer's operational environment. While each item may have been reviewed by IBM for accuracy in a specific situation, there is no guarantee that the same or similar results will be obtained elsewhere. Customers attempting to adapt these techniques to their own environments do so at their own risk.

Performance data contained in this document were determined in various controlled laboratory environments and are for reference purposes only. Customers should not adapt these performance numbers to their own environments as system performance standards. The results that may be obtained in other operating environments may vary significantly. Users of this document should verify the applicable data for their specific environment.

This publication refers to some specific APAR numbers that have an effect on performance. The APAR numbers included in this report may have prerequisites, corequisites, or fixes in error (PEs). The information included in this report is not a replacement for normal service research.

References in this publication to IBM products, programs, or services do not imply that IBM intends to make these available in all countries in which IBM operates. Any reference to an IBM licensed program in this publication is not intended to state or imply that only IBM's program may be used. Any functionally equivalent product, program, or service that does not infringe any of the intellectual property rights of IBM may be used instead of the IBM product, program, or service. The evaluation and verification of operation in conjunction with other products, except those expressly designated by IBM, are the responsibility of the user.

IBM may have patents or pending patent applications covering subject matter in this document. The furnishing of this document does not give you license to these patents. You can send inquiries, in writing, to the IBM Director of Licensing, IBM Corporation, North Castle Drive, Armonk, NY, 10594-1785 USA.

Back to Table of Contents.

Programming Information

This publication is intended to help the customer understand the performance of z/VM on various IBM processors. The information in this publication is not intended as the specification of any programming interfaces that are provided by z/VM. See the IBM Programming Announcement for the z/VM releases for more information about what publications are considered to be product documentation.

Back to Table of Contents.

Trademarks

The following terms are trademarks of the IBM Corporation in the United States or other countries or both:

- AIX

- ACF/VTAM

- CICS

- DS6000

- DS8000

- ECKD

- ES/9000

- ESCON

- FICON

- GDPS

- HiperSockets

- HyperSwap

- IBM

- MVS

- OMEGAMON

- OS/2

- PR/SM

- Performance Toolkit for VM

- Processor Resource/Systems Manager

- PR/SM

- RACF

- RAMAC

- RS/6000

- System/390

- S/390

- System z

- System z9

- System z10

- Tivoli

- Virtual Machine/Enterprise Systems Architecture

- VM/ESA

- VM/XA

- VSE/ESA

- VTAM

- z Systems

- z13

- zSeries

- z/Architecture

- z/VM

- 3090

Linux is a trademark of Linus Torvalds in the United States, other countries, or both.

Windows NT is a trademark of Microsoft Corporation in the United States and other countries.

Pentium is a trademark of Intel Corporation in the United States and other countries.

Cisco is a trademark of Cisco Systems Inc., in the United States and other countries.

SUSE is a trademark of Novell, Inc., in the United States and other countries.

Other company, product, and service names might be trademarks or service marks of others.

Back to Table of Contents.

Acknowledgements

The following people contributed to this report:

Cherie Barnes

Bill Bitner

Victor Boyarintsev

Bill Cosnett

Dean DiTommaso

Tom Doster

Wes Ernsberger

Bill Guzior

Steve Jones

Virg Meredith

Bob Neill

Bernd Nitsch

Dorin Pascar

Bailey (Bei) Peng

Patty Rando

Walter Roth

Ann Shepherd

Dave Spencer

Marek Szermutzky

Joe Tingley

Xenia Tkatschow

Brian Wade

Editor's Note for the z/VM 5.4 Edition

In June 2008 our long-time z/VM Performance Report editor, Wes Ernsberger, retired after more than 39 years of faithful service to IBM, many of which he gave to z/VM and its predecessors. Starting with z/VM 5.4, the responsibility of coordinating, editing, and publishing the report falls to me.

In 1785 Benjamin Franklin retired from his six-year post as American Minister to France. Appointed to fill the position was none other than Thomas Jefferson. When Jefferson arrived in France, the French Foreign Minister, Charles Gravier, comte de Vergennes, asked him: "Is it you who replace Dr. Franklin?" Jefferson, himself a genius statesman, replied, "Sir, I am only his successor. No one can replace Dr. Franklin."

And so it is with you, Wes. Best wishes in your retirement.

Brian Wade

September 19, 2008

Editor's Note for the z/VM 6.4 Edition

During the development of z/VM 6.4 our longtime mentor, colleague, and friend Virg Meredith retired after 52 years of faithful service to the performance of IBM's mainframes. Virg spent many of those years helping both the z/VM product and literally generations of z/VMers to become the best they could be. Virg, we miss you and wish you the very best in your retirement.

Brian Wade

November 11, 2016

Other Notes

In memoriam, Joe Tingley, May 10, 2012.

Back to Table of Contents.

Introduction

The z/VM Performance Report summarizes the z/VM performance evaluation results. For each z/VM release, discussion covers the performance changes in that release, the performance effects of migrating from the prior release, measurement results for performance-related new functions, and additional evaluations that occurred during the time frame of that release.

Back to Table of Contents.

Update Log

This document is refreshed as additional z/VM performance information becomes available. These updates are listed below:

Date |

Action |

11 Nov 2016 | z/VM 6.4 |

27 Mar 2015 | z/VM for z13 |

12 Jun 2014 | Added section "CPU Pooling" |

09 Aug 2013 | z/VM 6.3 |

11 May 2012 | Added section "High Performance FICON" |

02 Dec 2011 | z/VM 6.2 |

16 Dec 2008 | Added section "CMS-Based SSL Server" |

19 Sep 2008 | z/VM 5.4 |

19 Feb 2008 | Added section "Memory Management: VMRM-CMM and CMMA" |

08 Nov 2007 | Added section "Virtual Switch Link Aggregation" |

01 Nov 2007 | Added section "Diagnose X'9C' Support" |

29 Jun 2007 | z/VM 5.3 |

07 Aug 2006 | Added section "z/VM PAV Exploitation" |

01 Feb 2006 | Added section "QDIO Enhanced Buffer State Management" |

12 Jan 2006 | Added section "Layer 3 and Layer 2 Comparisons" |

16 Dec 2005 | z/VM 5.2 |

05 Jan 2005 | Added section "Virtual Switch Layer 2 Support" |

24 Sep 2004 | z/VM 5.1 |

23 Feb 2004 | "Queued I/O Assist" and "TCP/IP Device Layer MP Support" sections added |

15 Aug 2003 | z/VM 4.4 |

18 Jun 2002 | "Enhanced Timer Management" and "VM Guest LAN: QDIO Simulation" sections added |

31 May 2002 | z/VM 4.3 |

03 May 2002 | Added section "Guest Support for FICON CTCA" |

27 Mar 2002 | z/VM 4.2 |

22 Jun 2001 | z/VM 4.1 |

16 Mar 2001 | Added section "Linux Guest IUCV Driver" |

22 Feb 2001 | z/VM 3.1 (base document) |

Back to Table of Contents.

Referenced Publications

The following publications and documents are referred to in this report.

- z/VM: Saved Segments Planning and Administration

- z/VM: Getting Started with Linux on System z

- z/VM: Performance

- z/VM: CP Planning and Adminstration

- z/VM: CP Commands and Utilities Reference

- z/VM: Running Guest Operating Systems

- z/VM: CMS Commands and Utilities Reference

- z/VM: Connectivity

- VM Performance Reporting Facility

- z/VM Performance Toolkit

These can be found on our publications page.

The following publications are performance reports for earlier VM releases:

- VM/ESA Version 2 Release 4.0 Performance Report

- VM/ESA Version 2 Release 3.0 Performance Report

- VM/ESA Version 2 Release 2.0 Performance Report

- VM/ESA Version 2 Release 1.0 Performance Report

- VM/ESA Release 2.2 Performance Report

- VM/ESA Release 2.1 Performance Report

- VM/ESA Release 2 Performance Report

- VM/ESA Release 1.1 Performance Report

- VM/ESA Release 1.0 Performance Report

These are available as PDF files at our performance reports page. Much additional VM performance information is available on our performance page.

Back to Table of Contents.

z/VM 6.4

z/VM 6.4 brings increased memory scalability, increased paging scalability, and improvements to the CP scheduler. It also brings encryption of RSCS TCPNJE connections and changes to the SSL default cipher strength.

The following sections discuss the performance characteristics of z/VM 6.4 and the results of the performance evaluation.

Back to Table of Contents.

Summary of Key Findings

This section summarizes key z/VM 6.4 performance items and contains links that take the reader to more detailed information about each one.

Further, the Performance Improvements article gives information about other performance enhancements in z/VM 6.4.

For descriptions of other performance-related changes, see the z/VM 6.4 Performance Considerations and Performance Management sections.

To compare the performance of z/VM 6.4 to the performance of previous releases, IBM ran a variety of workloads on the two systems. For the base case, IBM used z/VM 6.3 plus all Control Program (CP) PTFs available as of March 31, 2016. For the comparison case, IBM used z/VM 6.4 at the "code freeze" level of August 15, 2016. The runs were done on a mix of zEC12 and z13.

Regression measurements comparing these two z/VM levels showed improvement on z/VM 6.4 compared to z/VM 6.3. ETRR had mean 1.10 and standard deviation 0.25. ITRR had mean 1.15 and standard deviation 0.37.

Runs showing large improvements tended to be either memory-constrained workloads that got the benefit of the memory management and paging work or networking runs that got the benefit of repairs to the handling of jumbo frames. Most of the rest of the runs showed ratios in the neighborhood of 1.

Key Performance Improvements

z/VM 6.4 contains the following enhancements that offer performance improvements compared to previous z/VM releases:

Memory Constraint Relief and 2 TB Exploitation: z/VM can now use a central storage size of 2 TB (2048 GB). This is due in part to serialization constraint relief that was done in the memory management subsystem. For more information, read the chapter.

HyperPAV and zHPF Paging: z/VM can now use HyperPAV aliases for paging. It also can now use High Performance FICON, aka zHPF, channel programs for paging. For more information, read the chapter.

Other Functional Enhancements

These additional functional enhancements since z/VM for z13 are also notable:

CP Scheduler Improvements: In 2014 a customer reported z/VM's scheduler did not observe or enforce share setting correctly in certain situations. z/VM 6.4 made repairs to the scheduler. For more information, read the chapter.

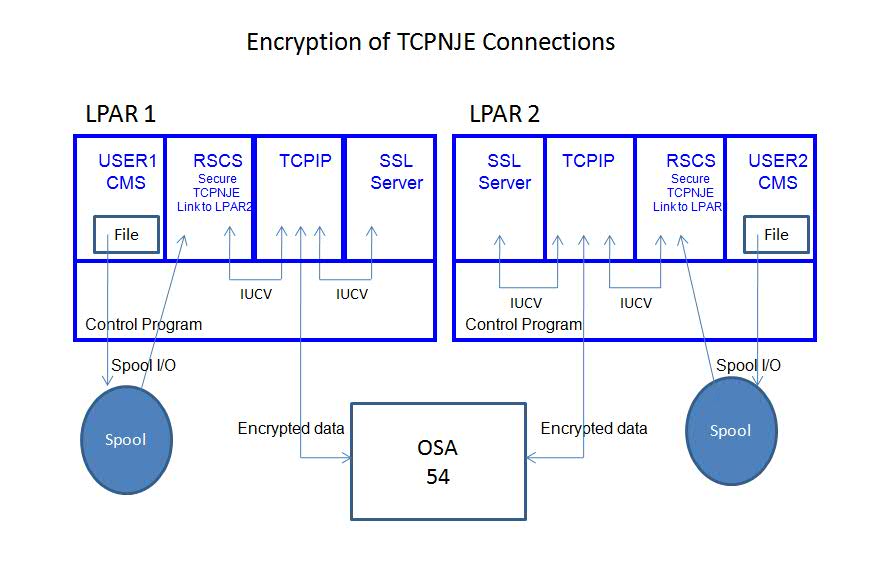

Encryption of RSCS TCPNJE Connections: In z/VM 6.3 with the PTF for APAR VM65788 IBM shipped service for RSCS that will let it encrypt the traffic that flows across a TCPNJE link. To accomplish this RSCS exploits z/VM TCP/IP's Secure Sockets Layer or SSL. Though there is some performance impact compared to running the TCPNJE link unencrypted, some customers might wish to make the tradeoff. For more information, read the chapter.

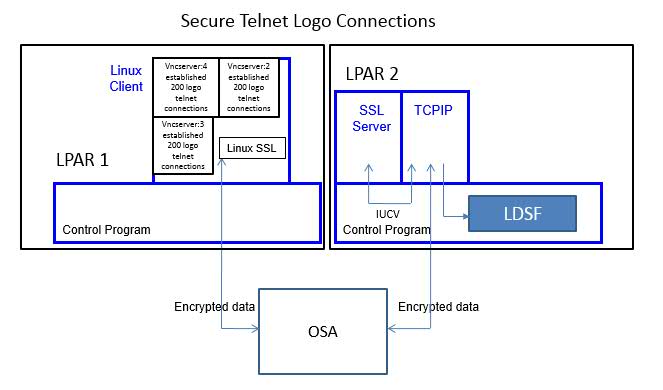

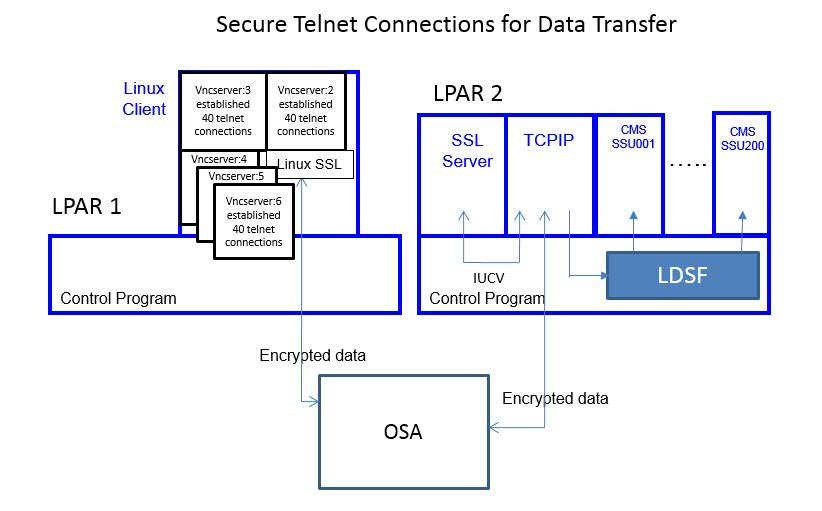

TCP/IP Encryption Uplift: In z/VM 6.4 certain TCP/IP encryption defaults are strengthened and a new version of System SSL is present. Using two Telnet workloads IBM evaluated all of these changes. For more information, read the chapter.

Back to Table of Contents.

Changes That Affect Performance

This chapter contains descriptions of various changes in z/VM 6.4 that affect performance. It is divided into three sections -- Performance Improvements, Performance Considerations, and Performance Management.

Back to Table of Contents.

Performance Improvements

Large Enhancements

In Summary of Key Findings this report gives capsule summaries of the performance notables in z/VM 6.4.

Small Enhancements

z/VM 6.4 contains several small functional enhancements related to performance.

VM65733: Support for the new z Systems Vector Facility (SIMD).

VM65716: Support for the z13 Driver D27, including LPAR Group Absolute Capacity Capping. Also included were exploitation of Single Increment Assign Control to make dynamic memory upgrade more efficient and decreasing CP's real crypto adapter polling interval to benefit APVIRT users.

VM65680: Support for prorated core time. This in turn lets ILMT work correctly.

VM65583: Support for multi-VSWITCH link aggregation.

Service Since z/VM for z13

z/VM 6.4 also contains a number of performance repairs that were first shipped as service to earlier releases. Because IBM refreshes this report only occasionally, IBM has not yet had a chance to describe these PTFs.

VM64587: VDISK pages were not being stolen aggressively enough.

VM64770: The read-in of guest PGMBKs at guest logoff was inefficient.

VM64890: A bad loop counter caused excessive CPU consumption in Minidisk Cache (MDC).

VM64941: In some situations the guest change-bit could end up being wrongly set for an IBR page. This could in turn cause excess processing in the guest.

VM65097: PGMBK prefetch can slow the system when Diag x'10' requests are single-page.

VM65101: Invalid-but-resident (IBR) pages on the global aging list were being rewritten to DASD even though they had never been changed since having been read from DASD.

VM65189: An error in memory management was causing excessive and useless PGMBK reclaim work to be stacked on SYSTEMMP.

VM65199: Errors in the dispatcher were causing failures in how SYSTEMMP work was being handled. One failure was that once the master CPU started processing SYSTEMMP work, it would not process anything else until the SYSTEMMP queue was empty. Another failure was that some CPUs eligible to handle SYSTEMMP work were not being awakened when SYSTEMMP work needed to be done.

VM65420: Frames that should have been stolen from MDC were not being stolen.

VM65692: A defect in HCPARD was causing a certain interception control bit to remain on when it should not. This was causing excessive simulation overhead in CP.

VM65709: MDC processing was being done even though the MDIMDCP flag, which is supposed to inhibit MDC, was set. This useless processing caused waste of CPU time.

VM65748: Certain zHPF (High Performance FICON) features were unavailable to guests even though the hardware supported them.

VM65762: Under some circumstances CP might fail to deliver PCI thin interrupts to guests.

VM65794: MDC might fail to work for RDEVs whose device number is greater than or equal to x'8000'.

VM65801: Even though its OSA uplink port had reached its capacity, VSWITCH continued to redrive the uplink port, causing excessive CPU consumption.

VM65820: Because of a PE in VM65189, PGMBK reclaim could exit without releasing an important lock. This stopped all future PGMBK reclaim, which in turn made PGMBKs into a memory leak, which in turn consumed memory unnecessarily.

VM65824: The administrator had set Minidisk Cache (MDC) off for a given real volume, but if a user then logged on and said user had a DEVNO minidisk defined on that real volume, the system would turn on MDC for that volume.

VM65837: If a DASD recovery I/O gets queued at an inopportune moment, I/O to the DASD device can stall.

VM65845: If a hyperswap occurs at an inopportune moment, I/O to the swapped device can stall.

VM65869: Remove excessive LOGOFF delay for QDIO-exploitive guests.

VM65886: CCW fast-trans wrongly marked some minidisk I/Os as ineligible to be performed on HyperPAV aliases.

Miscellaneous Repairs

IBM continually improves z/VM in response to customer-reported or IBM-reported defects or suggestions. In z/VM 6.4 the following small improvements or repairs are notable:

Excessive SCSI retries: When IPLing the LPAR from a SCSI LUN, if one or more of the paths to the LUN were offline, the IPL would take a long time. It was found there were excessive retries of Exchange Config Data. The retry limit was decreased.

Lock hierarchy violation: A violation of a lock acquisition hierarchy slowed down processing in VSWITCH. The violation was repaired.

Memory leak in QUERY PROCESSORS: The handler for the CP QUERY PROCESSORS command contained a memory leak. If the leak were hit enough times the system could slow down. The leak was repaired.

Unnecessary VSWITCH real device redrives: Under some circumstances HCPIQRRD unnecessarily redrove an uplink or bridge port real device. The unnecessary redrives were removed.

Unnecessary emergency replenishment scans: Memory management would do unnecessary emergency replenishment scans in some situations. The scans could hang the system. The reason for the unnecessary scans was found and repaired.

Incorrect SMT dispatcher adjustments: In z/VM 6.3 the dispatcher behaved slightly differently for SMT than for non-SMT. This difference was in play for SMT-1 when it should not have been. The result of the error was that SMT-1 performance was worse than non-SMT performance. z/VM 6.4 repairs the system so that the difference is in play for only SMT-2. This change makes SMT-1 perform the same as non-SMT.

Unnecessary calls to MDC steal: Memory management was found to be making calls to steal MDC frames even when it was known MDC was using no frames. The unnecessary calls were removed.

Back to Table of Contents.

Performance Considerations

As customers begin to deploy z/VM 6.4, they might wish to give consideration to the following items.

Regression Behavior

Our regression findings included results from both memory-rich and memory-constrained workloads. The memory-constrained workloads tended to get improvements in ETR and ITR, especially if they were large N-core configurations. This was because of the compounding of the effects of the paging improvements and the memory scalability improvements. If your workloads are memory-constrained and large N-core, IBM would appreciate hearing your experience; please send us a feedback.

Dynamic SMT

z/VM 6.4 lets the system administrator switch the system between SMT-1 mode and SMT-2 mode without an IPL. In this way the administrator can try SMT-2, measure its behavior, and then return to SMT-1 mode if SMT-2 mode is found unsuitable.

Customers must remain mindful that SMT-1 mode is not the same as non-SMT mode. IBM did measure the performance of z/VM 6.4 in SMT-1 mode compared to non-SMT mode, core counts being equal. Differences were slight if visible at all. However, the total computing capacity achievable in non-SMT mode still exceeds, and will forever exceed, what can be achieved in SMT-1 mode or SMT-2 mode. In non-SMT mode z/VM can make use of 64 cores in the LPAR whereas in SMT-1 mode or SMT-2 mode z/VM can make use of only 32 cores. Customers running z/VM in non-SMT mode in LPARs having more than 32 cores will have to give up some cores to get to SMT-1 or to SMT-2. Switching z/VM between non-SMT mode and one of the SMT modes still requires an IPL.

In using Dynamic SMT to audition SMT-2, please remember to collect reliable measurement data and to use your data to drive your decision about how to proceed. While you are in SMT-1 mode, collect both application-specific performance data, such as transaction rates, and MONWRITE data. Be sure to collect CPU MF data as part of your MONWRITE collection. Then switch to SMT-2 and collect the very same data. Then compare the two sets of results. Try to compare results collected during times when workload was about the same. For example, for your situation it might make sense to compare Tuesday at 2 PM this week to Tuesday at 2 PM last week.

IBM is interested to hear the experiences of customers who use Dynamic SMT to audition SMT-2. Please take time to send us a feedback. We will be grateful if you will let us have copies of your measurement data and of your comparison analysis.

HyperPAV and zHPF Paging

In our article we discuss the z/VM 6.4 changes that let CP use HyperPAV aliases for paging I/O. We also discuss the changes that let CP use High Performance FICON (zHPF) for paging I/O.

Achieving paging I/O concurrency is one reason customers have been asking for paging I/O to exploit HyperPAV aliases. Customers who have defined large numbers of paging volumes only for the purpose of achieving I/O concurrency can now use HyperPAV aliases to achieve the concurrency. Doing this will let them return the excess paging DASD to other uses. In doing this remember not to decrease the total amount of paging space below a safe level for your workload.

Performance Toolkit for VM has not yet been enhanced to depict what the monitor records report about CP's use of HyperPAV aliases. In the meantime there is a VM Download Library package called HPALIAS you can use.

Memory Scalability

The work done in the memory scalability improvement let IBM increase the central storage support limit from 1 TB to 2 TB. The increase will help customer who are feeling memory constraints. The heart of the work was to split the memory manager state data from one monolithic structure into a structure that could be used concurrently by multiple logical CPUs without undue spin lock contention.

Customers wanting to increase memory need to remember that very often a system can grow effectively only when all resource types are increased in proportion to one another. CPUs, memory, channels, networking, paging DASD space, and paging DASD exposures are some examples of resources that need to be considered together when planning system growth.

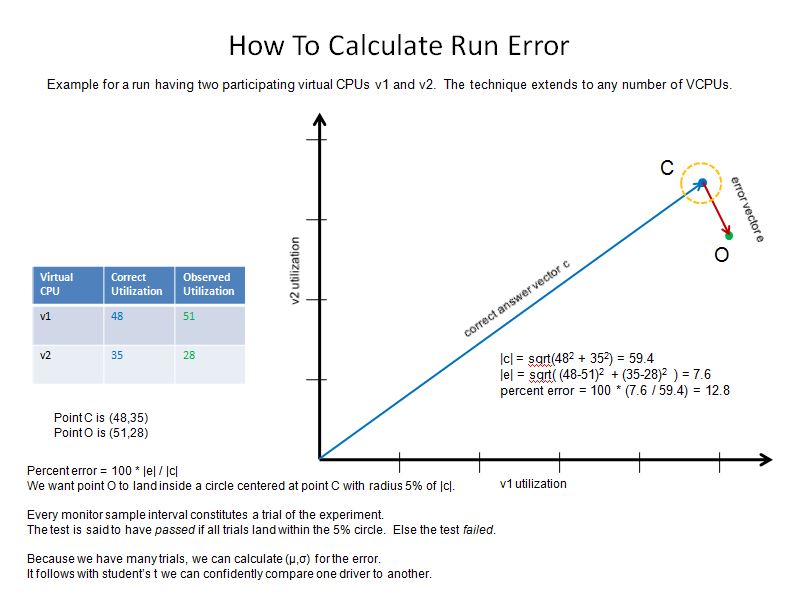

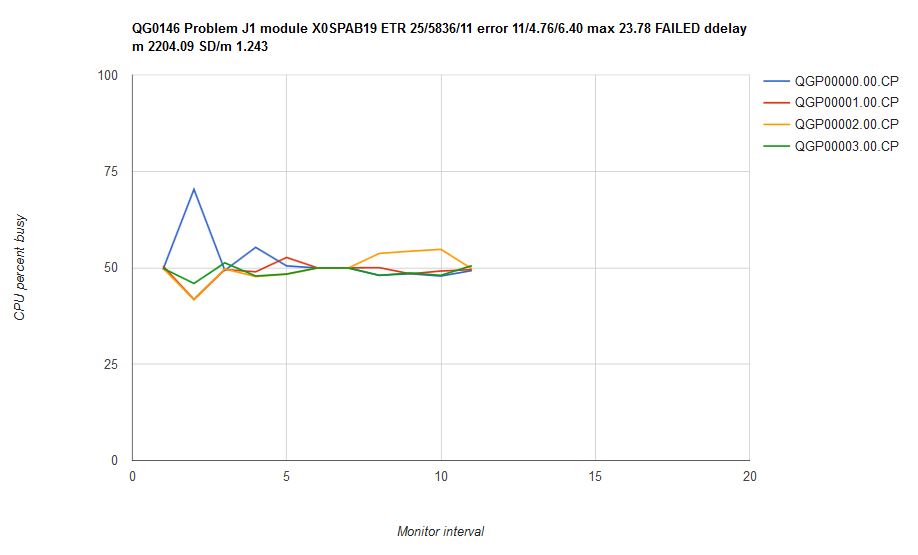

CP Scheduler Improvements

In our article we discuss the improvements made in the CP scheduler in z/VM 6.4. The purpose of the improvements was to address VM65288, in which a customer demonstrated CP did not honor share settings in certain situations. In the tests we tried, CP now honors share settings more accurately than it did in previous z/VM releases.

Some customers might have finely tuned their systems by adjusting share settings until the system behaved just the way they wanted. Customers who have done so might find they need to retune now that the scheduler has been repaired.

On systems that are not completely busy, in a very large, macro sense, share settings in theory do not matter. As a consequence, unused capacity in the LPAR is often taken as a sign that all users are getting all of the CPU time they want. During our study of z/VM 6.4, though, we found that when there are only a few users and their demands add up to a good fraction of the LPAR's capacity, there can still be unfulfilled demand even though the LPAR is not completely busy. The Perfkit reports FCX114 USTAT, FCX114 INTERIM USTAT, FCX164 USTATLOG, and FCX315 USTMPLOG can help you to find users who want more CPU power than they are being given. FCX135 USTLOG is less useful because it does not cite users individually.

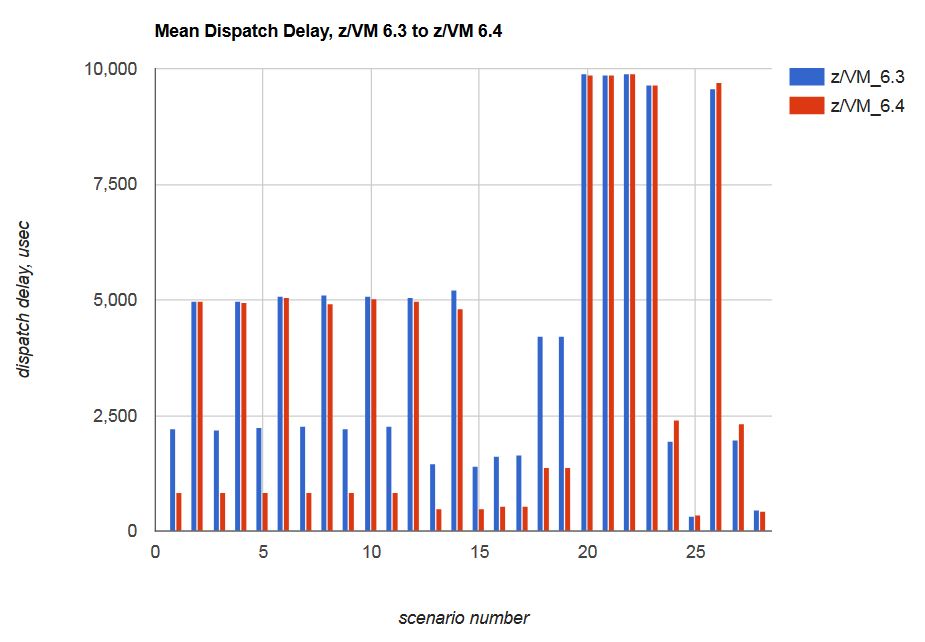

In the scenarios we tried, z/VM 6.4 often dispatched users with less delay than did the previous release. In other words, the amount of time between the instant a virtual CPU became ready for dispatch and the instant CP dispatched the virtual CPU tended to be less on z/VM 6.4 than it was on the previous release. This bodes well for workloads where response time is important or for situations where a physical phenomenon, such as a network device interrupt, must be serviced quickly to avoid a problem such as a timeout or a data overrun.

During our study we also evaluated the LIMITSOFT and RELATIVE LIMITHARD features of the CP scheduler. We found those two features not to work correctly in the scenarios we tried. Customers depending upon LIMITSOFT or RELATIVE LIMITHARD might wish to evaluate whether their use of those features is having the intended effect.

Back to Table of Contents.

Performance Management

These changes in z/VM 6.4 affect the performance management of z/VM:

- Monitor Changes

- Command or Output Changes

- Effects on Accounting Data

- Performance Toolkit for VM Changes

- Omegamon XE Changes

Monitor Changes

Several z/VM 6.4 enhancements affect CP monitor data. The changes are described below. The detailed monitor record layouts are found on the control blocks page.

z/VM 6.4 enhancements enable hypervisor intialization and termination, the Stand-Alone Program Loader (SAPL), DASD Dump Restore (DDR), Stand-Alone Dump, and other stand-alone utilities to run entirely in z/Architecture mode.

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 1 | System Data (Per Processor) |

| Domain 0 Record 15 | Logical Partition Configuration |

| Domain 4 Record 2 | User Logoff Data |

| Domain 4 Record 3 | User Activity Data |

| Domain 4 Record 9 | User Activity Data at Transaction End |

z/VM is enhanced to provide support for the Enhanced-DAT Facility, which allows a guest to exploit 1 MB pages in addition to the supported 4 KB pages.

The following monitor record is updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 5 Record 11 | Instruction Counts (per processor) |

Support for Simultaneous Multithreading (SMT) is enhanced with the addition of the SET MULTITHREAD command. Once z/VM 6.4 has been IPLed with multithreading enabled in the system configuration file, this command can be used to switch non-disruptively between one and two activated threads per IFL core.

The following new monitor record has been created for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 5 Record 21 | SMT Configuration Change Event |

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 2 | Processor Data (Per Processor) |

| Domain 1 Record 4 | System Configuration Data |

| Domain 5 Record 1 | Vary on processor |

| Domain 5 Record 2 | Vary off processor |

| Domain 5 Record 20 | MT CPUMF Counters |

IBM z13 and z13s are the last z Systems servers to support expanded storage (XSTORE). z/VM 6.4 does not support XSTORE for either host or guest usage.

The following monitor records are no longer generated:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 5 | Expanded Storage Data (per processor) |

| Domain 1 Record 17 | Expanded Storage Data |

| Domain 3 Record 9 | Expanded Storage Data |

| Domain 3 Record 10 | Expanded Storage Data (per user) |

The following monitor records have been updated for the removal of this support:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 14 | Minidisk Cache Data (Global) |

| Domain 1 Record 16 | Scheduler Settings |

| Domain 2 Record 4 | Add User to Dispatch List |

| Domain 2 Record 5 | Drop User from Dispatch List |

| Domain 2 Record 6 | Add User to Eligible List |

| Domain 2 Record 7 | SET SRM Changes |

| Domain 3 Record 3 | Shared Storage Management (per NSS or DCSS) |

| Domain 3 Record 14 | Address Space Information Record |

| Domain 3 Record 16 | NSS/DCSS/SSP Removed From Storage |

| Domain 4 Record 2 | User Logoff Data |

| Domain 4 Record 3 | User Activity Data |

| Domain 4 Record 9 | User Activity Data at Transaction End |

A z/VM storage administrator can now use FlashSystem storage as a z/VM-system-attached DASD, directly attached to the host without the need for an intermediate SAN Volume Controller (SVC). Previously, though FlashSystem could be used by a Linux virtual machine without an SVC, to use it for z/VM system volumes or EDEVs for virtual machines, an external or internal SVC was required. This enhancement removes that requirement.

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 1 Record 6 | Device Configuration Data |

| Domain 6 Record 1 | Vary on Device |

| Domain 6 Record 3 | Device Activity |

The IBM z Unified Resource Manager (zManager) is no longer supported by z/VM. The virtual switch types of IEDN and INMN have been removed from CP and TCP/IP commands and other externals.

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 1 Record 4 | System Configuration Data |

| Domain 1 Record 19 | QDIO Device Configuration |

| Domain 6 Record 23 | Virtual Switch Recovery |

| Domain 6 Record 25 | QDIO Device Activation Event |

| Domain 6 Record 27 | QDIO Device Deactivation Event |

| Domain 8 Record 1 | Virtual NIC Session Activity |

| Domain 8 Record 2 | Virtual NIC Guest Link State - Link Up |

| Domain 8 Record 3 | Virtual NIC Guest Link State - Link Down |

Improvements to memory management algorithms provide a basis for future enhancements that can increase the performance of workloads that experience available list spin lock contention.

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 4 | Real Storage Data (Per Processor) |

| Domain 0 Record 23 | Formal Spin Lock Data |

| Domain 3 Record 1 | Real Storage Management (Global) |

Virtual machines that do not consume all of their entitled CPU power, as determined by their share setting, generate surplus CPU power. This enhancement distributes the surplus to other virtual machines in proportion to their share setting. This is managed independently for each processor type (General Purpose, IFL, zIIP, and so on) across virtual machines.

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 2 Record 4 | Add User to Dispatch List |

| Domain 2 Record 5 | Drop User from Dispatch List |

| Domain 2 Record 6 | Add User to Eligible List |

| Domain 2 Record 13 | Add VMDBK to the Limit List |

| Domain 2 Record 14 | Drop VMDBK from the Limit List |

z/VM paging now exploits the ability for an IBM DS8000 device to execute multiple I/O requests to an ECKD volume in parallel from a single z/VM image. In HyperPAV mode, I/O resources can be assigned on demand as needed. If the base volume is busy, z/VM selects a free alias device from a pool, binds the alias device to the base device, and starts the I/O. When the I/O completes, the alias device is returned to the pool to be used for another I/O to the same logical subsystem (LSS).

The following monitor records have been updated for this support:

| Monitor Record | Record Name |

|---|---|

| Domain 1 Record 7 | Memory Configuration Data |

| Domain 1 Record 20 | HyperPAV Pool Definition |

| Domain 3 Record 1 | Real Storage Management (Global) |

| Domain 3 Record 4 | Auxiliary Storage Management |

| Domain 3 Record 8 | Block Paging Data |

| Domain 3 Record 11 | Auxiliary Shared Storage Management |

| Domain 6 Record 3 | Device Activity |

| Domain 6 Record 28 | HyperPAV Pool Activity |

| Domain 6 Record 32 | Indicates an HPF Feature Change |

To provide additional debug information for system and performance problems, z/VM 6.4 added or changed these monitor records:

| Monitor Record | Record Name |

|---|---|

| Domain 0 Record 15 | Logical Partition Configuration |

| Domain 0 Record 21 | System Execution Space (Global) |

| Domain 1 Record 31 | CP Service Configuration |

| Domain 5 Record 18 | Dispatch Vector High Frequency Data |

| Domain 6 Record 4 | Cache Activity Data |

| Domain 6 Record 10 | Automated Tape Library Statistics |

| Domain 6 Record 22 | Virtual Switch Failover |

| Domain 6 Record 30 | LSS PAV Transition |

| Domain 6 Record 34 | Virtual Switch Bridge Port Deactivation |

| Domain 6 Record 40 | Guest Disables a PCI Function |

| Domain 6 Record 42 | PCI Function Added to the System |

| Domain 6 Record 45 | Real PCI Function varied on |

Command or Output Changes

This section cites new or changed commands or command outputs that are relevant to the task of performance management. It is not an inventory of every new or changed command.

The section does not give syntax diagrams, sample command outputs, or the like. Current copies of z/VM publications can be found in the online library.

Related to VSWITCH

- SET VSWITCH adds COUNTERS CLEAR to clear debug counters.

Related to HyperPAV Paging

- SET PAGING and QUERY PAGING work with the new paging driver.

- SET CU and QUERY CU work with alias shares.

- SET AGELIST and QUERY AGELIST work with the new paging driver.

- SET IPLPARMS controls the new PAGING63 IPL parameter.

Related to Installed Service

- QUERY CPSERVICE displays the service table.

Related to EDEV and DASD Management

- QUERY EDEVICE returns inquiry pages and vital product data pages.

- QUERY DASD returns device configuration and characteristics information.

- IOEXPLOR returns device information.

Related to EDEV RAS

- SET MITIME no longer applies to EDEVs.

- SET CPTRACE adds trace codes for the SCSI container.

- SET EDEVICE adds a usage note on best practices for configuration.

Related to Support of SCSI Flash Systems

- SET EDEVICE adds the keyword FLASH.

- QUERY EDEVICE adds the output FLASH.

Related to System Shutdown

- QUERY SHUTDOWN adds command output.

- QUERY SHUTDOWNTIME is a new command.

Related to Dynamic SMT

- SET MULTITHREAD is a new command.

- QUERY MULTITHREAD has new output.

- INDICATE MULTITHREAD has documentation changes.

- VARY CORE has documentation changes.

- SET CPTRACE has documentation changes.

Related to RSCS TCPNJE Encryption

- RSCS DEFINE can now specify TLSLABEL=.

- RSCS START can now specify TLSLABEL=.

Related to Perfkit Using Memory > 2 GB

- FCONTROL HMA is new.

- FCONTROL STORUSED is new.

- FCONTROL LIMIT has new threshold variables.

Related to the Removal of XSTORE

All of the following commands were hit by the removal of XSTORE:

- ATTACH XSTORE

- DETACH XSTORE

- FOR

- INDICATE LOAD

- INDICATE NSS

- INDICATE PAGING

- INDICATE SPACES

- INDICATE USER

- LOGOFF

- LOGON

- MONITOR SAMPLE

- QUERY AGELIST

- QUERY MDCACHE

- QUERY RESERVED

- QUERY SRM

- QUERY VIRTUAL ALL

- QUERY VIRTUAL XSTORE

- QUERY XSTORE

- RETAIN XSTORE

- SET AGELIST

- SET CPTRACE

- SET MDCACHE

- SET RESERVED

- SET SRM

- VMRELOCATE

Effects on Accounting Data

z/VM 6.4 did not change accounting.

Performance Toolkit for VM Changes

Performance Toolkit for VM has been enhanced since z/VM for z13. Find below descriptions of the enhancements.

VM65656: Pipelines Input Stage

With VM65656 Performance Toolkit for VM now includes a CMS Pipelines stage called PERFKIT. This stage constitutes a Pipelines input interface through which Perfkit can read blocks of MONWRITE files.

VM65528: Multi-VSWITCH Link Aggregation

With VM65528 Performance Toolkit for VM includes support for Multi-VSWITCH Link Aggregation.

The following reports are new:

Performance Toolkit for VM: New Reports

| Name | Number | Title | Description |

| GLONACT | FCX317 | Global Networking Object Activity | Displays activity data related to global VSWITCHes. |

The following reports have been changed:

Performance Toolkit for VM: Changed Reports

| Name | Number | Title | What Changed |

| MONDATA | FCX155 | Monitor Data Statistics | Added information related to global VSWITCHes. |

| IOCHANGE | FCX185 | I/O Configuration Changes | Added information related to global VSWITCHes. |

| VSWITCH | FCX240 | Virtual Switch Activity | Added information related to global VSWITCHes. |

| GVSWITCH | FCX266 | General Virtual Switch Description | Added information related to global VSWITCHes. |

| EVSWITCH | FCX267 | Extended Virtual Switch Description | Added information related to global VSWITCHes. |

VM65699: New and Repaired Function

With VM65699 Performance Toolkit for VM includes several improved reports and numerous internal repairs.

The following reports have been changed:

Performance Toolkit for VM: Changed Reports

| Name | Number | Title | What Changed |

| FCHANNEL | FCX215 | FICON Channel Load | Changed to add channel read and write speeds. |

| MONDATA | FCX155 | Monitor Data Statistics | Displays all event records that arrived after the last sample interval. |

| USTAT | FCX114 | User Wait States | In headers, %Time spent in was changed to %Samples showing. |

| USTLOG | FCX135 | User Wait States Log | In headers, %Time spent in was changed to %Samples showing. |

| USTATLOG | FCX164 | User Wait States Log | In headers, %Time spent in was changed to %Samples showing. |

| USTMPLOG | FCX315 | Multiprocessor User Wait States Log | In headers, %Time spent in was changed to %Samples showing. |

| LPAR | FCX126 | LPAR Load | In headers, LPU was changed to Core. |

| LPARLOG | FCX202 | LPAR Load Log | In headers, LPU was changed to Core. |

| PROCCONF | FCX234 | Processor/Core Configuration Log | In headers, LPU was changed to Core. |

| LPARCONF | FCX235 | LPAR Configuration Log | In headers, LPU was changed to Core. |

| TOPOLOG | FCX287 | System Topology Machine Organization | In headers, LPU was changed to Core. |

| PUORGLOG | FCX298 | Logical Core Organization Log | In headers, LPU was changed to Core. |

| PHYSLOG | FCX302 | Real Core Utilization Log | In headers, LPU was changed to Core. |

| LSHARACT | FCX306 | Logical Partition Share | In headers, LPU was changed to Core. |

| LPARLOGM | FCX307 | Logical Partition Logs Menu | In headers, LPU was changed to Core. |

| SYSCONF | FCX180 | System Configuration | Displays CPC type-model and Model-Capacity Identifier when available. |

| PUCFGLOG | FCX299 | Logical PU (Core and Threads) Configuration Log | Uses "Core" in description. |

| SYSLOG | FCX179 | System Facilities Log | User exits columns now show %Busy, and calculation of existing usec is changed. |

VM65698: IBM z13 GA2 and z13s

With VM65698 Performance Toolkit for VM includes support for the IBM z13 2964 GA2 and the IBM z13s 2965.

The following reports are new:

Performance Toolkit for VM: New Reports

| Name | Number | Title | Description |

| PCIACT | FCX322 | PCI Function Activity, Format 3 | Displays activity for format-3 PCI functions. |

| PCILOG | FCX323 | PCI Function Activity Log, Format 3 | Displays a log of activity for a selected format-3 PCI function. |

The following reports have been changed:

Performance Toolkit for VM: Changed Reports

| Name | Number | Title | What Changed |

| PCIMENU | FCX310 | PCI Function Menu | Added entry for format-3 PCI functions. |

| PCICONF | FCX311 | PCI Function Configuration | Added information for format-3 PCI functions. |

VM65697: CPU Pooling, LPAR Group Capping, and Prorated Core Time

With VM65697 Performance Toolkit for VM includes support for CPU Pooling, LPAR Group Capping, and Prorated Core Time.

The following reports are new:

Performance Toolkit for VM: New Reports

| Name | Number | Title | Description |

| CPLMENU | FCX324 | CPU Pool Menu | Displays a menu of choices related to CPU Pooling. |

| CPLCONF | FCX308 | CPU Pool Configuration | Displays configuration information related to CPU Pooling. |

| CPLACT | FCX309 | CPU Pool Activity Data | Displays activity data related to CPU Pooling. |

The following reports have been changed:

Performance Toolkit for VM: Changed Reports

| Name | Number | Title | What Changed |

| MENU | FCX124 | Performance Data Selection Menu | Added selection 2A. |

| UCONF | FCX226 | Performance Data Selection Menu | Added column for CPU pool name. |

| LPAR | FCX126 | LPAR Load Screen |

|

| LPARLOG | FCX202 | LPAR Load Screen |

|

| LSHARACT | FCX306 | Logical Partition Share | Added GrpCapNm and GrpCap columns. |

z/VM 6.4: New Function

With z/VM 6.4 Performance Toolkit for VM includes support for a new report, LOCKACT.

The following reports are new:

Performance Toolkit for VM: New Reports

| Name | Number | Title | Description |

| CPUMENU | FCX325 | CPU Data Menu | The CPU Data Menu Screen shows a selection menu of CPU options, namely, CPU, DSVBKACT, and LOCKACT. |

| LOCKACT | FCX326 | Spin Lock Collision Activity | The Spin Lock Collision Activity Screen shows mean lock behavior. To see the interval-to-interval lock statistics, use INTERIM LOCKACT. These are available when z/VM 6.4 Performance Toolkit reduces data from any supported z/VM system. |

The following reports have been changed:

Performance Toolkit for VM: Changed Reports

| Name | Number | Title | What Changed |

| MENU | FCX124 | Performance Screen Selection Menu | Changed number 1 to go to menu CPUMENU instead of to report CPU. |

| LOCKLOG | FCX265 | Spin Lock Log | This data screen can not be produced when z/VM 6.4 Performance Toolkit reduces data from a z/VM 6.4 or later system. |

Take note: The z/VM 6.4 version of Performance Toolkit must run on z/CMS.

With z/VM 6.4 Performance Toolkit for VM now provides support for using memory above the 2 GB line (called High Memory Area or HMA). To have a HMA and use memory above 2 GB, the PERFSVM directory entry needs to include memory above 2 GB. It is recommended that PERFSVM includes the entire 2 GB to 4 GB range of memory. With this support, z/VM 6.4 Performance Toolkit has two changed commands:

- FCONTROL HMA was added to query the current HMA usage.

- FCONTROL LIMIT was changed to add three new threshold limits; namely, HMAPAGE, HMAPRINT, and STORB2G.

Performance Toolkit for VM has not yet been enhanced to depict what the monitor records report about CP's use of HyperPAV aliases. In the meantime there is a VM Download Library package called HPALIAS you can use.

Omegamon XE Changes

OMEGAMON XE has added a new workspace so as to expand and enrich its ability to comment on z/VM system performance. OMEGAMON XE will now display data on any CPU pools that you have defined for your z/VM system. It will allow you to see the usage of your CPU pools and determine which pools are near capacity and which ones are under-utilized.

To support these OMEGAMON XE endeavors, Performance Toolkit for VM now puts additional CP Monitor data into the PERFOUT DCSS.

Back to Table of Contents.

New Functions

This section contains discussions of the following performance evaluations:

- Memory Management Serialization Contention Relief

- z/VM Paging Improvements

- CP Scheduler Improvements

- RSCS TCPNJE Encryption

- TLS/SSL Server Changes

Back to Table of Contents.

Memory Management Serialization Contention Relief and 2 TB Central Storage Support

Abstract

Memory Management Serialization Contention Relief (hence, the enhancement) provides performance improvements to the memory management subsystem. It enables workload scaling up to the new z/VM 6.4 maximum supported central storage size of 2 TB.

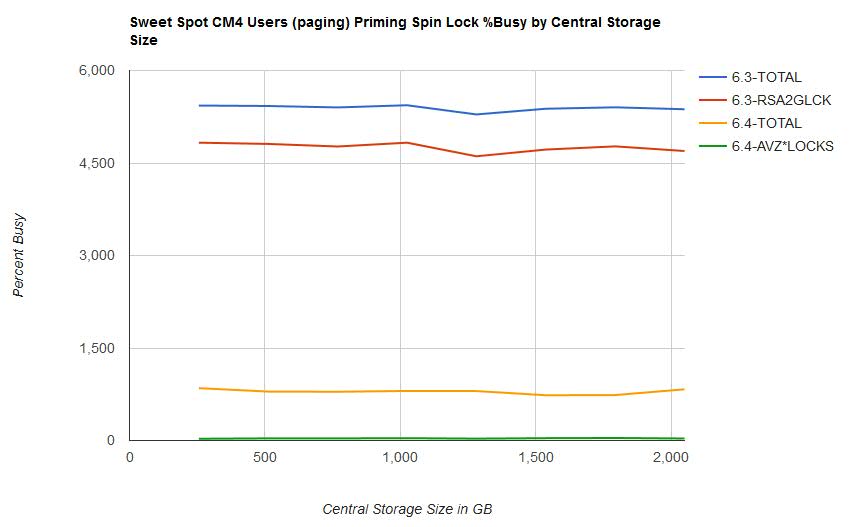

Spin lock contention in the memory management subsystem has been a barrier to supporting central storage sizes above 1 TB. With z/VM 6.4 extensive changes were made to lock structures resulting in reduced spin lock contention. With these lock structure changes, along with other memory management subsystem changes, IBM measurements demonstrate the ability to scale workloads up to the new z/VM 6.4 maximum supported central storage size of 2 TB.

Background

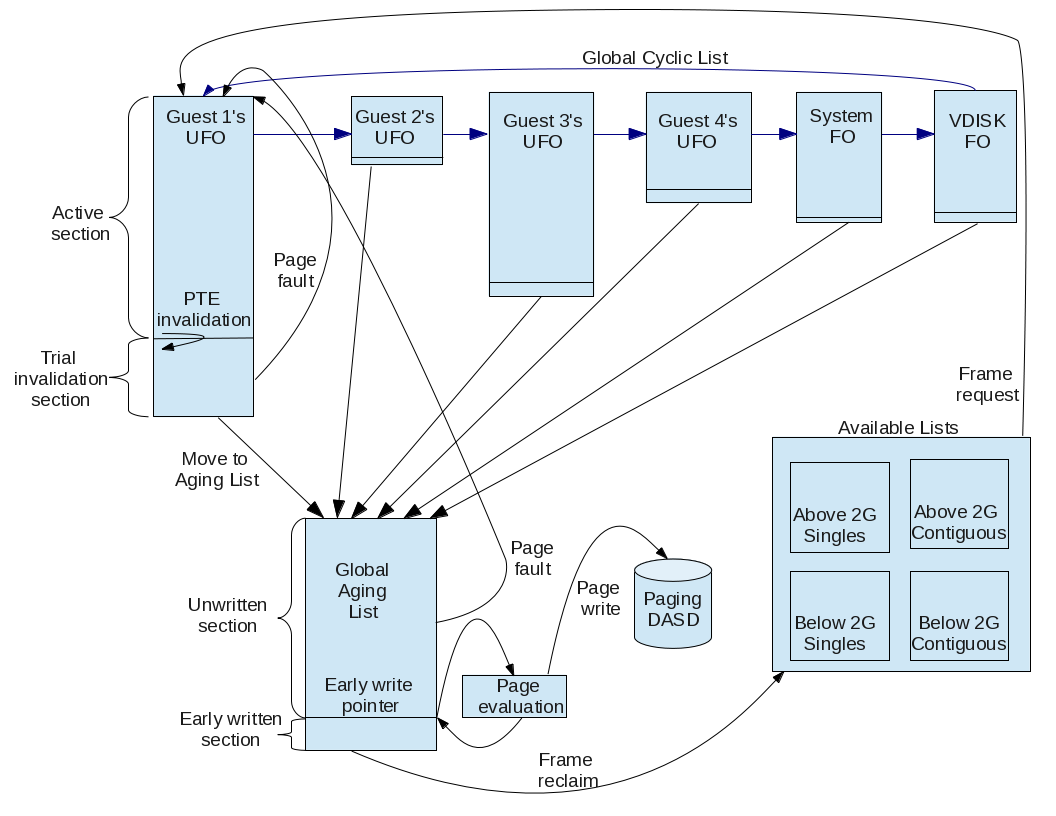

As systems increase in memory size and number of processors, workloads can grow, putting more demand on the frame manager. The frame manager is the collection of modules in the z/VM memory management subsystem that maintains lists of available frames, manages requests for frames, and coalesces frames as they are returned.

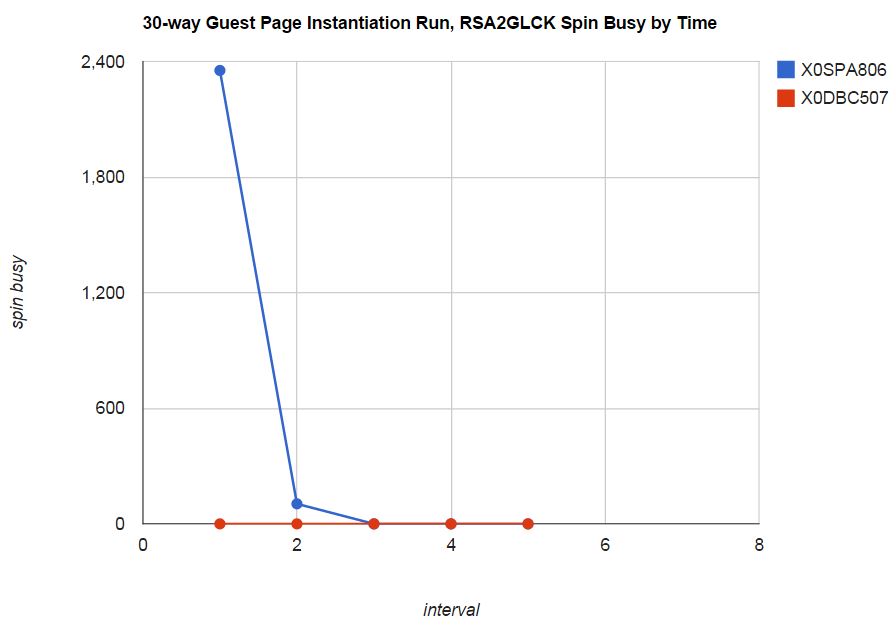

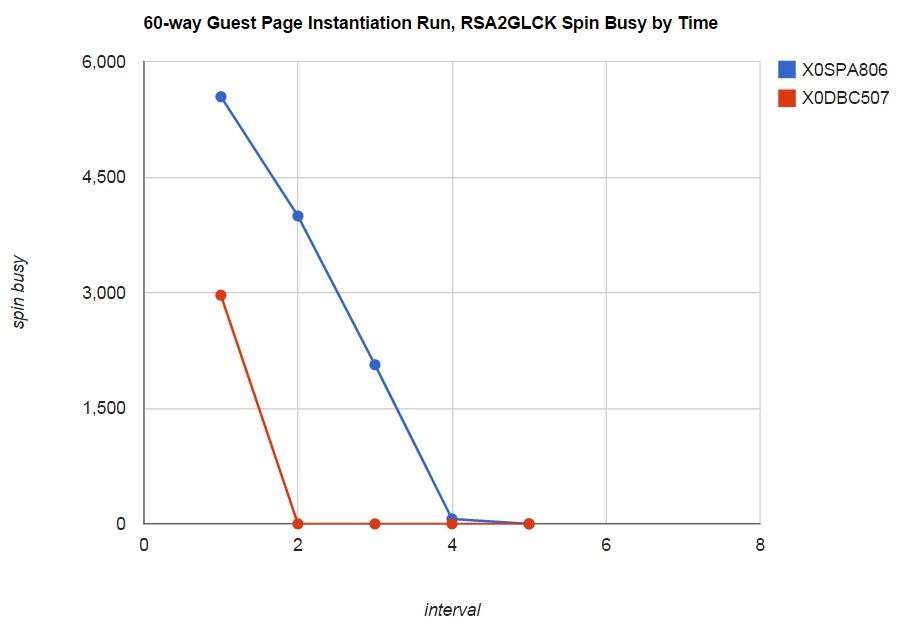

Prior to z/VM 6.4 there were two locks providing serialized access to the lists of available frames: one lock for below-2-GB frames and one lock for above-2-GB frames. Contention for these locks, particularly on the lock for frames above 2 GB (RSA2GLCK), was noticeable with various workloads. This contention was limiting the growth of real memory z/VM could support.

The primary change made in the frame manager for z/VM 6.4 was to organize central storage into available list zones. An available list zone represents a range of central storage, much like the below-2-GB available lists and the above-2-GB available lists represented a range of central storage in prior releases. Management of the available frames within a zone is serialized by a lock unique to that zone. Zone locks are listed as AVZAnnnn and AVZBnnnn in monitor record D0 R23 MRSYTLCK, where nnnn is the zone number. The number and size of zones is determined internally by z/VM and can depend on the maximum potential amount of central storage, the number of attached processors, and whether the zone represents central storage above 2 GB or below 2 GB.

Other improvements to the frame manager include:

- The ability to queue frame returns so a task returning a frame is not delayed when the zone lock is not available.

- Improved defer logic which will check the global Invalid But Resident (IBR) aging list for a satisfactory frame before deferring the requestor.

- Enhanced available list replenishment logic which can run simultaneously to the global IBR aging list replenishment.

- More appropriate thresholds for available frame requests.

Another area where improvements have been made is in Page Table Resource Manager (PTRM) page allocations. In heavy paging environments significant lock contention was observed with a single PTRM address space allocation lock. The contention is now avoided by using CPU address to spread PTRM allocations across the 128 PTRM address spaces.

All of these items combined have enabled a new z/VM 6.4 maximum supported central storage size of 2 TB.

Method

A scan of previous IBM measurements revealed the Sweet Spot Priming workload (which uses the Virtual Storage Exerciser) experienced the highest level of spin lock contention on the available lists locks. Based on that finding the Sweet Spot Priming workload was chosen to measure the effectiveness of the enhancement.

In addition, both the Sweet Spot Priming workload and the Apache Scaling workload were used to measure the scalability of workloads up to the new z/VM 6.4 maximum supported central storage size of 2 TB.

Sweet Spot Priming Workload Using VIRSTOEX

The Sweet Spot Priming workload was designed to place high demand on the memory management subsystem. It consists of four sets of users which "prime" their virtual memory by changing data in a predetermined number of pages. This may be viewed as analogous to a customer application reading a database into memory. The workload is designed to overcommit memory by approximately 28%. The four sets of users are logged on sequentially. Each group completes its priming before the next group is logged on. The first three sets of users do not cause paging during priming. For the first three sets of users, only elapsed time is of interest. Each user touches a fixed number of pages based on virtual machine size. The fourth set of users does cause paging during priming. For the fourth set of users, ETR is defined as thousands of pages written to paging DASD per second.

Sweet Spot Priming workload measurements were used to evaluate the reduced lock contention and illustrate the improved performance of the workload as a result. The number of CPUs was held constant while central storage size and the virtual memory size of the users were increased to maintain a constant memory overcommitment level.

A modified z/VM 6.3 Control Program was used to obtain measurements with central storage sizes larger than the z/VM 6.3 maximum supported central storage size of 1 TB.

Table 1 shows the Sweet Spot Priming workload configurations used.

| Table 1. Sweet Spot Priming Workload Configurations. | ||||||||

| Central Storage in GB | 256 | 512 | 768 | 1024 | 1280 | 1536 | 1792 | 2048 |

| IFL Cores | 64 | 64 | 64 | 64 | 64 | 64 | 64 | 64 |

| CM1x Users | 88 | 88 | 88 | 88 | 88 | 88 | 88 | 88 |

| CM2x Users | 88 | 88 | 88 | 88 | 88 | 88 | 88 | 88 |

| CM3x Users | 88 | 88 | 88 | 88 | 88 | 88 | 88 | 88 |

| CM4x Users | 88 | 88 | 88 | 88 | 88 | 88 | 88 | 88 |

| CMx Virtual Memory Size in GB | 6.5 | 11 | 15.5 | 20 | 24.5 | 29 | 33.5 | 38 |

| Notes: The number of CPUs was held constant while central storage size and virtual memory size of the users were increased to maintain a constant memory overcommitment level. CEC model 2964-NC9. Dedicated LPAR with 64 IFL cores in non-SMT mode. The VIRSTOEX program only touches virtual memory above 2 GB. | ||||||||

Apache Scaling Workload Using Linux

The Apache Scaling workload was used to illustrate a Linux-based webserving workload that scales up to a central storage size of 2 TB. The Apache Scaling workload has a small amount of memory overcommitment. The memory overcommitment was kept small to avoid a large volume of paging that would cause the DASD paging subsystem to become the limiting factor for the workload as it were scaled up with cores, memory, and AWM clients and servers.

To allow comparisons with central storage sizes above 1 TB a modified z/VM 6.3 Control Program was used to obtain measurements with central storage sizes larger than the z/VM 6.3 maximum supported central storage size of 1 TB.

Table 2 shows the Apache Scaling workload configurations used.

| Table 2. Apache Scaling Workload Configurations | ||||

| Central storage in GB | 512 | 1024 | 1536 | 2048 |

| IFL cores | 8 | 16 | 24 | 32 |

| AWM clients (1 GB) | 4 | 8 | 12 | 16 |

| AWM servers (30 GB) | 24 | 48 | 72 | 96 |

| Notes: AWM clients and servers are arranged in groups to keep the total number of client/server sessions manageable. Each AWM client has a session with 6 servers. CEC model 2964-NC9. Dedicated LPAR with IFL cores in non-SMT mode. | ||||

Results and Discussion

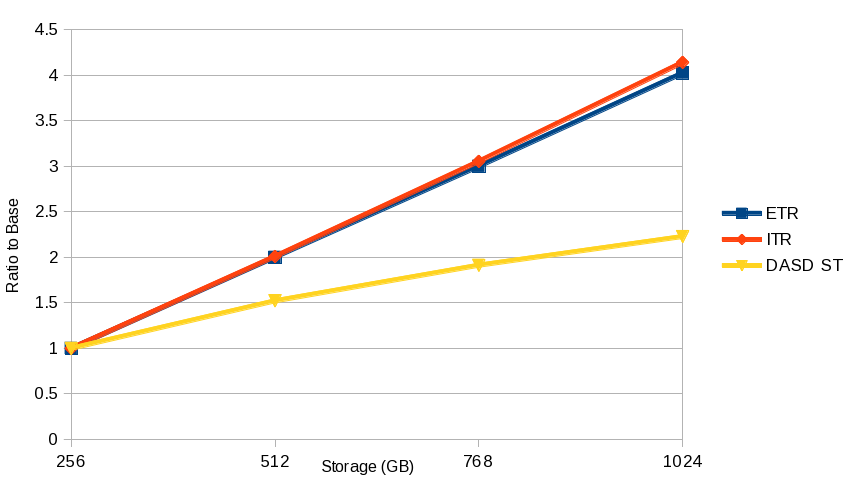

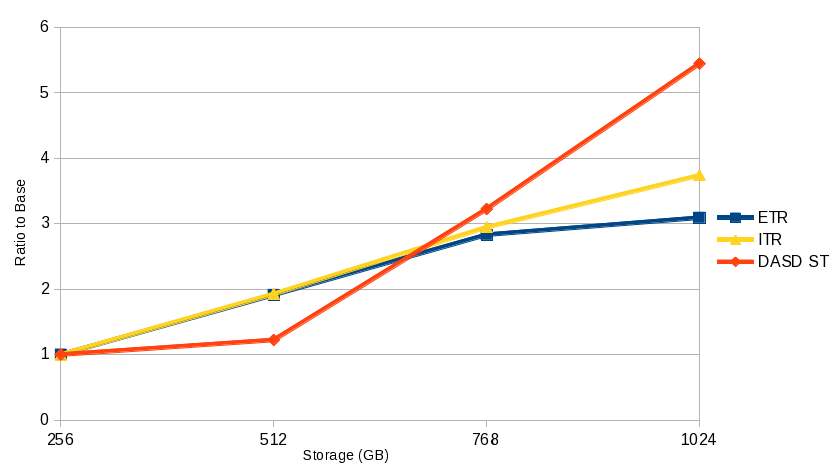

Sweet Spot Priming Workload Measurements Results

Table 3 contains selected results of z/VM 6.3 measurements. Table 4 contains selected results of z/VM 6.4 measurements. Table 5 contains comparisons of selected results of z/VM 6.4 measurements to z/VM 6.3 measurements.

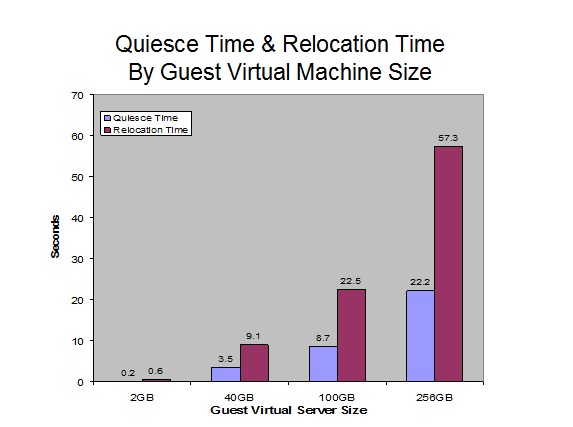

- Overall spin lock contention decreased from z/VM 6.3 to z/VM 6.4. This was the expected result of the enhancement. See Figure 1.

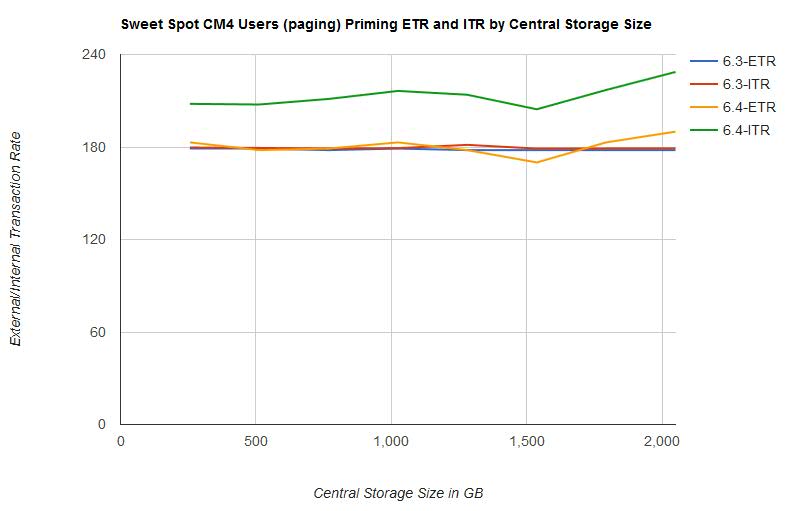

- ETR was relatively the same in both z/VM 6.3 and z/VM 6.4. This was the expected result. The workload is limited by test system DASD paging infrastructure. See Figure 4.

- ITR increased from z/VM 6.3 to z/VM 6.4. See Figure 4.

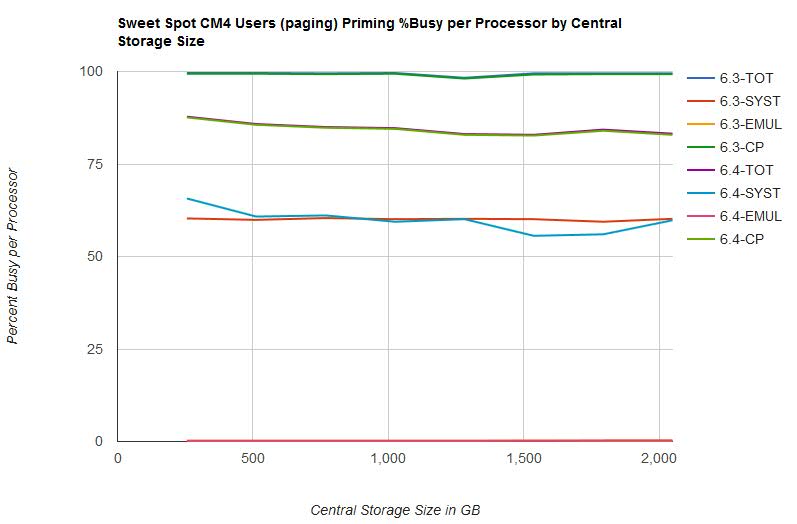

- Processor utilization decreased from z/VM 6.3 to z/VM 6.4. See Figure 5.

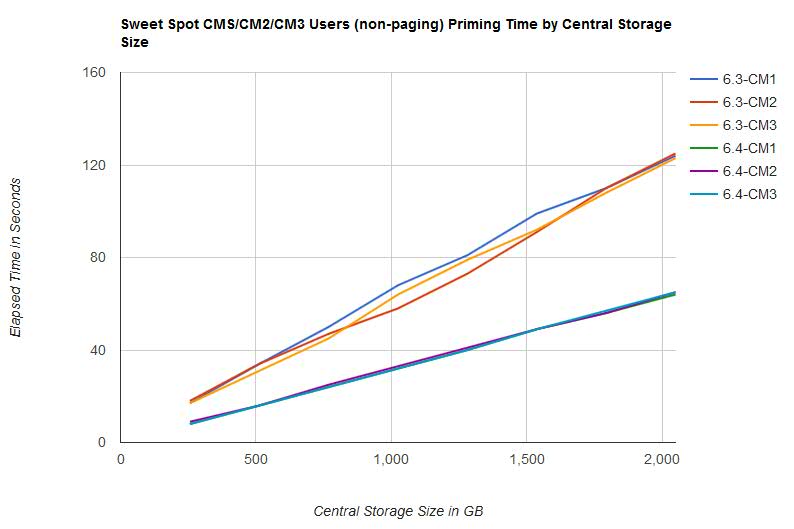

- Elapsed time for CM1, CM2, and CM3 users (non-paging) priming phases decreased from z/VM 6.3 to z/VM 6.4. Elapsed time scaled linearly as central storage size increased. See Figure 2.

- Elapsed time for CM4 users (paging) priming phase remained relatively the same from z/VM 6.3 to z/VM 6.4. The workload is limited by test system DASD paging infrastructure.

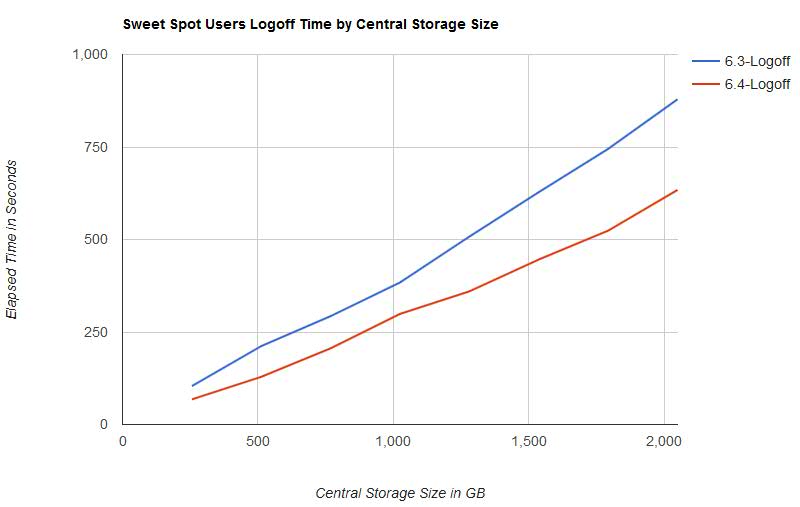

- Elapsed time for logoff of all users decreased from z/VM 6.3 to z/VM 6.4. Elapsed time scaled linearly as central storage size increased. See Figure 3.

Figure 1 illustrates the spin lock percent busy for the CM4 users (paging) priming phase by central storage size.

| Figure 1. Sweet Spot CM4 Users (paging) Priming Spin Lock %Busy by Central Storage Size. |

|

Figure 2 illustrates the elapsed time for the CM1, CM2, and CM3 users (non-paging) priming phases by central storage size.

| Figure 2. Sweet Spot CM1/CM2/CM3 Users (non-paging) Priming Time by Central Storage Size. |

|

Figure 3 illustrates the elapsed time for the logoff phase by central storage size.

| Figure 3. Sweet Spot Users Logoff Time by Central Storage Size. |

|

Figure 4 illustrates the external and internal transaction rate for the CM4 users (paging) priming phase by central storage size.

| Figure 4. Sweet Spot CM4 Users (paging) Priming ETR and ITR by Central Storage Size. |

|

Figure 5 illustrates the percent busy per processor for the CM4 users (paging) phase by central storage size.

| Figure 5. Sweet Spot CM4 Users (paging) Priming %Busy per Processor by Central Storage Size. |

|

Table 3 shows the Sweet Spot Priming workload results on z/VM 6.3.

| Table 3. Sweet Spot Priming Workload z/VM 6.3 results. | ||||||||

| Central Storage in GB | 256 | 512 | 768 | 1024 | 1280 | 1536 | 1792 | 2048 |

| Runid | SSXS6287 | SSXS6288 | SSXS6289 | SSXS628A | SSXS628B | SSXS628C | SSXS628D | SSXS628E |

| ETR | 179.02 | 178.65 | 178.25 | 178.59 | 178.35 | 178.07 | 178.24 | 178.23 |

| ITR | 179.7 | 179.4 | 179.1 | 179.3 | 181.4 | 179.0 | 179.1 | 179.1 |

| Total Busy per Processor | 99.6 | 99.6 | 99.5 | 99.6 | 98.3 | 99.5 | 99.5 | 99.5 |

| System Busy per Processor | 60.3 | 59.9 | 60.4 | 60.1 | 60.2 | 60.1 | 59.4 | 60.2 |

| Emulation Busy per Processor | 0.2 | 0.2 | 0.2 | 0.2 | 0.2 | 0.3 | 0.2 | 0.2 |

| CP Busy per Processor | 99.4 | 99.4 | 99.3 | 99.4 | 98.1 | 99.2 | 99.3 | 99.3 |

| T/V Ratio | 404.3 | 404.3 | 409.2 | 408.9 | 406.3 | 395.1 | 412.5 | 412.6 |

| Total Spin Lock Busy | 5432.05 | 5424.83 | 5401.44 | 5436.85 | 5287.71 | 5380.59 | 5403.33 | 5372.19 |

| RSA2GLCK Spin Lock Busy | 4829.77 | 4809.88 | 4767.82 | 4829.73 | 4609.50 | 4718.28 | 4770.21 | 4694.73 |

| CM1 Users Priming Time | 17 | 34 | 50 | 68 | 81 | 99 | 110 | 124 |

| CM2 Users Priming Time | 18 | 34 | 47 | 58 | 73 | 91 | 110 | 125 |

| CM3 Users Priming Time | 17 | 31 | 45 | 64 | 79 | 92 | 108 | 123 |

| CM4 Users Priming Time | 116 | 234 | 338 | 452 | 565 | 678 | 789 | 903 |

| Logoff Time | 104 | 212 | 293 | 384 | 508 | 628 | 745 | 879 |

| Notes: CEC model 2964-NC9. Dedicated LPAR with 64 IFL cores in non-SMT mode. | ||||||||

Table 4 shows the Sweet Spot Priming workload results on z/VM 6.4.

| Table 4. Sweet Spot Priming Workload z/VM 6.4 results. | ||||||||

| Central Storage in GB | 256 | 512 | 768 | 1024 | 1280 | 1536 | 1792 | 2048 |

| Runid | SSYS8154 | SSYS8155 | SSYS8156 | SSYS8151 | SSYS8157 | SSYS8158 | SSYS8159 | SSYS8150 |

| ETR | 182.64 | 178.16 | 179.50 | 183.30 | 177.78 | 169.57 | 182.95 | 190.30 |

| ITR | 208.0 | 207.6 | 211.2 | 216.4 | 213.9 | 204.5 | 217.0 | 228.7 |

| Total Busy per Processor | 87.8 | 85.8 | 85.0 | 84.7 | 83.1 | 82.9 | 84.3 | 83.2 |

| System Busy per Processor | 65.7 | 60.8 | 61.1 | 59.4 | 60.1 | 55.6 | 56.0 | 59.8 |

| Emulation Busy per Processor | 0.2 | 0.2 | 0.2 | 0.2 | 0.2 | 0.2 | 0.3 | 0.3 |

| CP Busy per Processor | 87.6 | 85.6 | 84.8 | 84.5 | 82.9 | 82.7 | 84.0 | 82.9 |

| T/V Ratio | 356.8 | 356.8 | 351.3 | 341.1 | 350.6 | 360.0 | 336.2 | 329.3 |

| Total Spin Lock Busy | 848.49 | 793.67 | 791.25 | 804.14 | 802.47 | 733.87 | 737.75 | 830.40 |

| AVZLOCKS Spin Lock Busy | 28.90 | 34.52 | 34.79 | 38.38 | 31.04 | 38.20 | 41.31 | 33.18 |

| CM1 Users Priming Time | 8 | 16 | 24 | 32 | 40 | 49 | 56 | 64 |

| CM2 Users Priming Time | 9 | 16 | 25 | 33 | 41 | 49 | 56 | 65 |

| CM3 Users Priming Time | 8 | 16 | 24 | 32 | 40 | 49 | 57 | 65 |

| CM4 Users Priming Time | 116 | 229 | 338 | 444 | 563 | 706 | 767 | 845 |

| Logoff Time | 68 | 129 | 206 | 299 | 360 | 446 | 524 | 634 |

| Notes: CEC model 2964-NC9. Dedicated LPAR with 64 IFL cores in non-SMT mode. | ||||||||

Table 5 shows the comparison of z/VM 6.4 results to z/VM 6.3 results.

| Table 5. Sweet Spot Priming Workload z/VM 6.4 results compared to z/VM 6.3 results. | ||||||||

| Central Storage in GB | 256 | 512 | 768 | 1024 | 1280 | 1536 | 1792 | 2048 |

| ETR | +2.0% | +0.3% | +0.7% | +2.6% | -0.3% | -4.8% | +2.6% | +6.8% |

| ITR | +15.7% | +15.7% | +17.9% | +20.7% | +17.9% | +14.2% | +21.2% | +27.7% |

| Total Busy per Processor | -11.8% | -13.9% | -14.6% | -15.0% | -15.5% | -16.7% | -15.3% | -16.4% |

| System Busy per Processor | +9.0% | +1.5% | +1.2% | -1.2% | -0.2% | -7.5% | -5.7% | -0.7% |

| Emulation Busy per Processor | 0.0% | 0.0% | 0.0% | 0.0% | 0.0% | -33.3% | +50.0% | +50.0% |

| CP Busy per Processor | -11.9% | -13.9% | -14.6% | -15.0% | -15.5% | -16.6% | -15.4% | -16.5% |

| T/V Ratio | -11.7% | -11.7% | -14.1% | -16.6% | -13.7% | -8.9% | -18.5% | -20.2% |

| Total Spin Lock Busy | -84.4% | -85.4% | -85.4% | -85.2% | -84.8% | -86.4% | -86.3% | -84.5% |

| Available List Spin Locks Busy | -99.4% | -99.3% | -99.3% | -99.2% | -99.3% | -99.2% | -99.1% | -99.3% |

| CM1 Users Priming Time | -52.9% | -52.9% | -52.0% | -52.9% | -50.6% | -50.5% | -49.1% | -48.4% |

| CM2 Users Priming Time | -50.0% | -52.9% | -46.8% | -43.1% | -43.8% | -46.2% | -49.1% | -48.0% |

| CM3 Users Priming Time | -52.9% | -48.4% | -46.7% | -50.0% | -49.4% | -46.7% | -47.2% | -47.2% |

| CM4 Users Priming Time | 0.0% | -2.1% | 0.0% | -1.8% | -0.4% | +4.1% | -2.8% | -6.4% |

| Logoff Time | -34.6% | -39.2% | -29.7% | -22.1% | -29.1% | -29.0% | -29.7% | -27.9% |

| Notes: All deltas are percentage difference of z/VM 6.4 measurements compared to z/VM 6.3 measurements. CEC model 2964-NC9. Dedicated LPAR with 64 IFL cores in non-SMT mode. | ||||||||

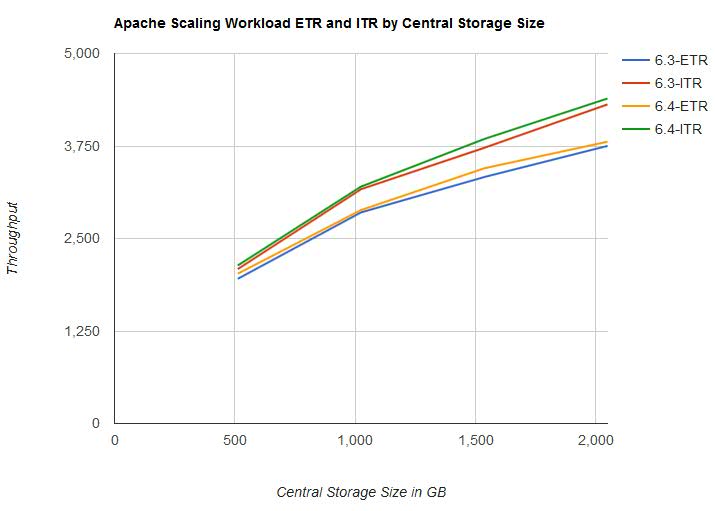

Apache Scaling Workload Measurements Results

Table 6 contains selected results of z/VM 6.3 measurements. Table 7 contains selected results of z/VM 6.4 measurements. Table 8 contains comparisons of the selected results of z/VM 6.4 measurements to z/VM 6.3 measurements.

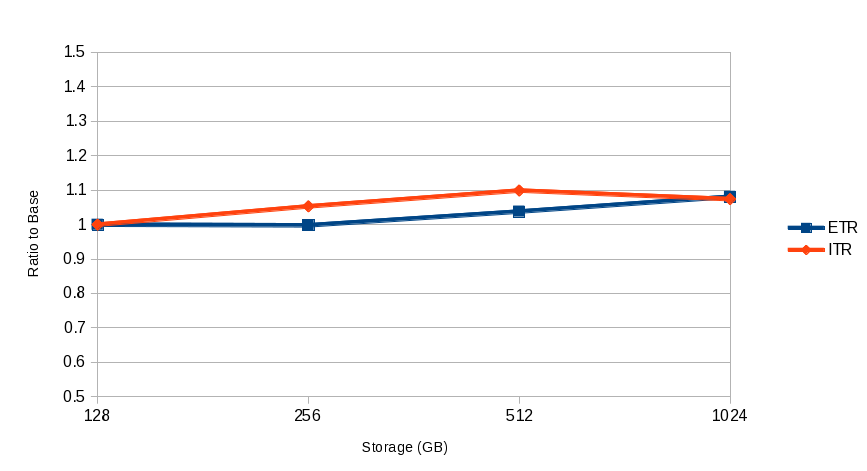

- Both ETR and ITR improved from z/VM 6.3 to z/VM 6.4 with the largest difference occurring between 1 TB and 2 TB of central storage. See Figure 6.

- Processor utilization was mostly unchanged from z/VM 6.3 to z/VM 6.4. There was a small variation at the lower increments of central storage but almost none from 1 TB to 2 TB. See Table 6, Table 7, and Table 8.

Figure 6 illustrates the external and internal transaction rate for the Apache Scaling workload by central storage size.

| Figure 6. Apache Scaling workload - ETR and ITR by Central Storage Size. |

|

Table 6 shows the Apache Scaling workload results on z/VM 6.3.

| Table 6. Apache Scaling workload z/VM 6.3 results. | ||||

| IFL Cores | 8 | 16 | 24 | 32 |

| Central Storage in GB | 512 | 1024 | 1536 | 2048 |

| Run ID | A51X628Z | A1TX628Z | A15X628Z | A2TX628Z |

| ETR | 1955.76 | 2853.14 | 3330.71 | 3751.85 |

| ITR | 2087.30 | 3166.60 | 3725.60 | 4312.50 |

| Total Busy per Processor | 93.7 | 90.1 | 89.4 | 87.0 |

| Emulation Busy per Processor | 68.6 | 66.3 | 61.9 | 59.9 |

| CP Busy per Processor | 25.1 | 23.8 | 27.5 | 27.1 |

| T/V Ratio | 1.37 | 1.36 | 1.44 | 1.45 |

| Notes: CEC model 2964-NC9. Dedicated LPAR with IFL cores in non-SMT mode. | ||||

Table 7 shows the Apache Scaling workload results on z/VM 6.4.

| Table 7. Apache Scaling Workload z/VM 6.4 results. | ||||

| IFL Cores | 8 | 16 | 24 | 32 |

| Central Storage in GB | 512 | 1024 | 1536 | 2048 |

| Run ID | A51Y715Z | A1TY715Z | A15Y715Z | A2TY715Z |

| ETR | 2024.75 | 2884.61 | 3449.17 | 3808.00 |

| ITR | 2135.80 | 3201.60 | 3845.20 | 4392.20 |

| Total Busy per Processor | 94.8 | 90.1 | 89.7 | 86.7 |

| Emulation Busy per Processor | 68.6 | 66.1 | 62.3 | 59.6 |

| CP Busy per Processor | 26.2 | 24.0 | 27.4 | 27.1 |

| T/V Ratio | 1.38 | 1.36 | 1.44 | 1.45 |

| Notes: CEC model 2964-NC9. Dedicated LPAR with IFL cores in non-SMT mode. | ||||

Table 8 shows the Apache Scaling workload results of z/VM 6.4 compared to z/VM 6.3.

| Table 8. Apache Scaling Workload z/VM 6.4 results compared to z/VM 6.3 results. | ||||

| IFL Cores | 8 | 16 | 24 | 32 |

| Central Storage in GB | 512 | 1024 | 1536 | 2048 |

| ETR | +3.5% | +1.1% | +3.6% | +1.5% |

| ITR | +2.3% | +1.1% | +3.2% | +2.0% |

| Total Busy per Processor | +1.2% | 0.0% | +0.3% | -0.3% |

| Emulation Busy per Processor | 0.0% | -0.3% | +0.6% | -0.5% |

| CP Busy per Processor | +4.4% | +0.8% | -0.4% | 0.0% |

| T/V Ratio | +0.7% | 0.0% | 0.0% | 0.0% |

| Notes: All deltas are percentage difference of z/VM 6.4 measurements compared to z/VM 6.3 measurements. CEC model 2964-NC9. Dedicated LPAR with IFL cores in non-SMT mode. | ||||

Summary and Conclusions

Memory Management Serialization Contention Relief provides performance improvements as central storage size is increased. The results of IBM measurements demonstrate spin lock contention is reduced and workloads scale up to the new z/VM 6.4 maximum supported central storage size of 2 TB.

Back to Table of Contents.

z/VM Paging Improvements

Abstract

z/VM 6.4 provides several paging enhancements. The Control Program (CP) was improved to increase both I/O payload sizes and the efficiency of page blocking. Also, CP can use the HyperPAV feature of the IBM System Storage DS8000 line of storage controllers for paging I/O. Further, CP can also use High Performance FICON (zHPF) transport-mode I/O for paging I/O. For amenable z/VM paging workloads, these enhancements can result in increased throughput or the equivalent throughput with fewer physical volumes.

IBM experiments using the command-mode paging driver resulted in a 42% transaction rate improvement. Adding HyperPAV aliases to a paging workload with I/Os queueing on the paging devices resulted in a 42% transaction rate improvement. Using transport-mode I/O resulted in a 98% transaction rate improvement. Using HyperPAV aliases and transport-mode I/Os resulted in a 234% transaction rate improvement.

Introduction

In z/VM 5.3 IBM introduced HyperPAV support for DASD volumes containing guest minidisks. z/VM 6.4 exploits HyperPAV for paging extents. Readers not familiar with HyperPAV or not familiar with z/VM's HyperPAV support should read IBM's HyperPAV technology description before continuing here.

In z/VM 6.2 IBM introduced zHPF support for guest operating system use. z/VM 6.4 exploits zHPF for paging I/O. Readers not familiar with zHPF or not familiar with z/VM's zHPF support for guests should read IBM's High Performance FICON description before continuing here. To use zHPF for paging I/Os, FICON Express8S or newer is required.

z/VM 6.4 also features enhancements to the paging subsystem improving logical page blocking and increasing I/O payload.

The command-mode I/O driver is the default paging I/O driver for z/VM 6.4. zHPF and HyperPAV aliases are optional and need to be enabled for use. Improvements were also made in the paging subsystem to increase the number of contiguous slots written on a single volume by one channel program, resulting in larger I/O payloads.

Method

Paging Evaluations

The new paging I/O options were evaluated with a Virtual Storage Exerciser (VIRSTOR) workload. The particulars of the workload held constant across all paging measurements were:

- z13, dedicated LPAR, eight IFL cores, non-SMT, 128 GB central storage

- Paging volumes on a single LCU of a DS8800

- Eight 18 GB virtual uniprocessor users running VIRSTOEX, a memory thrasher, with VIRSTOEX configured to cause heavy paging by looping through a 16 GB loop touching every page.

- VIRSTOR parameters: loops=1,cpus=01,i=4,v=4,w=50

Command-Mode Paging Driver Measurement

This measurement compares the command-mode paging driver to the z/VM 6.3 paging driver. Both runs use the same four paging extents.

Transport-Mode Paging Driver Measurement

This measurement compares the transport-mode paging driver to the command-mode paging driver. Both runs use the same four paging extents.

HyperPAV Alias Paging Measurement

This measurement demonstrates the effect of adding HyperPAV aliases to a paging workload capable of using them. Both runs use the same four paging extents. The comparison run has four HyperPAV aliases enabled for use with the paging volumes.

HyperPAV Alias Paging DASD Reduction Measurement

This measurement demonstrates the effect of using HyperPAV aliases to replace paging volumes to achieve the same parallelism. The base run uses eight paging extents. The comparison run uses four paging extents and four HyperPAV aliases enabled for use with the paging volumes.

Transport-Mode Paging Driver and HyperPAV Alias Paging Measurement

This measurement demonstrates the effect of using z/VM 6.4 with HyperPAV aliases and transport-mode both enabled for use by four paging volumes.

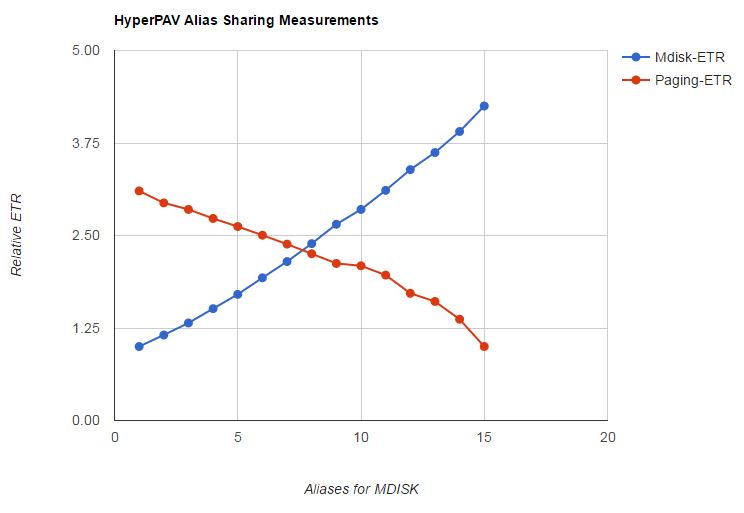

HyperPAV Alias Sharing Measurement

In z/VM 6.4 the CP SET CU command was enhanced to let an administrator specify a sharing policy the Control Program should observe when minidisk I/O and paging I/O are competing for the HyperPAV aliases of an LCU. The sharing policy implements a relative-share model matching the notion of relative CPU share as implemented by CP SET SHARE. In the present case of the sharing of HyperPAV aliases, minidisk I/O and paging I/O are the "users" and the HyperPAV aliases are the contended-for resource to be shared. When there is more demand for HyperPAV aliases than there are HyperPAV aliases available, the Control Program hands out the aliases to minidisk I/O and to paging I/O in accordance with their respective CU alias share settings.

To measure the effect of the CP SET CU command, IBM set up a hybrid, memory-constrained workload consisting of two groups of users. The first group had a high demand for minidisk I/O. Further, via CP SET RESERVED this first group was kept protected from central storage contention. The second group, memory thrashers, had a high demand for paging I/O. The real DASD volumes holding the minidisks and the real DASD volumes holding the paging space resided together in a single LCU along with some HyperPAV aliases. By using the CP SET CU command to vary the alias-share settings for minidisk I/O and paging I/O, IBM was able to observe whether the Control Program enforced the CU share settings correctly. IBM was also able to see the effect the CU share settings had on the performance of the two groups of users.

The particulars of the workload were:

- z13, dedicated LPAR, four cores, non-SMT, 8 GB central.

- A single LCU of a DS8800, said LCU equipped with four DASD volumes holding minidisks, two DASD volumes holding paging space, and sixteen HyperPAV aliases attached to SYSTEM.

- Thirty-two virtual uniprocessor guests running the IO3390 DASD I/O exerciser, each such guest protected from memory contention via CP SET RESERVED. In this group of guests, a transaction is a minidisk I/O. Notice IBM used more IO3390 guests than there were SYSTEM-attached HyperPAV aliases. This was done so MDISK alias demand would always be high enough to use all the aliases.

- Twelve virtual uniprocessor users running VIRSTOCX, a memory thrasher, with VIRSTOCX configured to cause heavy paging. In this group of guests, a transaction is 1000 references to pages. A reference might or might not cause a page fault. Notice IBM used fewer VIRSTOCX guests than the number of SYSTEM-attached HyperPAV aliases. This was done so PAGING would not have enough alias demand to use all of its alias entitlement when its alias entitlement was very high.

IBM did fifteen runs of this workload. Each run used a different pair of relative-share settings for MDISK and PAGING. The pairs of settings were chosen to cover the spectrum from heavy favor for MDISK, to equal weight, to heavy favor for PAGING. IBM recorded the ETR of the minidisk users and also the ETR of the paging users. IBM also used the D6 R28 MRIODHPP monitor records to observe whether the Control Program were respecting the CU share settings.

Results and Discussion

Command-Mode Paging Driver Measurement

Table 1 reports the result of the command-mode measurement.

| Table 1. Effect of command-mode paging driver. | ||||

| Run ID | V4DVX001 | V4DVY000 | Delta | Pct |

| CP level | 6.3.0 | 6.4.0 | ||

| Type and model | 2964-NC9 | 2964-NC9 | ||

| Processors | 8 | 8 | 0 | 0.0 |

| SYSGEN storage | 131072 | 131072 | 0 | 0.0 |

| Paging devices | 4 | 4 | 0 | 0.0 |

| ETR | 0.0310 | 0.0442 | 0.0132 | 42.6 |

| Total busy | 12.0 | 15.4 | 3.4 | 28.3 |

| Guest busy | 2.5 | 3.7 | 1.2 | 48.0 |

| Chargeable CP busy | 1.1 | 1.2 | 0.1 | 9.1 |

| Nonchargeable CP busy | 8.4 | 10.5 | 2.1 | 25.0 |

| Chargeable CP busy, /tx | 0.0355 | 0.0271 | -0.0084 | -23.7 |

| Nonchargeable CP busy, /tx | 0.2710 | 0.2376 | -0.0334 | -12.3 |

| Pages written to DASD, /sec | 31985 | 44459 | 12474 | 39.0 |

| Pages read from DASD, /sec | 32027 | 44466 | 12439 | 38.8 |

| Paging SSCH insts, /sec | 1487 | 1578 | 91 | 6.1 |

| Pages per SSCH | 43.0 | 56.4 | 13.4 | 31.1 |

| Page read blocking | 28 | 38 | 10 | 35.7 |

| Page write blocking | 27 | 32 | 5 | 18.5 |

| Page slot utilization, % | 11 | 15 | 4 | 36.4 |

| DASD connect time, /IO, ms | 2.250 | 1.970 | -0.280 | -12.4 |

| DASD disconnect time, /IO, ms | 0.019 | 0.006 | -0.013 | -68.4 |

| DASD pending time, /IO, ms | 0.135 | 0.139 | 0.004 | 3.0 |

| DASD service time, /IO, ms | 2.400 | 2.110 | -0.290 | -12.1 |

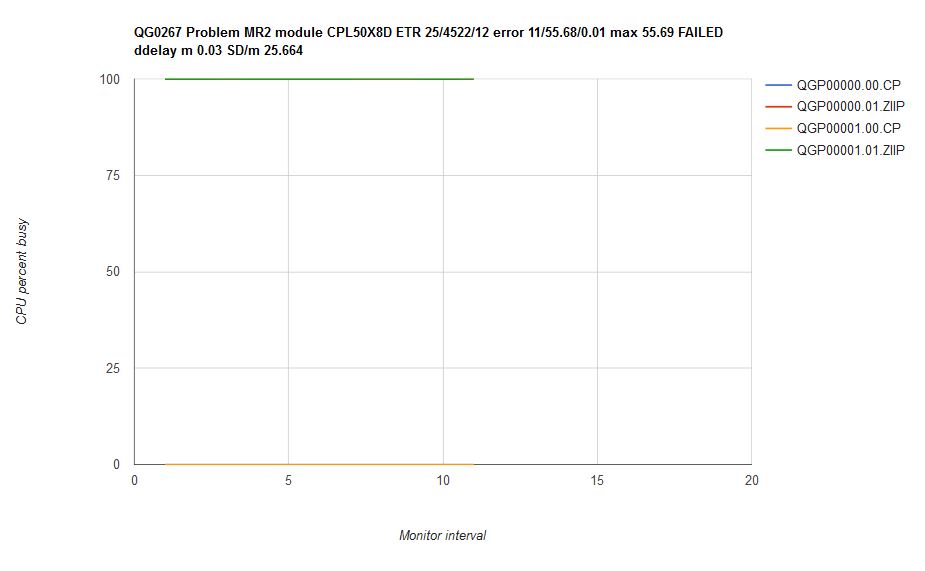

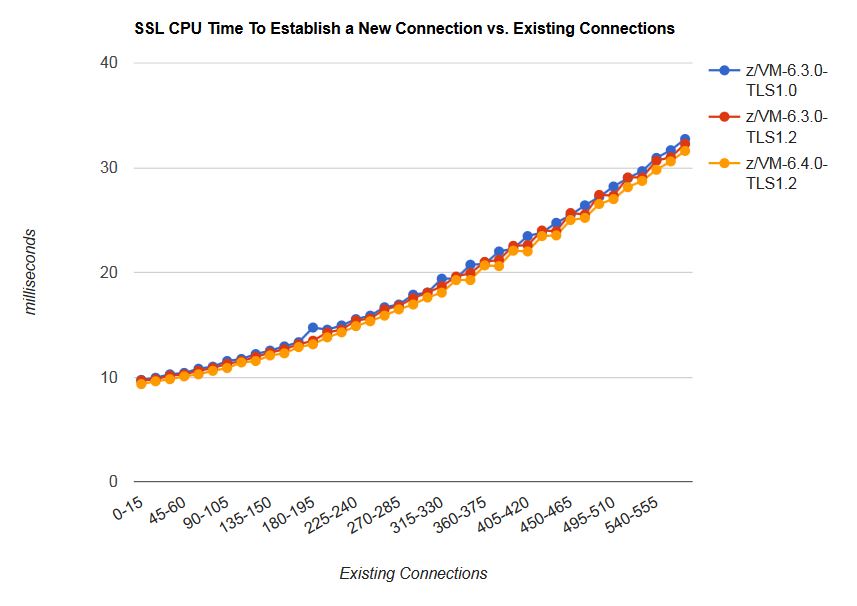

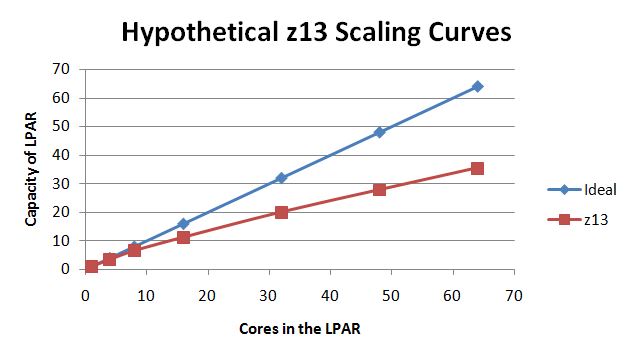

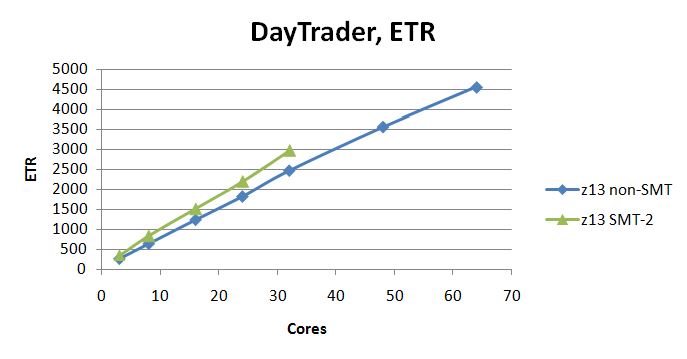

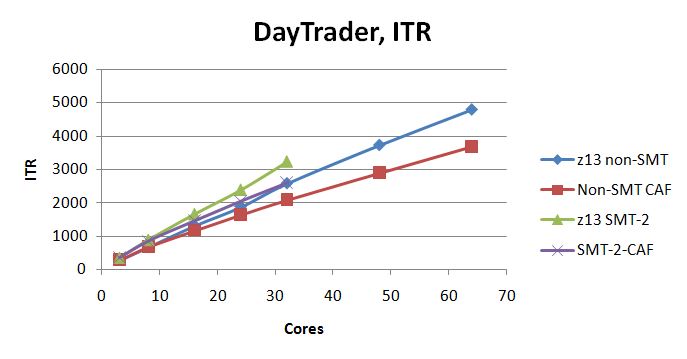

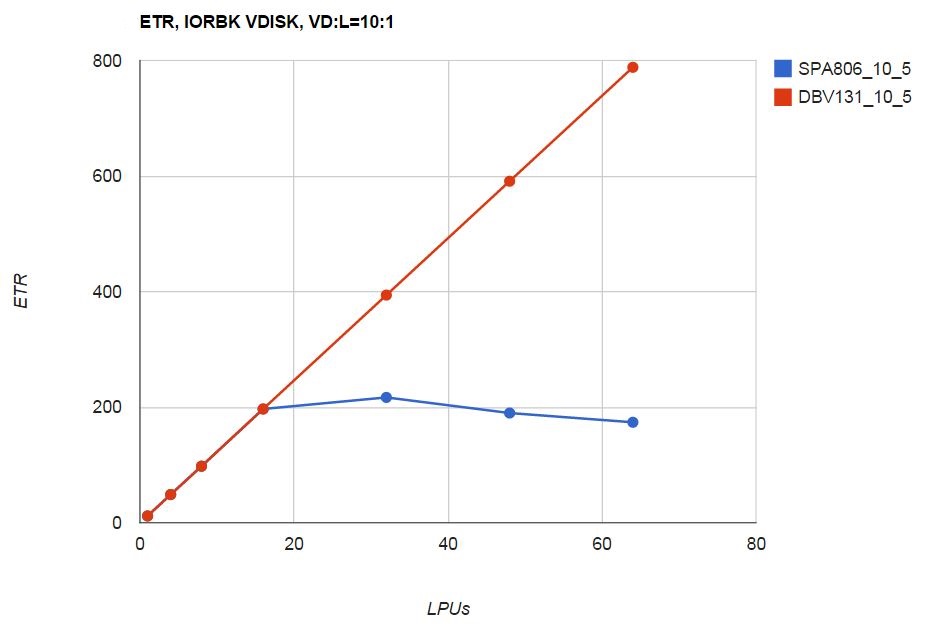

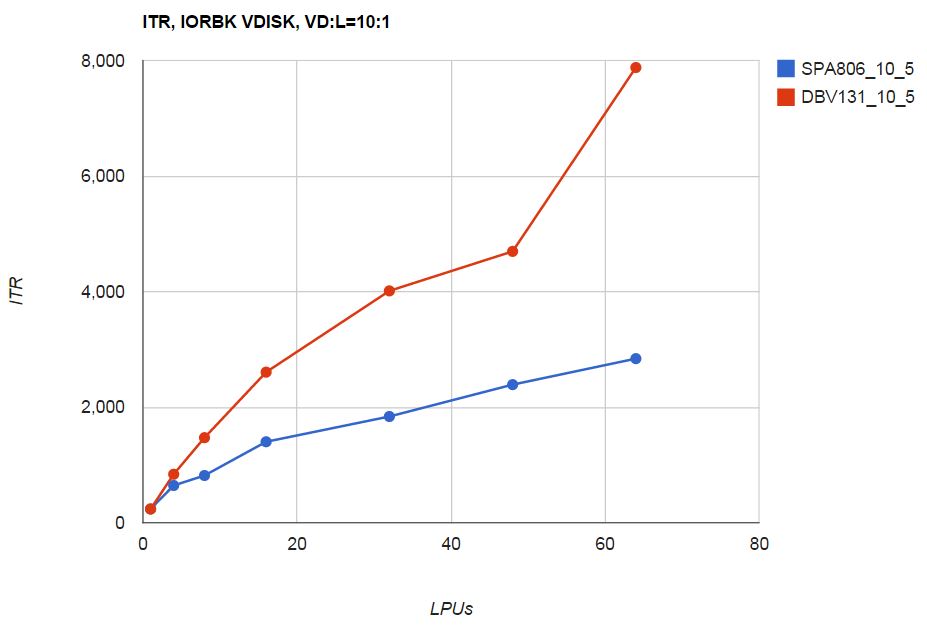

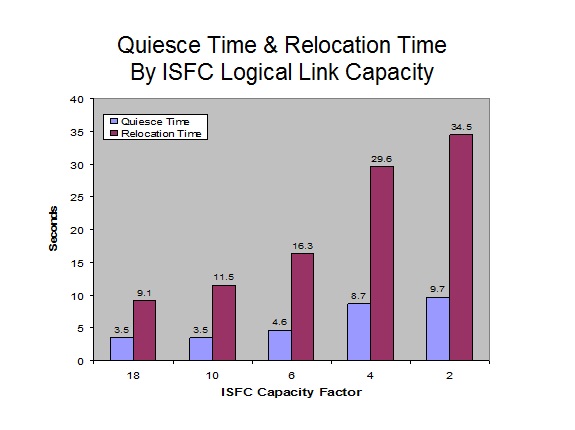

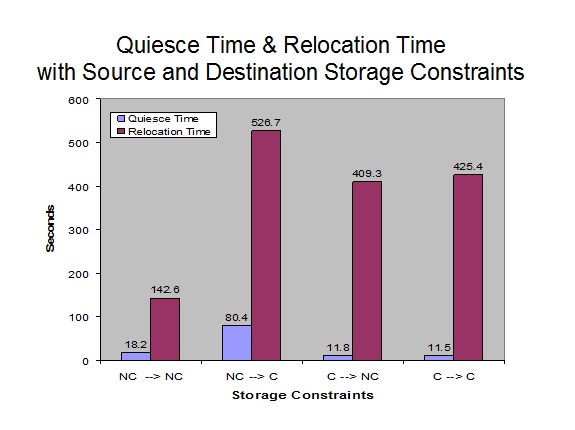

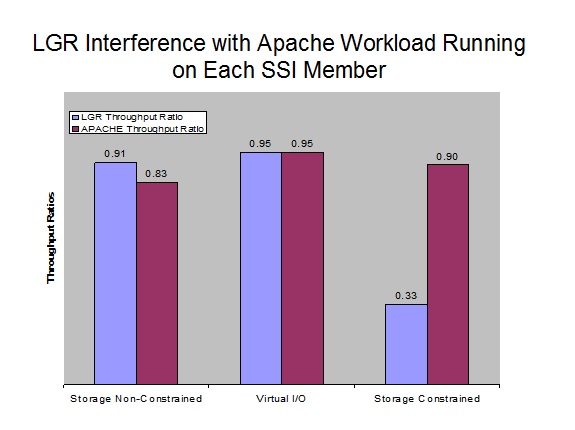

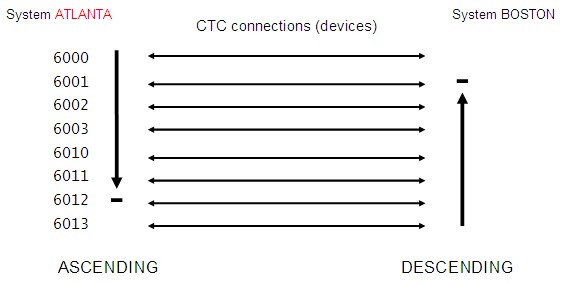

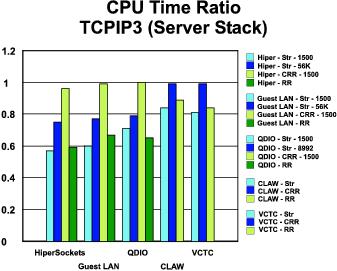

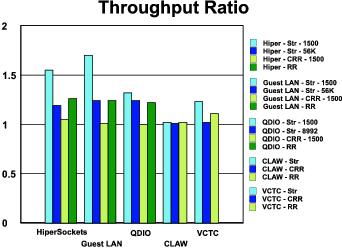

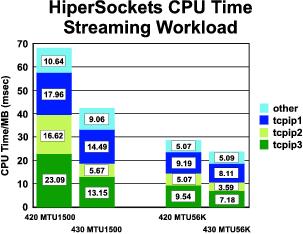

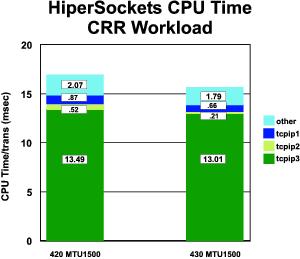

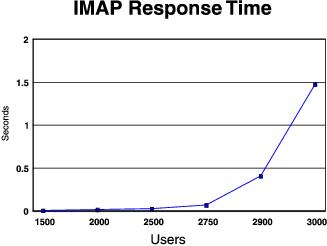

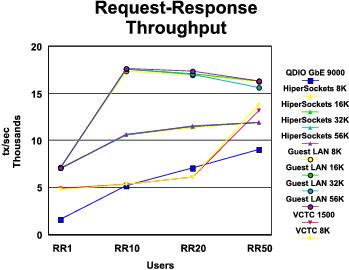

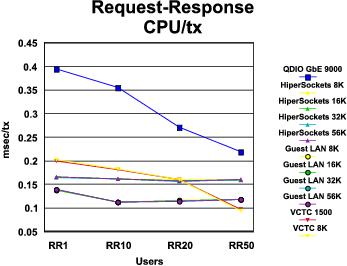

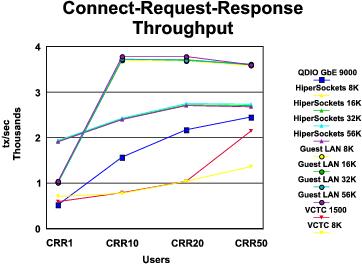

| Notes: z13, dedicated 8-core, non-SMT, 128 GB central. DS8800 with single LCU containing four DASD volumes holding paging extents, eight VIRSTOEX users, virtual uniprocessor, configured to page heavily. | ||||