Live Guest Relocation

Abstract

With z/VM 6.2, the z/VM Single System Image (SSI) cluster is introduced. SSI is a multisystem environment in which the z/VM member systems can be managed as a single resource pool. Running virtual servers (guests) can be relocated from one member to another within the SSI cluster using the new VMRELOCATE command. For a complete description of the VMRELOCATE command, refer to z/VM: CP Commands and Utilities Reference.

Live Guest Relocation (LGR) is a powerful tool that can be used to manage maintenance windows, balance workloads, or perform other operations that might otherwise disrupt logged-on guests. For example, LGR can be used to allow critical Linux servers to continue to run their applications during planned system outages. LGR can also enable workload balancing across systems in an SSI cluster without scheduling outages for Linux virtual servers. For information concerning setting up an SSI cluster for LGR, refer to z/VM: Getting Started with Linux on System z.

For live guest relocation, our experiments evaluated two key measures of relocation performance: quiesce time and relocation time.

- Quiesce time is the amount of time a relocating guest virtual machine is stopped. Quiesce occurs during the final two passes through storage to move all the guest's pages that have changed since the previous pass. It is important to minimize the quiesce time because the guest is not running for this length of time. Certain applications may have a limit on the length of quiesce time that they can tolerate and still resume running normally after relocation.

- Relocation time is the amount of elapsed time from when the VMRELOCATE command is issued to when the guest virtual machine is successfully restarted on the destination system. The relocation time represents the total time required to complete the relocation of a guest virtual machine. Relocation time can be important because a whole set of relocations might need to be accomplished during a fixed period of time, such as a maintenance window.

The performance evaluation of LGR surfaced a number of factors affecting quiesce time and relocation time. The virtual machine size of the guest being relocated, the existing work on the source system and destination system, storage constraints on the source and destination systems, and the ISFC logical link configuration all influence the performance of relocations.

The evaluation found that serial relocations (one at a time) generally provide the best overall performance. If minimizing quiesce time is not a priority for the guest being relocated, the IMMEDIATE option provides the most efficient relocation.

Some z/VM monitor records have been updated and additional monitor records have been added to monitor the SSI cluster and guest relocations. A summary of the z/VM monitor record changes for z/VM 6.2 is available here.

Introduction

With z/VM 6.2, the introduction of z/VM Single System Image clusters and live guest relocation further improves the high availability of z/VM virtual servers and their applications. LGR provides the capability to move guest virtual machines with running applications between members of an SSI cluster. Prior to z/VM 6.2, customers had to endure application outages in order to perform system maintenance or other tasks that required a virtual server to be shut down or moved from one z/VM system to another.

This article explores the performance aspects of LGR, specifically, quiesce time and the total relocation time, for Linux virtual servers that are relocated within an SSI cluster. The system configurations and workload characteristics are discussed in the context of the performance evaluations conducted with z/VM 6.2 systems configured in an SSI cluster.

Background

This background section provides a general overview of things you need to consider before relocating guests. In addition, there is a discussion about storage capacity checks that are done by the system prior to and during the relocation process. There is also an explanation of how relocation handles memory move operations. Finally, there is a discussion of the throttling mechanisms built into the system to guard against overrun conditions that can arise on the source or destination system during a relocation.

General Considerations Prior to Relocation

In order to determine whether there are adequate storage resources available on the destination system, these factors should be considered:

- Storage taken by private VDISKs that the guest owns.

- The size to which the guest could grow, including standby and reserved storage settings.

- The level of storage overcommitment on the destination system prior to relocation.

Relocation may increase paging space demands on the destination system. Adhere to existing guidelines regarding number of paging slots required, remembering to include the incoming guests in calculations. One guideline often quoted is that the total number of defined paging slots should be at least twice as large as the total virtual storage across all guests and VDISKs. This can be checked with the UCONF, VDISKS, and DEVICE CPOWNED reports of Performance Toolkit.

One simple paging space guideline is to avoid running the system in such a way that DASD paging space becomes more than 50% full. The easiest way to check this is to issue CP QUERY ALLOC PAGE, which shows the percent used, the slots available, and the slots in use. If adding the size of the virtual machine(s) to be relocated (one 4 KB page occupies one 4 KB slot) to the slots in use brings the in-use percentage above 50%, the relocation may have an undesirable impact on system performance.
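The two paging space guidelines above reduce to simple arithmetic. The following is a minimal sketch, not a z/VM tool: the function names and the `total_slots` parameter are hypothetical, and the slot counts are assumed to be read by hand from CP QUERY ALLOC PAGE or the Performance Toolkit reports.

```python
PAGE_SIZE_BYTES = 4096  # one 4 KB page occupies one 4 KB paging slot


def size_to_slots(size_gib):
    """Convert a storage size in GiB to a count of 4 KB paging slots."""
    return int(size_gib * 1024**3 // PAGE_SIZE_BYTES)


def projected_paging_percent(slots_in_use, total_slots, incoming_guest_gib):
    """Percent of paging space in use if the incoming guests were fully paged out.

    incoming_guest_gib is a list of virtual machine sizes (GiB) to be relocated.
    """
    incoming = sum(size_to_slots(g) for g in incoming_guest_gib)
    return 100.0 * (slots_in_use + incoming) / total_slots


def meets_two_times_guideline(total_slots, total_virtual_gib):
    """Check the 'defined slots >= 2 x total virtual storage' guideline.

    total_virtual_gib covers all guests and VDISKs, including incoming guests.
    """
    return total_slots >= 2 * size_to_slots(total_virtual_gib)


if __name__ == "__main__":
    pct = projected_paging_percent(2_000_000, 10_000_000, [4])
    print(f"projected paging space in use: {pct:.1f}%")
    if pct > 50.0:
        print("relocation may have an undesirable impact on system performance")
```

The sketch deliberately assumes the worst case, in which every page of each incoming guest ends up in a paging slot.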

If in doubt about available resources on the destination system, issue VMRELOCATE TEST first. The output of this command will include appropriate messages concerning potential storage issues on the destination system that could result if the relocation is attempted.

It is important to note that the SET RESERVED setting for the guest (if any) on the source system is not carried over to the destination system. The SET RESERVED setting for the guest on the destination should be established after the relocation completes based on the available resources and workload on the destination system.

Similarly, it is important to consider the share value for the guest being relocated. Even though the guest's SET SHARE value is carried over to the destination system, it may need to be adjusted with respect to the share values of other guests running on the destination system.

For a complete list of settings that do not carry over to the destination system or that should be reviewed, consult the usage notes in the VMRELOCATE command documentation (HELP VMRELOCATE).

Certain applications may have a limit on the length of quiesce time (length of time the application is stopped) that they can tolerate and still resume running normally after relocation. Consider using the MAXQuiesce option of the VMRELOCATE command to limit the length of quiesce time.
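As an illustration of how the options discussed in this article might be combined, the sketch below assembles VMRELOCATE command strings. It is a hypothetical helper, not part of z/VM. The operand layout used here (`TEST` or `MOVE`, then `USER userid TO destination`, then options) is an assumption that should be verified against HELP VMRELOCATE or z/VM: CP Commands and Utilities Reference before use. The user ID and member name in the usage lines are made up.

```python
def build_vmrelocate(action, userid, destination, *, synchronous=None,
                     immediate=False, max_quiesce_seconds=None,
                     force_storage=False):
    """Return a VMRELOCATE command string (operand layout is an assumption)."""
    action = action.upper()
    if action not in ("TEST", "MOVE"):
        raise ValueError("action must be TEST or MOVE")
    parts = ["VMRELOCATE", action, "USER", userid.upper(), "TO", destination.upper()]
    if action == "MOVE":
        # Options named in this article: SYNC/ASYNC, IMMEDIATE, MAXQuiesce, FORCE STORAGE
        if synchronous is True:
            parts.append("SYNC")
        elif synchronous is False:
            parts.append("ASYNC")
        if immediate:
            parts.append("IMMEDIATE")
        if max_quiesce_seconds is not None:
            parts += ["MAXQUIESCE", str(int(max_quiesce_seconds))]
        if force_storage:
            parts += ["FORCE", "STORAGE"]
    return " ".join(parts)


if __name__ == "__main__":
    # Hypothetical guest LINUX01 and destination member MEMBER2
    print(build_vmrelocate("TEST", "linux01", "member2"))
    print(build_vmrelocate("MOVE", "linux01", "member2", max_quiesce_seconds=5))
```

Issuing the TEST form first, then the MOVE form with a quiesce limit, mirrors the order of precautions recommended in this section.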

Mandatory Storage Checking Performed During Relocation

As part of eligibility checking and after each memory move pass, relocation ensures the guest's current storage size fits into available space on the destination system. For purposes of the calculation, relocation assumes the guest's storage is fully populated (including the guest's private VDISKs) and includes an estimate of the size of the supporting CP structures. Available space includes the sum of available central, expanded, and auxiliary storage.

This storage availability check cannot be bypassed. If it fails, the relocation is terminated. The error message displayed indicates the size of the guest along with the available capacity on the destination system.

Optional Storage Checks Performed During Relocation

In addition to the mandatory test described above, by default the following three checks are also performed during eligibility checking and after each memory move pass. The guest's maximum storage size includes any standby and reserved storage defined for it.

- Will the guest's current storage size (including CP supporting structures) exceed auxiliary paging space on the destination system?

- Will the guest's maximum storage size (including CP supporting structures) exceed available space (central storage, expanded storage, and auxiliary storage) on the destination system?

- Will the guest's maximum storage size (including CP supporting structures) exceed auxiliary paging space on the destination system?

If any of these tests fail, the relocation is terminated. The error messages displayed indicate the size of the guest along with the available capacity on the destination system.

If you decide the above three checks do not apply to your installation (for instance, because there is an abundance of central storage and a less-than-recommended amount of paging space), you can bypass them by specifying the FORCE STORAGE option on the VMRELOCATE command.
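The mandatory check and the three optional checks can be summarized as four comparisons. The following is a minimal sketch of that logic as described above, not CP's implementation: the class names, field names, and the `force_storage` flag are hypothetical, all sizes are in pages, and the CP structure overhead is simply passed in as an estimate.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Guest:
    current_pages: int      # current size, assumed fully populated, incl. private VDISKs
    maximum_pages: int      # includes standby and reserved storage
    cp_overhead_pages: int  # estimate of supporting CP structures


@dataclass
class Destination:
    central_pages: int
    expanded_pages: int
    auxiliary_pages: int    # paging space

    @property
    def available_pages(self) -> int:
        return self.central_pages + self.expanded_pages + self.auxiliary_pages


def storage_check_failures(guest: Guest, dest: Destination,
                           force_storage: bool = False) -> List[str]:
    """Return the list of failed checks; an empty list means the guest fits."""
    current = guest.current_pages + guest.cp_overhead_pages
    maximum = guest.maximum_pages + guest.cp_overhead_pages
    failures = []
    # Mandatory check: cannot be bypassed
    if current > dest.available_pages:
        failures.append("current size exceeds available space")
    # Optional checks: skipped when FORCE STORAGE is specified
    if not force_storage:
        if current > dest.auxiliary_pages:
            failures.append("current size exceeds auxiliary paging space")
        if maximum > dest.available_pages:
            failures.append("maximum size exceeds available space")
        if maximum > dest.auxiliary_pages:
            failures.append("maximum size exceeds auxiliary paging space")
    return failures
```

In this model any non-empty result terminates the relocation, and the same function would be evaluated during eligibility checking and again after each memory move pass.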

Determining the Number of Passes Through Storage During Relocation

When relocating a guest, the number of passes made through the guest's storage (referred to as memory move passes) is a factor in the length of quiesce time and relocation time for the relocating guest.

The number of memory move passes for any relocation will vary from three to 18. The minimum of three -- called first, penultimate, and final -- can be obtained only by using the IMMEDIATE option on the VMRELOCATE command.

When the IMMEDIATE option is not specified, the number of intermediate memory move passes is determined by various algorithms based on the number of changed pages in each pass to attempt to reduce quiesce time.

- A relocation will complete in four passes if the number of pages moved in pass 2 is lower than the calculated quiesce threshold or the time value specified as the maximum quiesce time (MAXQuiesce option) is expected to be met.

- A relocation of a guest whose application is not making progress toward the quiesce threshold will complete in eight passes.
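The pass counts described above can be illustrated with a toy model. This is a sketch only: the actual CP algorithms are not spelled out in this article, so the "no progress" rule used here (the changed-page count at pass 6 is no lower than at pass 2) and all names are assumptions made for illustration.

```python
MIN_PASSES = 3           # first, penultimate, final (IMMEDIATE only)
NO_PROGRESS_PASSES = 8   # relocation that is not converging
MAX_PASSES = 18          # upper bound on memory move passes


def estimate_passes(changed_pages, quiesce_threshold, immediate=False):
    """Estimate the total number of memory move passes.

    changed_pages[i] is the number of pages moved in pass i + 2 (pass 2, 3, ...).
    The list must be non-empty; its last value is reused if it is too short.
    """
    if immediate:
        return MIN_PASSES
    last_intermediate = MAX_PASSES - 2  # the two quiesce passes always follow
    for pass_number in range(2, last_intermediate + 1):
        idx = min(pass_number - 2, len(changed_pages) - 1)
        moved = changed_pages[idx]
        if moved < quiesce_threshold:
            # Few enough changed pages: go to the penultimate and final passes
            return pass_number + 2
        if pass_number == NO_PROGRESS_PASSES - 2 and moved >= changed_pages[0]:
            # Assumed rule: no reduction since pass 2, so stop trying
            return NO_PROGRESS_PASSES
    return MAX_PASSES


if __name__ == "__main__":
    print(estimate_passes([100], quiesce_threshold=1000))            # idle-like guest
    print(estimate_passes([6_000_000] * 5, quiesce_threshold=1000))  # constant churn
```

Under these assumptions an idle guest finishes in four passes, a guest that changes pages at a constant rate finishes in eight, and a guest that converges slowly runs to the maximum of 18.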

Live Guest Relocation Throttling Mechanisms

The relocation process monitors system resources and might determine a relocation needs to be slowed down temporarily to avoid exhausting system resources.

Conditions that can arise and cause a throttle on the source system are:

- A shortage of available frames for storing outbound guest page content,

- An excessive number of paging requests queued on the paging volumes,

- An excessive number of outbound messages queued on the ISFC logical link,

- A destination system backlog where the destination system signals the source system to suspend processing until the overrun condition on the destination system is relieved.

Conditions that can arise and cause a throttle on the destination system are:

- A shortage of available frames for storing inbound guest page content,

- An excessive number of paging requests queued on the paging volumes,

- Contention with the storage frame replenishment mechanism for serialization of the relocating guest's pages,

- An excessive number of inbound messages on the logical link memory move socket.

Also, because relocation messages need to be presented to the destination system in the order in which they are sent, throttling might occur for the purpose of ordering inbound messages arriving on the ISFC logical link.

Resource Consumption Habits of Live Guest Relocation

Live guest relocation has a high priority with regard to obtaining CPU cycles, because it runs under SYSTEMMP in the z/VM Control Program. SYSTEMMP work is prioritized over all non-SYSTEMMP work.

However, from a storage perspective, the story is quite different. Existing work's storage demands take priority over LGR work. As a result, LGR performance is worse when the source system and/or destination system is storage constrained.

Factors that Affect the Performance of Live Guest Relocation

Several factors affect LGR's quiesce time and relocation time. These factors are summarized here; the discussion is expanded where they are encountered in our workload evaluations.

- The virtual machine size of the guest being relocated. The larger the size, the longer the quiesce time and relocation time. The guest's dynamic address translation (DAT) table structures are scanned for relocation. The size of the DAT structures is directly related to the size of the guest virtual machine.

- Existing work on the source and destination systems. Existing work can cause contention for resources used by LGR. Generally, the more work being performed on the source and destination systems, the longer it will take relocations to complete.

- Resource use characteristics of the applications and the running environment of the relocating guest. Characteristics such as how often the guest's pages are changing and whether the guest's pages are in resident memory, in expanded memory, or on DASD paging packs affect the length of quiesce time and relocation time.

- System constraints on the source and destination systems. Storage constraints and CPU constraints will lengthen quiesce time and relocation time whenever LGR must contend for these resources.

- Concurrent versus serial guest relocations. Concurrent relocations create more contention for system resources. This contention typically results in longer quiesce times. With one-at-a-time relocations, there is generally less resource contention.

- The configuration of the ISFC logical link between the source system and the destination system. The ISFC logical link is most efficient when each FICON CHPID has four to five real device addresses activated. The speed of the FICON CHPIDs is also a factor. For example, 8 Gigabit FICON CHPIDs will move data faster than 4 Gigabit FICON CHPIDs. For more information about ISFC logical link performance, refer to our ISFC article.

Method

The performance aspects of live guest relocation were evaluated with various workloads running with guests of various sizes, running various combinations of applications, and running in configurations with and without storage constraints. These measurements were done with two-member SSI clusters. With the two-member SSI cluster, both member systems were in partitions with four dedicated general purpose CPs (Central Processors) on the same z10 CEC (Central Electronics Complex). Table 1 below shows the ISFC logical link configuration for the two-member SSI cluster.

Table 1. ISFC logical link configuration for the two-member SSI cluster.

| SSI Member System | ISFC Logical Link (FICON CHPIDs) | ISFC Capacity Factor * | SSI Member System |
|---|---|---|---|
| GDLMPPB1 | 1-2Gb, 2-4Gb, 1-8Gb | 18 | GDLMPPB2 |

Note: * ISFC capacity factor is the sum of speeds of the FICON CHPIDs between the SSI member systems.
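The capacity factor defined in the note can be computed directly from the CHPID notation used in the tables, where an entry such as `2-4Gb` means two CHPIDs of 4 Gigabit each. The helper below is a small sketch for checking the table values; the function name is hypothetical.

```python
import re


def isfc_capacity_factor(chpids):
    """Sum count x speed over entries like '1-2Gb, 2-4Gb, 1-8Gb'."""
    total = 0
    for item in chpids.split(","):
        match = re.fullmatch(r"\s*(\d+)-(\d+)Gb\s*", item)
        if match is None:
            raise ValueError(f"unrecognized CHPID entry: {item!r}")
        count, speed_gb = (int(x) for x in match.groups())
        total += count * speed_gb
    return total


if __name__ == "__main__":
    # The two-member cluster link: 1x2 + 2x4 + 1x8
    print(isfc_capacity_factor("1-2Gb, 2-4Gb, 1-8Gb"))
```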

Measurements were also conducted to evaluate the effect of LGR on existing workloads on the source and destination systems. A four-member SSI cluster was used to evaluate these effects. The four-member SSI cluster was configured across two z10 CECs with three members on one CEC and one member on the other CEC. Each member system was configured with four dedicated general purpose CPs. Table 2 below shows the ISFC logical link configuration for the four-member SSI cluster.

Table 2. ISFC logical link configuration for the four-member SSI cluster.

| SSI Member System | ISFC Logical Link (FICON CHPIDs) | ISFC Capacity Factor * | SSI Member System |
|---|---|---|---|
| GDLEPPA1 | 2-2Gb, 2-4Gb | 12 | GDLMPPA2 |
| GDLEPPA1 | 1-2Gb, 1-4Gb | 6 | GDLMPPA3 |
| GDLEPPA1 | 1-2Gb, 1-4Gb | 6 | GDLMPPA4 |
| GDLEPPA2 | 2-2Gb, 2-4Gb | 12 | GDLMPPA3 |
| GDLEPPA2 | 1-2Gb, 1-4Gb | 6 | GDLMPPA4 |
| GDLEPPA3 | 1-2Gb, 1-4Gb | 6 | GDLMPPA4 |

Note: * ISFC capacity factor is the sum of speeds of the FICON CHPIDs between the SSI member systems.

Results and Discussion

Evaluation - LGR with an Idle Guest with Varying Virtual Machine Size

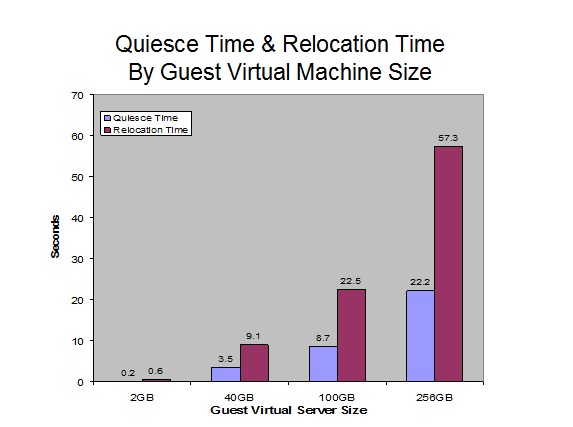

The effect of virtual machine size on LGR performance was evaluated using an idle Linux guest. The selected virtual machine storage sizes were 2G, 40G, 100G, and 256G.

An idle guest is the best configuration to use for this measurement. With an idle guest there are very few pages moved after the first memory move pass. This means an idle guest should provide the minimum quiesce time and relocation time for a guest of a given size. Further, results should scale uniformly with the virtual storage size and are largely controlled by the capacity of the ISFC logical link.

The measurement ran in a two-member SSI cluster connected by an ISFC logical link made up of four FICON CTC CHPIDs configured as shown in Table 1.

There was no other active work on either the source or destination system.

Figure 1 illustrates the scaling of LGR quiesce time and relocation time for selected virtual machine sizes for an idle Linux guest.

Quiesce time for an idle guest is dominated by the scan of the DAT tables and scales uniformly with the virtual storage size. Relocation time for an idle guest is basically the sum of the pass 1 memory move time and the quiesce time.

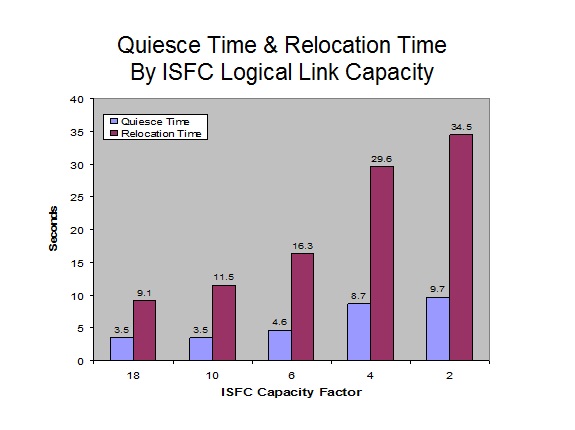

Evaluation - Capacity of the ISFC Logical Link

The effect of ISFC logical link capacity on LGR performance was evaluated using a 40G idle Linux guest. With an idle guest there are very few pages moved after the first memory move pass, so it is the best configuration to use to observe performance as a function of ISFC logical link capacity.

The evaluation was done in a two-member SSI cluster connected by an ISFC logical link. Five different configurations of the ISFC logical link were evaluated. Table 3 below shows the ISFC logical link configuration, capacity factor, and number of FICON CTCs for the five configurations evaluated.

Table 3. Evaluated ISFC Logical Link Configurations.

| ISFC Logical Link CHPIDs | ISFC Capacity Factor * | CTCs/FICON CHPID | Total CTCs |
|---|---|---|---|
| 1-2Gb, 2-4Gb, 1-8Gb | 18 | 4 | 16 |
| 1-2Gb, 2-4Gb | 10 | 4 | 12 |
| 1-2Gb, 1-4Gb | 6 | 4 | 8 |
| 1-4Gb | 4 | 4 | 4 |
| 1-2Gb | 2 | 4 | 4 |

Note: * ISFC capacity factor is the sum of speeds of the FICON CHPIDs between the SSI member systems.

There was no other active work on either the source or destination system.

Figure 2 shows the LGR quiesce time and relocation time for the ISFC logical link configurations that were evaluated. The chart illustrates the scaling of the quiesce time and relocation time as the ISFC logical link capacity decreases.

Figure 2. LGR Quiesce Time and Relocation Time as the ISFC Logical Link Capacity Decreases.

LGR performance scaled uniformly with the capacity of the logical link. Relocation time increases as the capacity of the logical link is decreased. Generally, quiesce time also increases as the capacity of the logical link is decreased.

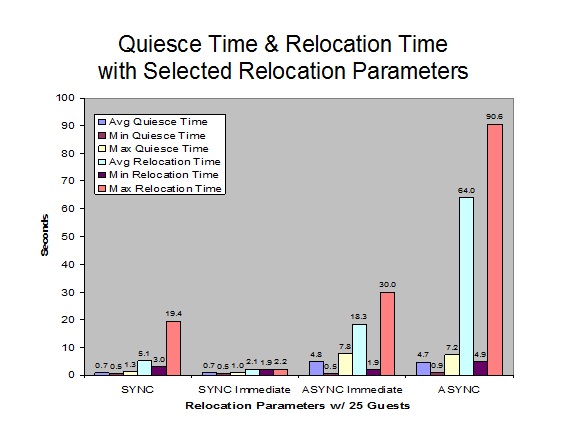

Evaluation - Relocation Options that Affect Concurrency and Memory Move Passes

The effect of certain VMRELOCATE command options was evaluated by relocating 25 identical Linux guests. Each guest was defined as virtual 2-way with a virtual machine size of 4GB. Each guest was running the PING, PFAULT, and BLAST applications. PING provides network I/O; PFAULT uses processor cycles and randomly references storage, thereby constantly changing storage pages; BLAST generates application I/O.

Evaluations were completed for four different combinations of relocation options, listed below. In each case, the VMRELOCATE command for the next guest to be relocated was issued as soon as the system would allow it.

- Synchronous: using the VMRELOCATE command SYNC option

- Synchronous immediate: using the VMRELOCATE command SYNC option and IMMEDIATE option

- Asynchronous immediate: using the VMRELOCATE command ASYNC option and IMMEDIATE option

- Asynchronous: using the VMRELOCATE command ASYNC option

The measurement ran in a two-member SSI cluster connected by an ISFC logical link made up of four FICON CTC CHPIDs configured as shown in Table 1.

There was no other active work on either the source or destination system.

Figure 3 shows the average, minimum, and maximum LGR quiesce time and relocation time across the 25 Linux guests with each of the four relocation option combinations evaluated.

The combinations were assessed on success measures that might be important in various customer environments. Table 4 shows the combinations that did best on the success measures considered. No single combination was best at all categories.

Table 4. Success Measures and VMRELOCATE Option Combinations

| Achievement | Synchronous | Synchronous immediate | Asynchronous immediate | Asynchronous |

| Best total relocation time for all users | X | |||

| Best individual relocation times | X | |||

| Best individual quiesce times | X | |||

| Least number of memory move passes | X | X | ||

| Best response times for PING | X |

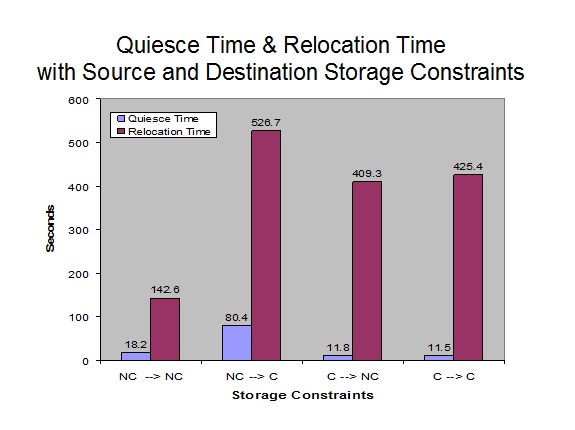

Evaluation - Storage Constraints

The effect of certain storage constraints was evaluated using a 100G Linux guest. The Linux guest was running the PFAULT application and changing 25% of its pages.

Four combinations of storage constraints were measured with this single large user workload:

- Non-constrained source system to non-constrained destination system (NC --> NC)

- Non-constrained source system to constrained destination system (NC --> C)

- Constrained source system to non-constrained destination system (C --> NC)

- Constrained source system to constrained destination system (C --> C)

To create these storage constraints, the following storage configurations were used:

- Non-constrained partitions were configured with 60G of central storage and 2G of expanded storage.

- Constrained partitions were configured with 22G of central storage and no expanded storage.

The measurement ran in a two-member SSI cluster connected by an ISFC logical link made up of four FICON CTC CHPIDs configured as shown in Table 1.

There was no other active work on either the source or destination system.

Figure 4 shows the LGR quiesce time and relocation time for the 100G Linux guest with each of the four storage-constrained combinations.

Figure 4. LGR Quiesce Time and Relocation Time with Source and/or Destination Storage Constraints.

No Constraints

Relocation for the non-constrained source to non-constrained destination (NC --> NC) took eight memory move passes.

Compared to the idle workload, relocation time increased approximately 500% because of the increased number of memory move passes and the increased number of changed pages moved during each pass. Quiesce time increased approximately 100% because of the increased number of changed pages that needed to be moved during the quiesce passes.

| Metric | Idle | NC-->NC * | Delta | Pct Delta |
|---|---|---|---|---|
| Run Name | W0-SGLDD | W0-SGLDA | | |
| Quiesce Time (sec) | 8.71 | 18.16 | 9.45 | 108.5 |
| Relocation Time (sec) | 22.46 | 142.56 | 120.10 | 534.7 |
| Memory Move Passes | 4 | 8 | 4 | 100.0 |
| Total Pages Moved | 1297347 | 41942816 | 40645469 | 3133.0 |

Note: * NC-->NC includes PFAULT running on the 100G Guest randomly changing 25% of its pages.
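The Delta and Pct Delta columns in the comparison tables in this section follow the usual definition: the comparison value minus the base value, and that difference as a percentage of the base. A short sketch, with a hypothetical function name, reproduces the values in the table above.

```python
def delta_and_pct(base, comparison):
    """Return (delta, percent delta relative to base), rounded as in the tables."""
    delta = comparison - base
    return round(delta, 2), round(100.0 * delta / base, 1)


if __name__ == "__main__":
    rows = {
        "Quiesce Time (sec)": (8.71, 18.16),
        "Relocation Time (sec)": (22.46, 142.56),
        "Total Pages Moved": (1297347, 41942816),
    }
    for metric, (idle, nc_nc) in rows.items():
        print(metric, delta_and_pct(idle, nc_nc))
```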

Non-Constrained Source to Constrained Destination (NC-->C)

Relocation for the non-constrained source to constrained destination took eight memory move passes as expected, with nearly 25G of changed pages moved in each of passes 2 through 6.

Compared to the non-constrained destination, relocation time increased more than 250% and quiesce time increased more than 300% because of throttling on the destination system while pages were being written to DASD. Since each memory move pass took longer, the application was able to change more pages and thus the total pages moved during the relocation was slightly higher.

| Metric | NC-->NC | NC-->C | Delta | Pct Delta |
|---|---|---|---|---|
| Run Name | W0-SGLDA | W0-SGLDF | | |
| Quiesce Time (sec) | 18.16 | 80.35 | 62.19 | 342.5 |
| Relocation Time (sec) | 142.56 | 526.69 | 384.13 | 269.5 |
| Memory Move Passes | 8 | 8 | 0 | 0 |
| Total Pages Moved | 41942816 | 46469235 | 4526419 | 10.8 |

Constrained Source

Relocation for the constrained source to non-constrained destination completed in the maximum 18 memory move passes. Because the application cannot change pages very rapidly (due to storage constraints on the source system), fewer pages need to be relocated in each pass, so progress toward a shorter quiesce time continues for the maximum number of passes.

Both measurements with the constrained source (C --> NC and C --> C) had similar relocation characteristics, so the constraint level of the destination system was not a significant factor.

Compared to the fully non-constrained measurement (NC --> NC), relocation time increased approximately 180%, but quiesce time decreased more than 30%. Fewer changed pages to move during the quiesce memory move passes accounts for the improved quiesce time.

| Metric | NC-->NC | C-->NC | Delta | Pct Delta |
|---|---|---|---|---|
| Run Name | W0-SGLDA | W0-SGLD8 | | |
| Quiesce Time (sec) | 18.16 | 11.84 | -6.32 | -34.8 |
| Relocation Time (sec) | 142.56 | 409.33 | 266.77 | 187.1 |
| Memory Move Passes | 8 | 18 | 10 | 125.0 |
| Total Pages Moved | 41942816 | 8914603 | -33028213 | -78.6 |

Evaluation - Effects of LGR on Existing Workloads

The effect of LGR on existing workloads was evaluated using two 40G idle Linux guests.

The measurement ran in a four-member SSI cluster connected by ISFC logical links using FICON CTC CHPIDs configured as shown in Table 2.

One idle Linux guest was continually relocated between members one and two, while a second idle Linux guest was continually relocated between members three and four.

A base measurement for the performance of these relocations was obtained by running the relocations alone in the four-member SSI cluster.

An Apache workload provided existing work on each of the SSI member systems. Three different Apache workloads were used for this evaluation:

- Non-constrained storage environment with Apache webserving and LGR. In this configuration Apache is able to use all the available processor cycles, so any cycles used by LGR have an effect on the Apache workload.

- Non-constrained storage environment with virtual-I/O-intensive Apache webserving and LGR. In this configuration Apache is limited by DASD response time, reducing its ability to consume processor cycles. So both processor cycles and storage are available for LGR.

- Storage-constrained environment with Apache webserving and LGR. In this configuration LGR is affected by the shortage of available storage. Because of this, LGR does not use much in the way of processor cycles, leaving plenty of cycles available for Apache.

Base measurements for the performance of each of these Apache workloads were obtained by running them without the relocating Linux guests.

Comparison measurements were obtained by running each of the Apache workloads again with the addition of the idle Linux guest relocations included. In this way the effect of adding live guest relocations to the existing Apache workloads could be evaluated.

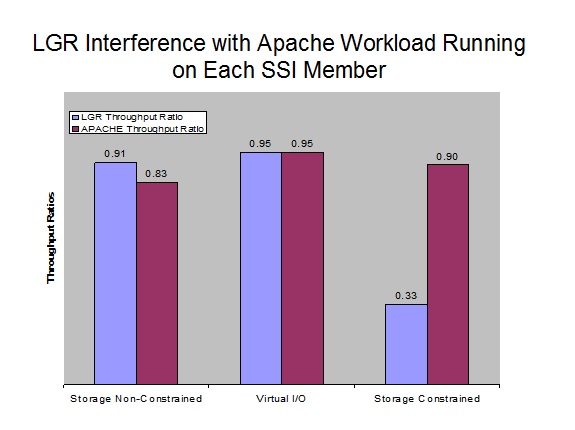

Figure 5 shows the throughput ratios for LGR and Apache with each of the three Apache workloads. It illustrates the impact to throughput for LGR and Apache in each case.

Figure 5. LGR Interference with an Existing Apache Workload Running on Each SSI Member.

For the non-constrained storage environment with LGR and Apache webserving, LGR has the ability to use all of the processor cycles that it desires. This results in the Apache workload being limited by the remaining available processor cycles. With this workload, Apache achieved only 83% of its base rate while LGR achieved 91% of its base rate.

For the non-constrained storage environment with LGR and virtual-I/O-intensive Apache webserving, neither the LGR workload nor the Apache workload was able to use all of the processor cycles and storage available, so the impact to each workload is expected to be minimal and uniform. Both the LGR workload and the Apache workload achieved 95% of their base rate.

For the storage-constrained environment with LGR and Apache webserving, LGR has throttling mechanisms that reduce interference with existing workloads. Because of this, LGR is expected to encounter more interference in this environment than the Apache workload. The LGR workload achieved only 33% of its base rate while the Apache workload achieved 90% of its base rate.

Summary and Conclusions

A number of factors affect live guest relocation quiesce time and relocation time. These factors include:

- The virtual machine size of the Linux guest being relocated;

- Existing work on the source system and/or destination system;

- Resource use characteristics of the applications and the running environment of the relocating guest;

- System constraints on the source system and/or destination system;

- Serial versus concurrent relocations;

- The ISFC logical link configuration between the source and destination systems.

Serial relocations provide the best overall results. This is the recommended method when using LGR.

Doing relocations concurrently can significantly increase quiesce times and relocation times.

If minimizing quiesce time is not a priority for the guest being relocated, using the IMMEDIATE option provides the most efficient relocation.

CPU-constrained environments generally will not limit live guest relocations, because LGR runs under SYSTEMMP. This gives LGR the highest priority for obtaining processor cycles.

In storage-constrained environments, existing work on the source and destination systems will take priority over live guest relocations. As a result, when storage constraints are present, LGR quiesce time and relocation time will typically be longer.