Diagnose X'9C' Support

Abstract

z/VM 5.3 includes support for diagnose X'9C' -- a new protocol for guest operating systems to notify CP about spin lock situations. It is similar to diagnose X'44' but allows specification of a target virtual processor. Diagnose X'9C' provided a 2% to 12% throughput improvement over diagnose X'44' for various measured Linux guest configurations having processor contention. No benefit is expected in configurations without processor contention.

Introduction

This section of the report provides performance results for diagnose X'9C', the new protocol that guest operating systems can use to notify CP about spin lock situations. Unlike the previous protocol, diagnose X'44', the new support identifies the virtual processor that holds the desired lock, which makes it more efficient. The new z/VM 5.3 diagnose X'9C' support is compared to the existing diagnose X'44' support in various constrained configurations. The benefit provided by diagnose X'9C' is generally proportional to the constraint level in the base measurement. No benefit is expected in configurations without processor contention.

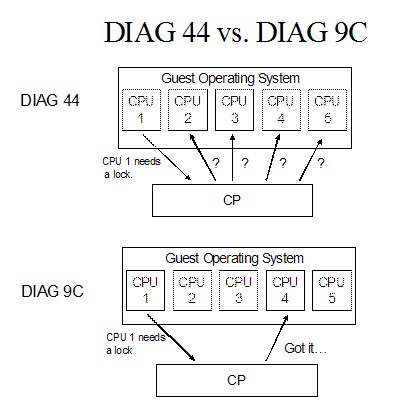

Figure 1 illustrates the difference between the diagnose X'44' and diagnose X'9C' locking mechanisms. With diagnose X'44', when a virtual processor needs a lock that is held by another virtual processor, CP is not told which virtual processor holds the lock, so it selectively schedules other virtual processors in an effort to find the one holding the lock so that it can release it. With diagnose X'9C', the virtual processor holding the required lock is specified, so CP schedules only that processor. This allows for more efficient management of spin lock situations.
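The difference can be illustrated with a toy Python model. This is not CP's actual scheduling algorithm: it simply assumes that, when CP does not know the lock holder, it tries the guest's other virtual processors in a fixed order, and it counts how many dispatches happen before the holder runs. The function name and the run-queue representation are illustrative assumptions.

```python
from collections import deque


def dispatches_until_holder_runs(run_queue, holder, directed):
    """Count virtual CPU dispatches before the lock holder gets to run.

    Toy model only: run_queue is an assumed order in which CP would
    consider the guest's other virtual CPUs.
    """
    if directed:
        # Diagnose X'9C': the spinning CPU names the holder,
        # so CP can schedule that processor directly.
        return 1
    # Diagnose X'44': CP does not know the holder, so it tries the
    # other virtual CPUs in turn until it happens to run the holder.
    queue = deque(run_queue)
    dispatched = 0
    while queue:
        vcpu = queue.popleft()
        dispatched += 1
        if vcpu == holder:
            return dispatched
    raise ValueError("lock holder is not in the run queue")
```

For example, with a run queue of five virtual CPUs and the holder fourth in line, the undirected case needs four dispatches while the directed case needs one. In a real system the cost of the undirected search depends on how busy the processors are, which is why the benefit of diagnose X'9C' tracks processor contention.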


Diagnose X'9C' is also available for z/VM 5.2 in the service stream via PTF UM31642.

z9 processors provide diagnose X'9C' support to operating systems running in an LPAR.

z9 processors also have a new feature, the SIGP Sense Running Status Order, that allows a guest operating system to determine whether the virtual processor holding a lock is currently dispatched on a real processor. This could provide additional benefit, since the guest does not need to issue a diagnose X'9C' if the virtual processor currently holding the lock is already dispatched.
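The decision a spinning processor makes when Sense Running Status is available can be sketched as follows. This is a minimal Python sketch under stated assumptions: `sense_running` and `diag_9c` are hypothetical callables standing in for the SIGP Sense Running Status Order and the diagnose X'9C' instruction, and the function name is illustrative.

```python
from typing import Callable, Optional


def on_lock_contention(
    holder_cpu: int,
    diag_9c: Callable[[int], None],
    sense_running: Optional[Callable[[int], bool]] = None,
) -> str:
    """Decide what to do when the wanted lock is held by holder_cpu.

    sense_running and diag_9c are hypothetical stand-ins for the
    SIGP Sense Running Status Order and diagnose X'9C'.
    """
    if sense_running is not None and sense_running(holder_cpu):
        # The holder is already dispatched on a real processor and
        # should release the lock soon: keep spinning, skip the diagnose.
        return "spin"
    # The holder is not running, or its status cannot be sensed:
    # ask the hypervisor to run the holder.
    diag_9c(holder_cpu)
    return "diag9c"
```

A guest that does not use the Sense Running Status Order corresponds to calling the function without `sense_running`, in which case every contention episode that reaches this point issues diagnose X'9C'.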

In addition to providing diagnose X'9C' support to guest operating systems, z/VM uses it for its own spin lock contention whenever the hypervisor on which it is running provides the support. z/VM also uses the SIGP Sense Running Status Order for its own lock contention whenever both the hardware and the hypervisor on which it is running support it.

z/OS provided support for diagnose X'9C' in Release 1.7, and the support is also available in the service stream for Release 1.6 via APAR OA12300. z/OS also uses the SIGP Sense Running Status Order when it is available.

Linux for System z provided support for diagnose X'9C' in SUSE SLES 10, but it does not use the SIGP Sense Running Status Order that is available on z9 processors.

Background

There have been several recent z/VM improvements for guest operating system spin locks.

- Extended Diagnose X'44' Fast Path describes the z/VM 5.2 extension of the diagnose X'44' fast path from 2-way to n-way and shows performance improvement results.

- Improved Processor Scalability describes the z/VM 5.3 improvements for the scheduler lock that is used by both diagnose X'44' and diagnose X'9C'.

In addition to z/VM changes, Linux for System z introduced a spin_retry variable in kernel 2.6.5-7.257, dated 2006-05-16; it is available in the SUSE SLES 9 security update announced 2006-05-24, in SUSE SLES 10, and in RHEL5. Before the spin_retry variable, a diagnose X'44' or diagnose X'9C' was issued on every pass through the spin lock loop. The spin_retry variable specifies the number of passes through the spin loop to complete before issuing a diagnose X'44' or diagnose X'9C'. The default value is 1000, but the value can be changed through /proc/sys/kernel/spin_retry.
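The effect of spin_retry on how often a guest calls the hypervisor can be sketched in Python. This is an illustration of the described behavior, not the Linux kernel code: a `threading.Lock` stands in for the guest spin lock, and `yield_to_hypervisor` is a hypothetical callable standing in for issuing diagnose X'44' or X'9C'.

```python
import threading


def spin_lock(lock: threading.Lock, spin_retry: int, yield_to_hypervisor) -> int:
    """Acquire lock, trying spin_retry times between hypervisor yields.

    Returns the number of yields issued. yield_to_hypervisor is a
    hypothetical stand-in for diagnose X'44' or diagnose X'9C'.
    """
    yields = 0
    # spin_retry == 0 is treated as one attempt per diagnose, which
    # mirrors the behavior before spin_retry existed.
    attempts_per_pass = max(spin_retry, 1)
    while True:
        # Spin without involving the hypervisor.
        for _ in range(attempts_per_pass):
            if lock.acquire(blocking=False):
                return yields
        # Spin budget exhausted: tell the hypervisor and spin again.
        yields += 1
        yield_to_hypervisor()
```

With the default of 1000, a lock that is released while the guest is still spinning never costs a diagnose at all; with 0, every failed attempt costs one, which matches the much higher diagnose X'44' rate reported for the spin_retry value of 0.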

Method

The Apache workload was used to create a z/VM 5.3 workload for this evaluation.

Although not demonstrated in this report section, diagnose X'9C' does not appear to provide a performance benefit over fast path diagnose X'44'. Consequently, demonstrating the benefit of diagnose X'9C' requires a base workload containing non-fast-path diagnose X'44's. Creating non-fast-path diagnose X'44's requires a set of n-way users that can create a high rate of diagnose X'44's plus a set of users that can create a large processor queue. Both conditions are necessary; otherwise a high percentage of the diagnose X'44's will be fast path.

Spin lock variations were created for the Apache workload by varying the number of client virtual processors, the number of servers, the number of server virtual processors, and the number of client connections to each server. Actual values for these parameters are shown in the data tables.

Information about the diagnose X'44' and diagnose X'9C' rates is provided on the PRIVOP screen (FCX104) in Performance Toolkit for VM. The fast path diagnose X'44' rate is provided on the SYSTEM screen (FCX102). Total rates and percentages shown in the tables are calculated from these values.
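The derivation of the table rows from these screen values can be sketched in Python. The exact counting rules of the Performance Toolkit screens are not given here, so this sketch assumes that the PRIVOP diagnose X'44' rate excludes fast path diagnoses (consistent with the "Total DIAGs (44,44FP,9C)" row label); the function and key names are hypothetical.

```python
def diag_summary(diag44_rate, diag44_fastpath_rate, diag9c_rate):
    """Derive table-style totals and percentages from per-second rates.

    Assumption: diag44_rate (PRIVOP, FCX104) counts only non-fast-path
    diagnose X'44's; diag44_fastpath_rate comes from SYSTEM (FCX102).
    """
    total_diag44 = diag44_rate + diag44_fastpath_rate
    total_diags = total_diag44 + diag9c_rate
    pct_fast_path = 100.0 * diag44_fastpath_rate / total_diag44 if total_diag44 else 0.0
    pct_diag9c = 100.0 * diag9c_rate / total_diags if total_diags else 0.0
    return {
        "total_diag44_rate": total_diag44,  # "Total DIAG 44 Rate" row
        "pct_fast_path": pct_fast_path,     # "% Fast Path" row
        "total_diags": total_diags,         # "Total DIAGs (44,44FP,9C)" row
        "pct_diag9c": pct_diag9c,           # "% DIAG 9C" row
    }
```

The inputs in the usage below are made-up illustrative rates, not measured values: `diag_summary(20, 80, 0)` yields a total diagnose X'44' rate of 100 with 80% fast path.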

Processor contention is indicated by the PLDV % empty and PLDV queue depth values on the PROCLOG screen (FCX144) in Performance Toolkit for VM. PLDV % empty represents the percentage of time the local processor vectors have no queue; lower values mean more processor contention. PLDV queue depth represents the number of VMDBKs in the local processor vector; larger values mean more processor contention. Values for the diagnose X'44' base measurements are shown in the data tables. Values for the diagnose X'9C' measurements are similar to the base measurement values and are not shown in the data tables.
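The two contention indicators can be illustrated with a short Python sketch. How Performance Toolkit actually samples the local processor vectors is not described here, so this assumes a simple list of sampled queue depths; the function name is hypothetical.

```python
from typing import Sequence, Tuple


def pldv_stats(queue_samples: Sequence[int]) -> Tuple[float, float]:
    """Return (PLDV % empty, average PLDV queue depth) from samples.

    Assumption: each sample is the number of VMDBKs queued on a local
    processor vector at one sampling instant.
    """
    if not queue_samples:
        raise ValueError("need at least one sample")
    empty = sum(1 for depth in queue_samples if depth == 0)
    pct_empty = 100.0 * empty / len(queue_samples)
    avg_depth = sum(queue_samples) / len(queue_samples)
    return pct_empty, avg_depth
```

For example, samples of 0, 0, 1 and 3 give 50% empty and an average depth of 1. As contention grows, fewer samples are empty and the average depth rises, which is the pattern seen in the base measurements.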

Most of the performance experiments were conducted in a z990 2084-320 LPAR configured with 30G central storage, 8G expanded storage, and 9 dedicated processors using 500 1M URL files in a non-paging environment. One set of the performance experiments was repeated in a z9 2094-733 LPAR configured with 30G central storage, 5G expanded storage, and 9 dedicated processors using 500 1M URL files in a non-paging environment.

Informal measurements, not included in this report, using a z/OS Release 1.7 guest with a z/OS paging workload produced a range of improvements similar to the Apache workload.

Results and Discussion

The Apache results are presented in four sections. The first three sections contain results from the z990 experiments, while the fourth section contains results from the z9 experiments. The first section has direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3. The second section has another set of direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3, but with different values for the Linux spin_retry variable, thus demonstrating the effect of this Linux improvement on the diagnose X'9C' benefit. The third section compares the z/VM 5.3 diagnose X'9C' support with the z/VM 5.2 diagnose X'9C' support and demonstrates the value of other z/VM 5.3 improvements. The fourth section has direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3 by processor model.

Improvement versus contention

Table 1 presents the Apache results for this section: direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3 using Linux SLES 10 SP1 for the clients and SLES 9 SP2 for the servers. Because the servers run SLES 9, they continue to issue diagnose X'44' rather than diagnose X'9C', so all of the diagnose X'9C' benefit comes from the clients. Between the first and second sets of data, client virtual processors were increased from 4 to 6 and server virtual processors from 1 to 2. The number of servers was doubled between each set of data. The improvement provided by diagnose X'9C' increased as the processor contention level increased: it was 2.1% for the first set of data, 9.8% for the second, and 11.1% for the third.

Table 1. Apache Diagnose X'9C' Workload Measurement Data

| DIAG 44 - Run ID | DIAG44GA | DIAG44GB | DIAG44GC |
|---|---|---|---|
| DIAG 9C - Run ID | DIAG9CGA | DIAG9CGB | DIAG9CGC |
| Virtual CPUs/client | 4 | 6 | 6 |
| Servers | 8 | 16 | 32 |
| Virtual CPUs/server | 1 | 2 | 2 |
| DIAG 44 - Total DIAG 44 Rate | 119868 | 110729 | 78542 |
| DIAG 44 - % Fast Path | 87 | 73 | 72 |
| DIAG 44 - PLDV % Empty | 62 | 34 | 22 |
| DIAG 44 - PLDV Queue | 1 | 2 | 3 |
| DIAG 9C - Total DIAGs (44,44FP,9C) | 100015 | 111904 | 74877 |
| DIAG 9C - % DIAG 9C | 100 | 86.8 | 86.2 |
| Percent Throughput Improvement with DIAG 9C | 2.1 | 9.8 | 11.1 |

Notes: 9 Dedicated Processors; Processor Model 2084-320; 30G Central Storage; 8G Expanded Storage; Apache Workload; 2 SLES 10 Clients; SLES 9 Servers; 1G Client Virtual Storage; 1.5G Server Virtual Storage; 1 MB URL file size; 1 Client Connection per Server; Default (1000) Linux spin_retry; z/VM 5.3.
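The relationship between contention and benefit in Table 1 can be checked with a few lines of Python. The values below are copied from the table; the ordering test is only a quick consistency check over three data points, not a statistical fit.

```python
# Base-run PLDV % empty and the DIAG 9C throughput improvement,
# copied from Table 1 (run sets A, B and C).
pldv_pct_empty = [62, 34, 22]
improvement_pct = [2.1, 9.8, 11.1]

# Order the runs from least contended (highest % empty) to most contended.
runs = sorted(zip(pldv_pct_empty, improvement_pct), reverse=True)

# True if every step toward more contention also raised the improvement.
improvement_grows_with_contention = all(
    later[1] > earlier[1] for earlier, later in zip(runs, runs[1:])
)
print(runs, improvement_grows_with_contention)
```

The check holds for all three sets: as PLDV % empty falls from 62 to 22, the improvement rises from 2.1% to 11.1%.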

Effect of Linux spin_retry variable

Table 2 presents the Apache results for this section: direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3 using Linux SLES 10 SP1 for both the clients and the servers, so the diagnose X'9C' benefit comes from both. Compared with the last set of data in the previous section, client virtual processors were increased from 6 to 9, server virtual processors from 2 to 6, and client connections to each server from 1 to 3. With this increased processor contention, the improvement provided by diagnose X'9C' for the first set of data in this section was 12.1%, higher than any of the percentages in the previous section.

Although we do not recommend changing the Linux spin_retry value, we evaluated the diagnose X'9C' benefit with a spin_retry value of 0 and observed an improvement of 32.2%. However, actual throughput for both measurements using a spin_retry value of 0 is much lower than for the corresponding measurements using the default spin_retry value of 1000.

Table 2. Full Support for Diagnose X'9C' and the Linux spin_retry Effect

| DIAG 44 - Run ID | DIAG44GI | DIAG44GH |
|---|---|---|
| DIAG 9C - Run ID | DIAG9CGE | DIAG9CGH |
| Linux spin_retry | 1000 | 0 |
| DIAG 44 - Total DIAG 44 Rate | 48306 | 257264 |
| DIAG 44 - % Fast Path | 58 | 83 |
| DIAG 44 - PLDV % Empty | 14 | 24 |
| DIAG 44 - PLDV Queue | 4 | 4 |
| DIAG 9C - Total DIAGs (44,44FP,9C) | 40980 | 101702 |
| DIAG 9C - % DIAG 9C | 100 | 100 |
| Percent Throughput Improvement with DIAG 9C | 12.1 | 32.2 |

Notes: 9 Dedicated Processors; Processor Model 2084-320; 30G Central Storage; 8G Expanded Storage; Apache workload; 2 SLES 10 Clients; 1G Client Virtual Storage; 9 Virtual CPUs/client; 32 SLES 10 Servers; 1.5G Server Virtual Storage; 6 Virtual CPUs/server; 1 MB URL file size; 3 Client Connections per Server; z/VM 5.3.

Benefit of other z/VM 5.3 performance improvements

Table 3 presents the Apache results for this section: a comparison between z/VM 5.3 and z/VM 5.2 for a diagnose X'9C' workload. The z/VM 5.3 measurement is the first set of data from the previous section and uses Linux SLES 10 SP1 for both the clients and the servers. Clients have 9 virtual processors and servers have 6 virtual processors. The results with z/VM 5.3 improved 10.8% over z/VM 5.2, demonstrating the value of other z/VM 5.3 performance improvements, especially the scheduler lock improvement.

Table 3. Performance Comparison: z/VM 5.3 vs. z/VM 5.2

| z/VM 5.2 - Run ID | DIAG9CGJ |
|---|---|
| z/VM 5.3 - Run ID | DIAG9CGE |
| z/VM 5.2 - Total DIAGs (44,44FP,9C) | 38514 |
| z/VM 5.2 - % DIAG 9C | 100 |
| z/VM 5.3 - Total DIAGs (44,44FP,9C) | 40980 |
| z/VM 5.3 - % DIAG 9C | 100 |
| Percent Throughput Improvement with z/VM 5.3 | 10.8 |

Notes: 9 Dedicated Processors; Processor Model 2084-320; 30G Central Storage; 8G Expanded Storage; Apache workload; 2 SLES 10 Clients; 1G Client Virtual Storage; 9 Virtual CPUs/client; 32 SLES 10 Servers; 1.5G Server Virtual Storage; 6 Virtual CPUs/server; 1 MB URL file size; 3 Client Connections per Server; z/VM 5.3.

Effect of processor model

Table 4 presents the Apache results for this section: direct comparisons of diagnose X'9C' and diagnose X'44' on z/VM 5.3 using Linux SLES 10 SP1 for both the clients and the servers, so the diagnose X'9C' benefit comes from both. The z990 measurements are from a previous section. The z9 measurements use the same workload and a nearly identical configuration. Apart from the processor model, the only configuration difference is 3G less expanded storage (5G instead of 8G), which is not expected to affect the results because there is no expanded storage activity during the measurements.

The improvement provided by diagnose X'9C' on the z9 processor was 9.9%, lower than the 12.1% provided on the z990 processor. Because the z9 base measurement in Table 4 shows a 26% higher diagnose X'44' rate than the z990 measurement at an equivalent contention level, one might expect a larger percentage improvement on the z9 processor. However, measurement details not included in the table show that overall throughput increased 61% from the z990 measurement to the z9 measurement. Since the diagnose X'44' rate grew less than throughput, diagnose X'44' is a smaller percentage of the workload on the z9, and a smaller percentage improvement should therefore be expected there. Because Linux for System z does not use the SIGP Sense Running Status Order on z9 processors, that feature is not a factor in these results.
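The argument above can be made concrete with simple arithmetic. The sketch below uses the diagnose X'44' rates from Table 4 and the 61% throughput increase quoted in the text; the unrounded throughput ratio is not published here, so the result is approximate.

```python
# Diagnose X'44' rates for the base runs, from Table 4.
z990_diag44_rate = 48306   # DIAG44GI, 2084-320
z9_diag44_rate = 61115     # DIAG44XA, 2094-733
# Overall throughput ratio z9 / z990 (the rounded 61% increase from the text).
throughput_ratio = 1.61

rate_ratio = z9_diag44_rate / z990_diag44_rate   # about 1.265
per_work_ratio = rate_ratio / throughput_ratio   # about 0.786

print(f"DIAG 44 rate change:          {rate_ratio - 1:+.1%}")
print(f"DIAG 44 per unit work change: {per_work_ratio - 1:+.1%}")
```

The diagnose X'44' rate rises about 26.5%, but per unit of work the z9 issues roughly 21% fewer diagnose X'44's than the z990, which is consistent with the smaller percentage improvement observed on the z9.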

Table 4. Benefit by processor model

| DIAG 44 - Run ID | DIAG44GI | DIAG44XA |
|---|---|---|
| DIAG 9C - Run ID | DIAG9CGE | DIAG9CXA |
| Processor Model | 2084-320 | 2094-733 |
| Expanded Storage | 8G | 5G |
| DIAG 44 - Total DIAG 44 Rate | 48306 | 61115 |
| DIAG 44 - % Fast Path | 58 | 55 |
| DIAG 44 - PLDV % Empty | 14 | 14 |
| DIAG 44 - PLDV Queue | 4 | 5 |
| DIAG 9C - Total DIAGs (44,44FP,9C) | 40980 | 50898 |
| DIAG 9C - % DIAG 9C | 100 | 100 |
| Percent Throughput Improvement with DIAG 9C | 12.1 | 9.9 |

Notes: 9 Dedicated Processors; 30G Central Storage; Apache workload; 2 SLES 10 Clients; 1G Client Virtual Storage; 9 Virtual CPUs/client; 32 SLES 10 Servers; 1.5G Server Virtual Storage; 6 Virtual CPUs/server; 1 MB URL file size; 3 Client Connections per Server; z/VM 5.3.

Summary and Conclusions

Diagnose X'9C' provided a 2% to 12% improvement over diagnose X'44' for various constrained configurations.

Diagnose X'9C' shows a higher percentage improvement over diagnose X'44' when the Linux for System z spin_retry value is reduced, but overall results are best with the default spin_retry value.

z/VM 5.3 provided a 10.8% improvement over z/VM 5.2 for a diagnose X'9C' workload.