Enhanced Large Real Storage Exploitation

This section summarizes new measurements designed to demonstrate the performance benefit of the Enhanced Large Real Storage Exploitation support. This support includes:

- The CP virtual address space no longer has a direct identity mapping to real storage

- The CP control block that maps real storage (frame table) was moved above 2G

- Some additional CP modules were changed to 64-bit addressing

- Some CP modules (including those involved in Linux virtual I/Os) were changed to use access registers to reference guest pages that reside above 2G

These changes remove the need for CP to move user pages below the 2G line, thereby providing a large benefit to workloads that were previously constrained below the 2G line. Removing this constraint lets z/VM 5.2.0 use large amounts of real storage.

For more information about these improvements, refer to the Performance Improvements section. For guidelines on detecting a below-2G constraint, refer to the CP Regression section.

The benefit of these enhancements will be demonstrated using three separate sets of measurements. The first set will show the benefit of z/VM 5.2.0 for a below-2G-constrained workload in a small configuration with three processors and 3G of real storage. The second set will show that z/VM 5.2.0 continues to scale as workload, real storage, and processors are increased. The third set will demonstrate that all 128G of supported real storage can be efficiently supported.

Improvements in 3 GB of Real Storage

The Apache workload was used to create a z/VM 5.1.0 below-2G-constrained workload in 3G of real storage and to demonstrate the z/VM 5.2.0 improvements.

The following table contains the Apache workload parameter settings.

Apache workload parameters for measurements in this section

| Parameter | Value |
| --- | --- |
| Server virtual machines | 3 |
| Client virtual machines | 2 |
| Client connections per server | 1 |
| Number of 1M files | 5000 |
| Location of the files | Xstor MDC |
| Server virtual storage | 1024M |
| Server virtual processors | 1 |
| Client virtual processors | 1 |

This workload is a good example of the basic value of these enhancements. It demonstrates that a z/VM 5.1.0 below-2G constraint can occur even without a large amount of storage above 2G.

Here is a summary of the z/VM 5.2.0 results compared to the z/VM 5.1.0 measurement.

- Transaction rate increased 13%

- Below-2G paging was eliminated

- Xstor page rate decreased by 89%

- User resident pages above 2G increased by 770%

- CP microseconds (µsec) per transaction decreased by 23%

- Virtual µsec per transaction decreased by 4.6%

- Total µsec per transaction decreased by 12%

The following table compares z/VM 5.1.0 and z/VM 5.2.0 measurements for this workload.

Apache workload selected measurement data

| z/VM Release | 5.1.0 | 5.2.0 |
| --- | --- | --- |
| Run ID | 3GBS9220 | 3GBTA190 |
| Tx rate | 54.591 | 62.064 |
| Below-2G Page Rate | 83 | 0 |
| Xstor Total Rate | 29327 | 3209 |
| Resident Pages above 2G | 28373 | 246924 |
| Resident Pages below 2G | 503540 | 508752 |
| Total µsec/Tx | 62050 | 54490 |
| CP µsec/Tx | 25674 | 19769 |
| Emul µsec/Tx | 36377 | 34721 |

Configuration: 2064-116; 3 dedicated processors; 3G central storage; 8G expanded storage; 6 connections

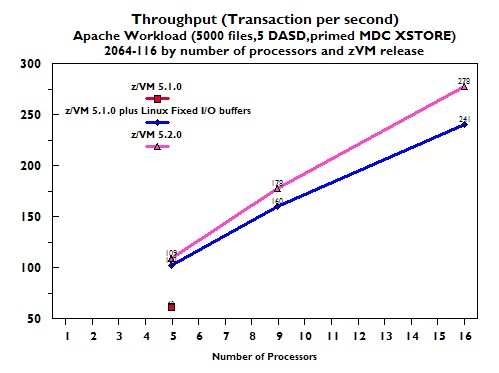
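The percentages in the summary above can be reproduced from the values in the measurement table. The following is a minimal sketch of that arithmetic, not part of the original measurements; the helper name `pct_change` and the dictionary layout are illustrative assumptions. The computed transaction rate increase is about 13.7%, which suggests that the summary figures may be truncated rather than rounded.

```python
def pct_change(old, new):
    """Return the percentage change from old to new (positive means an increase)."""
    return (new - old) / old * 100.0

# (z/VM 5.1.0 value, z/VM 5.2.0 value), copied from the 3G measurement table.
measurements = {
    "Tx rate": (54.591, 62.064),
    "Xstor Total Rate": (29327, 3209),
    "Resident Pages above 2G": (28373, 246924),
    "Total usec/Tx": (62050, 54490),
    "CP usec/Tx": (25674, 19769),
    "Emul usec/Tx": (36377, 34721),
}

changes = {name: pct_change(old, new) for name, (old, new) in measurements.items()}

for name, change in changes.items():
    print(f"{name:26s} {change:+8.1f}%")
```

The same helper applies unchanged to the 5-way, 16-way, and 128G tables later in this section.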

Scaling by Number of Processors

The Apache workload was used to create a z/VM 5.1.0 below-2G-constrained workload to demonstrate the z/VM 5.2.0 relief and to demonstrate that z/VM 5.2.0 would scale correctly as processors are added.

Since, in this example, the z/VM 5.1.0 below-2G constraint is created by Linux I/O, constraint relief can alternatively be provided by the Linux Fixed I/O Buffers feature. This minimizes the number of guest pages Linux uses for I/O at the cost of additional data moves inside the guest.

Preliminary z/VM 5.1.0 experiments, not included in this report, showed that a 5-way was the optimal configuration for this workload. Since the objective of this study was to show that z/VM 5.2.0 scales beyond z/VM 5.1.0, a 5-way was chosen as the starting point for this processor scaling study. z/VM 5.1.0 measurements of this workload with 9 processors or 16 processors could not be completed successfully because the below-2G-line constraint caused response times greater than the Application Workload Modeler (AWM) timeout value.

The following table contains the Apache workload parameter settings.

Apache workload parameters for measurements in this section

| | 5-way | 9-way | 16-way |
| --- | --- | --- | --- |
| Client virtual machines | 3 | 6 | 9 |
| Server virtual machines | 10 | 12 | 12 |

Common parameters: 5000 files; 1 megabyte URL file size; Xstor MDC is primary location of the files; 1024M server virtual storage size; 1 server virtual processor; 1 client virtual processor; 1 client connection per server

Figure 1 shows a graph of transaction rate for all the measurements in this section.


Here is a summary of the z/VM 5.2.0 results compared to the z/VM 5.1.0 measurements.

- With 5 processors, z/VM 5.2.0 provided a 73% increase in transaction rate over z/VM 5.1.0 with "Fixed I/O Buffers" OFF

- With 5 processors, z/VM 5.2.0 provided a 6.5% increase in transaction rate over z/VM 5.1.0 with "Fixed I/O Buffers" ON

- With 9 processors, z/VM 5.2.0 provided an 11% increase in transaction rate over z/VM 5.1.0 with "Fixed I/O Buffers" ON

- With 16 processors, z/VM 5.2.0 provided a 15% increase in transaction rate over z/VM 5.1.0 with "Fixed I/O Buffers" ON

On z/VM 5.2.0, as we increased the number of processors in the partition, transaction rate increased appropriately too. When we moved from five processors to nine processors, perfect scaling would have forecast an 80% increase in transaction rate, but we achieved a 72% increase, or a scaling efficiency of 90%. Similarly, when we moved from five processors to sixteen processors, we achieved a scaling efficiency of 73%. Both of these scaling efficiencies are better than the corresponding efficiencies we obtained on z/VM 5.1.0 with fixed Linux I/O buffers.
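The scaling efficiency quoted for the move from five to nine processors can be reproduced as the observed percentage increase divided by the increase that perfect scaling would forecast. The sketch below assumes that definition, which is inferred from the 80%, 72%, and 90% figures in the paragraph above; the function name `scaling_efficiency` is hypothetical.

```python
def scaling_efficiency(base_cpus, new_cpus, observed_increase_pct):
    """Return (ideal increase %, efficiency %) for a processor scaling step.

    Efficiency is taken to be observed increase / ideal increase, an
    assumption inferred from the five-to-nine processor figures in the text.
    """
    ideal_increase_pct = (new_cpus / base_cpus - 1.0) * 100.0
    efficiency_pct = observed_increase_pct / ideal_increase_pct * 100.0
    return ideal_increase_pct, efficiency_pct

# Five to nine processors, with the 72% observed increase stated in the text.
ideal, efficiency = scaling_efficiency(5, 9, 72.0)
print(f"ideal increase: {ideal:.0f}%, scaling efficiency: {efficiency:.0f}%")
```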

Here is a summary of the 5-way z/VM 5.2.0 results compared to the 5-way z/VM 5.1.0 measurement with "Fixed I/O Buffers" OFF.

- Transaction rate increased 73%

- Below-2G paging was eliminated

- Xstor/DASD paging was eliminated

- User resident pages above 2G increased by 3796%

- CP µsec per transaction decreased by 38.2%

- Virtual µsec per transaction decreased by 13.8%

- Total µsec per transaction decreased by 24.1%

Here is a summary of the 5-way z/VM 5.2.0 results compared to the 5-way z/VM 5.1.0 measurement with "Fixed I/O Buffers" ON.

- Transaction rate increased 6.5%

- Below-2G paging was eliminated

- Xstor/DASD paging was eliminated

- User resident pages above 2G increased by 51%

- CP µsec per transaction decreased by 2.1%

- Virtual µsec per transaction decreased by 7.0%

- Total µsec per transaction decreased by 5.3%

The following table compares z/VM 5.1.0, z/VM 5.1.0 with "Fixed I/O Buffers", and z/VM 5.2.0 for the 5-way measurements.

Apache workload selected measurement data

| z/VM Release | 5.1.0 | 5.1.0 | 5.2.0 |
| --- | --- | --- | --- |
| Fixed I/O Buffers | No | Yes | No |
| Fixed I/O Buffers tuning | N/A | Default | N/A |
| Run ID | FIXS9222 | FIXS9223 | FIXT3290 |
| Tx rate | 62.734 | 102.444 | 109.083 |
| Below-2G Page Rate | 1983 | 118 | 0 |
| Xstor Total Rate | 48970 | 6562 | 0 |
| Xstor Migr Rate | 3604 | 0 | 0 |
| Total Pg to/from DASD | 8578 | 150 | 0 |
| Resident Pages above 2G | 76464 | 1966146 | 2979288 |
| Resident Pages below 2G | 463104 | 457132 | 182 |
| Total µsec/Tx | 70726 | 56676 | 53654 |
| CP µsec/Tx | 30079 | 18993 | 18598 |
| Emul µsec/Tx | 40647 | 37683 | 35055 |

Configuration: 2064-116; 5 dedicated processors; 30G central storage; 8G expanded storage; 30 connections

Here is a summary of the 16-way z/VM 5.2.0 results compared to the 16-way z/VM 5.1.0 measurement with "Fixed I/O Buffers" ON.

- Transaction rate increased 15.3%

- Below-2G paging was eliminated

- Xstor/DASD paging was eliminated

- User resident pages above 2G increased by 57%

- CP µsec per transaction decreased by 9.5%

- Virtual µsec per transaction decreased by 11.2%

- Total µsec per transaction decreased by 10.6%

The following table compares z/VM 5.1.0 and z/VM 5.2.0 for the 16-way measurements.

Apache workload selected measurement data

| z/VM Release | 5.1.0 | 5.2.0 |
| --- | --- | --- |
| Fixed I/O Buffers | Yes | No |
| Fixed I/O Buffers tuning | Default | N/A |
| Run ID | FIXS9229 | FIXT3292 |
| Tx rate | 241.568 | 278.414 |
| Below-2G Page Rate | 933 | 0 |
| Xstor Total Rate | 19078 | 0 |
| Xstor Migr Rate | 0 | 0 |
| Total Pg to/from DASD | 1036 | 0 |
| Resident Pages above 2G | 2473126 | 3890865 |
| Resident Pages below 2G | 431460 | 198 |
| Total µsec/Tx | 73040 | 65291 |
| CP µsec/Tx | 25720 | 23288 |
| Emul µsec/Tx | 47319 | 42003 |

Configuration: 2064-116; 16 dedicated processors; 48G central storage; 14G expanded storage; 108 connections

Scaling to 128G of Storage

The Apache workload was used to create a z/VM 5.2.0 storage usage workload and to demonstrate that z/VM 5.2.0 could fully utilize all 128G of supported real storage. Real storage was overcommitted in the 128G measurement and Xstor paging was the factor used to prove that all 128G was actually being used. A base measurement using the same Apache workload parameters was also completed in a much smaller configuration to prove that the transaction rate scaled appropriately. There are no z/VM 5.1.0 measurements of this Apache performance workload scenario.

Since the purpose of this workload is to use real storage, not to create a z/VM 5.1.0 below-2G constraint, the server virtual machines were defined large enough so that nearly all of the URL files could reside in the Linux page cache. The number of servers controlled the amount of real storage used and the number of clients controlled the total number of connections necessary to use all the processors.

The following table contains the Apache workload parameter settings.

Apache workload parameters for measurements in this section

| | 22G | 128G |
| --- | --- | --- |
| Client virtual machines | 2 | 7 |
| Server virtual machines | 2 | 13 |

Common parameters: 10000 files; 1 megabyte URL file size; 10240M server virtual storage size; Linux page cache is primary location of the files; 1 server virtual processor; 1 client virtual processor; 1 client connection per server

The results show that all 128G of storage is being used, with heavy Xstor paging, and that all processors are at 100.0% utilization in the steady-state intervals. Xstor paging increased by a higher percentage than other factors because real storage happens to be more overcommitted in the 128G measurement than in the 22G base measurement. Total µsec per transaction remained nearly identical (19997 versus 19990) because lower CP µsec per transaction offset higher virtual µsec per transaction. Transaction rate scaled at 99% efficiency relative to the number of processors, which seems sufficient to prove efficient utilization of 128G.
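One way to check the 99% figure is to compare transaction rate per processor in the two measurements. The sketch below uses the processor counts and transaction rates from the comparison table in this section; the dictionary layout and variable names are illustrative assumptions.

```python
# Processor counts and transaction rates from the 22G and 128G measurements.
runs = {
    "22G": {"processors": 2, "tx_rate": 101.291},
    "128G": {"processors": 11, "tx_rate": 553.336},
}

# Transactions per second delivered by each processor.
per_cpu = {name: run["tx_rate"] / run["processors"] for name, run in runs.items()}

# Efficiency: per-processor throughput at 128G relative to the 22G base.
efficiency = per_cpu["128G"] / per_cpu["22G"] * 100.0

for name, rate in per_cpu.items():
    print(f"{name:5s} {rate:.2f} tx/sec per processor")
print(f"scaling efficiency: {efficiency:.1f}%")
```

Each processor delivers roughly 50 transactions per second in both configurations, which is consistent with the nearly identical total µsec per transaction.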

Here is a summary of the 128G results compared to the 22G base results.

- Transaction rate increased 446%

- Xstor page rate increased by 1716%

- User resident pages above 2G increased by 531%

- CP µsec per transaction decreased by 10%

- Virtual µsec per transaction increased by 7%

- Total µsec per transaction remained nearly identical

The following table compares the 22G and the 128G measurements.

Apache storage usage workload selected measurement data

| Measurement | 22G | 128G |
| --- | --- | --- |
| Processors | 2 | 11 |
| Total Real (MB) | 22528 | 131072 |
| Total CP Xstor (MB) | 16384 | 31744 |
| Total connections | 4 | 91 |
| Run ID | 128T8157 | 128T8154 |
| Tx rate | 101.291 | 553.336 |
| Xstor Total Rate | 723 | 13139 |
| Resident Pages above 2G | 5187091 | 32755866 |
| Resident Pages below 2G | 6749 | 57618 |
| Total µsec/Tx | 19997 | 19990 |
| CP µsec/Tx | 8267 | 7436 |
| Emul µsec/Tx | 11731 | 12555 |
| PGMBK Frames | 46744 | 282k |
| SXS Available Pages | 516788 | 515725 |

Configuration: 2094-738; z/VM 5.2.0

z/VM 5.2.0 should be able to scale other applications to this level unless limited by some other factor.

Page Management Blocks (PGMBKs) still must reside below 2G and will become a limiting factor before in-use virtual storage reaches 256G. For the 128G measurement, about 53% of the below-2G storage is being used for PGMBKs. See the Performance Considerations section for further discussion.
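The share of below-2G storage occupied by PGMBKs can be estimated from the PGMBK frame counts in the table. This is a rough sketch under two assumptions that the table does not state: a frame is 4K bytes, and the "282k" entry is read as approximately 282,000 frames. The constant and variable names are illustrative.

```python
FRAME_SIZE = 4096                                # bytes per frame (assumed 4K)
BELOW_2G_FRAMES = (2 * 1024**3) // FRAME_SIZE    # frames below the 2G line

# PGMBK frame counts from the table; "282k" is approximated as 282,000.
pgmbk_frames = {"22G": 46_744, "128G": 282_000}

# Percentage of below-2G frames occupied by PGMBKs in each measurement.
pgmbk_share = {
    name: frames / BELOW_2G_FRAMES * 100.0
    for name, frames in pgmbk_frames.items()
}

for name, share in pgmbk_share.items():
    print(f"{name:5s} PGMBKs use about {share:.1f}% of below-2G frames")
```

Under these assumptions the 128G measurement comes out at just under 54%, in line with the "about 53%" stated above.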

Available pages in the System Execution Space (SXS) do not appear to be approaching any limitation: the number of available pages barely changed between the 22G and the 128G measurements (516788 versus 515725). In both measurements, more than 90% of the SXS pages are still available.