Warning Track Interruptions

Abstract

In z/VM 7.3 with the PTF for APAR VM66678 applied, z/VM exploits a PR/SM feature called the Warning Track Interruption Facility (WTIF). Exploiting this feature helps keep work from being left stranded on logical processors PR/SM has undispatched. In our measurements on a busy CPC, the PTF let z/VM convert nearly all of the harmful "stranded" time into a kind of wait that does not harm the workload. CPU cost for the handshaking was not measurable. How a given workload responds to no longer being stranded is very much a function of the workload.

Customers installing the CP PTF will want also to install the Perfkit PTF for its APAR VM66709. This PTF lets Perfkit calculate FCX304 PRCLOG %Susp correctly when WTIF is being exploited.

Introduction

In z/VM 7.3 with the PTF for APAR VM66678 applied, the z/VM Control Program (hereafter, "CP") exploits a PR/SM feature called the Warning Track Interruption Facility (WTIF). This facility lets an operating system running in a partition register with PR/SM to receive a "warning" interruption just before PR/SM undispatches a logical processor of the partition. The interruption arrives on the processor whose undispatch is imminent. The purpose of the warning interrupt is to give the operating system an opportunity to undispatch or clean up any work it has in progress on the imminently-dark logical processor, so that said work does not get stranded on a logical processor PR/SM has decided not to run for a while.

WTIF is available on all Z Systems processors IBM supports. Operating systems running in LPARs will always sense WTIF is present. However, CP does not virtualize WTIF for its guests. An operating system running in a guest of z/VM will sense WTIF is absent.

Two new externals control whether the exploitation is enabled. In the system configuration file, a new clause lets the installation select whether exploitation is enabled. If the new clause is absent, the exploitation is enabled. Also, a new operand on CP SET SRM can be used to toggle exploitation without taking an IPL.

Background

Operation

In a vertical-mode partition some logical processors might be entitled to less than a whole physical processor's worth of computing power. Subentitled logical processors are called vertical-mediums (VMs). The ability of a VM to run beyond its entitlement is gated by whether some other logical processor in the type-pool is using less than its entitlement. Thus we can expect that PR/SM might sometimes have to hold back a VM from using beyond its entitlement so as to let other logical processors use their entitlements. The situation is even worse for unentitled logical processors, called vertical-lows (VLs). Such logical processors are entitled to nothing and so will practically stall whenever PR/SM has no unused entitlement available to run them.

In both of the above undispatch cases, if the partition has enrolled for WTIF, PR/SM will deliver an interrupt to the logical processor it is about to undispatch, thereby telling the operating system that the logical processor is about to become unpowered. The operating system is to respond by undispatching any work it has in progress on the logical processor, returning said work to the operating system's dispatch queue, and then calling a PR/SM wait API on the darkening processor to indicate to PR/SM that the operating system is ready for said logical processor to become dark. This behavior of the operating system prevents work from becoming stuck on an unpowered logical processor, instead giving that work an opportunity to be dispatched somewhere else. To turn the lights back on, PR/SM simply returns to the caller, and the operating system goes on its way, usually in a beeline to its own dispatcher to pick up a new piece of work.
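The handshake just described can be sketched as a toy model. This is only an illustration of the sequence of steps, not CP's implementation. The class, the function names, and the work-unit label are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Optional


@dataclass
class LogicalProcessor:
    """Toy model of one logical processor as the operating system sees it."""
    cpu_id: int
    running: Optional[str] = None  # work unit currently in dispatch, if any
    in_wait_api: bool = False      # True while parked in the PR/SM wait call


def on_warning_interrupt(lp: LogicalProcessor, dispatch_queue: Deque[str]) -> None:
    """React to a warning interrupt on a logical processor about to go dark."""
    # Step 1: undispatch in-progress work and return it to the dispatch queue,
    # so that some other logical processor can pick it up.
    if lp.running is not None:
        dispatch_queue.append(lp.running)
        lp.running = None
    # Step 2: enter the wait API (modeled here as a flag) to tell PR/SM
    # that this logical processor is ready to go dark.
    lp.in_wait_api = True


def on_wait_api_return(lp: LogicalProcessor, dispatch_queue: Deque[str]) -> None:
    """PR/SM has returned from the wait call: the lights are back on."""
    lp.in_wait_api = False
    # Head straight for the dispatcher to pick up a new piece of work.
    if dispatch_queue:
        lp.running = dispatch_queue.popleft()
```

Note that the model only returns the work to the queue. Whether another logical processor is free to run it is a separate question.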

We should point out that CP can receive a warning interrupt on a logical processor only if the logical processor is running enabled for interrupts. Generally speaking, CP itself runs disabled for interrupts, enabling only when it sends a guest VCPU into SIE. Thus the kind of work likely to be rescued from a darkening logical processor is guest work. CP work generally will not be rescued from a darkening logical processor because said work generally runs disabled. That said, there are certain points in some long-running code paths where CP intentionally opens an interrupt window, so it can take an interrupt. If a warning interrupt is waiting, opening such a window will result in the interrupt being taken and processed, thereby saving the CP work from becoming stranded.

We should further point out that work that gets "rescued" from a darkening logical processor will not necessarily keep running immediately. When the PTF undispatches work from a logical processor that is going dark, all the PTF does is return that work to a CP dispatcher queue, so it can be run somewhere else when conditions permit. The point of the PTF is to remove a reason why work cannot advance, not to guarantee that work will advance. This is why whether the PTF will benefit a workload is very much a property of the workload, and of the configuration, and of the utilization extant in the configuration.

We should also point out that the PTF does not cause PR/SM to deliver more CPU power to the partition than it otherwise would. PR/SM is still going to turn out the lights on VMs and VLs now and then. All the PTF does is let CP gracefully handle those lights-off periods. Where previously PR/SM's dispatch behavior could leave guest work stranded on an unpowered logical processor, with the PTF CP has the chance to return that work to its dispatcher before the logical processor goes dark.

The clients who will find the PTF most useful are the ones who are trying to run partitions equipped with VMs and VLs. Those logical processors are the ones most exposed to PR/SM undispatch. Said undispatches are more likely to be prolonged when the CPC is particularly busy, or, more to the point, when all the partitions are using most or all of their entitlements. In such situations there is little to no power available to run a VM beyond its entitlement or to run a VL at all. These are the situations where the PTF will be most useful, because the PTF will prevent guest work from being stranded on these erratically powered logical processors.

Externals

A new CP environment variable, CP.FUNCTION.SRM.WARNINGTRACK, has value 1 if the CP PTF is applied.

The PTF equips CP with a new command, CP SET SRM WARNINGTRACK {OFF|ON}. When WARNINGTRACK is set on, CP exploits WTIF in the partition. When it is set off, CP does not.

The PTF also equips CP to recognize a new statement, SRM WARNINGTRACK {OFF|ON}, in the system configuration file. If the statement is absent, CP comes up with exploitation enabled.

The PTF provides new fields in monitor. D0 R2 MRSYTPRP is updated to include cumulative counts of interesting happenings related to WTIF and to include accumulated time spent in the PR/SM wait API. D0 R19 MRSYTSYG is updated to include cumulative counts of the times SYSTEM and SYSTEMMP work were rescued from a darkening logical processor. D1 R4 MRMTRSYS includes indicators of whether the underlying PR/SM supports WTIF, and whether the running CP contains the exploitation PTF, and whether said exploitation is turned on. D2 R7 MRSCLSRM is cut whenever an operator command changes the setting of SRM WARNINGTRACK. D4 R2 MRUSELOF and D4 R3 MRUSEACT contain cumulative counts of the number of times the guest VCPU was rescued from a darkening logical processor.

Perfkit Considerations

Users of z/VM Performance Toolkit will recognize FCX304 PRCLOG %Susp as the column where a logical processor's involuntary wait, or "suspend time", is reported. Perfkit clients having installed the CP PTF will also want to install the PTF for Perfkit APAR VM66709. Without said PTF applied, Perfkit FCX304 PRCLOG %Susp includes the harmless wait time spent in the PR/SM wait API, which is NOT what we want to see. With the Perfkit PTF applied, %Susp does not include the time spent in the PR/SM wait API, which is what we want. In this way %Susp remains an indicator of potential harm.
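The relationship between the two forms of %Susp can be sketched as follows. This is our reading of the description above, not Perfkit's actual code. The function and parameter names are hypothetical and the sample percentages are made up for illustration.

```python
def susp_pct(not_dispatched_pct: float, wait_api_pct: float,
             perfkit_ptf_applied: bool) -> float:
    """Return the %Susp value a report would show for one logical processor.

    not_dispatched_pct: share of the interval the logical processor wanted to
        run but PR/SM was not running it, including any time spent in the
        PR/SM wait API (assumed definition).
    wait_api_pct: share of the interval spent in the PR/SM wait API.
    """
    if perfkit_ptf_applied:
        # Harmless wait-API time is excluded, so only potentially
        # stranding time remains.
        return max(0.0, not_dispatched_pct - wait_api_pct)
    # Without the Perfkit PTF the harmless wait time is lumped in.
    return not_dispatched_pct
```

For example, with 60% of an interval undispatched and 55% of it spent in the wait API, the sketch reports 60.0 without the Perfkit PTF and 5.0 with it.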

Method

We devised an experiment to see the effect of CP exploiting WTIF. The experiment did not measure change in ETR or ITR. Rather, it measured the degree to which the PTF changes harmful, stranding waits, called "suspend time", into innocuous waits that will not hold back the partition's workload.

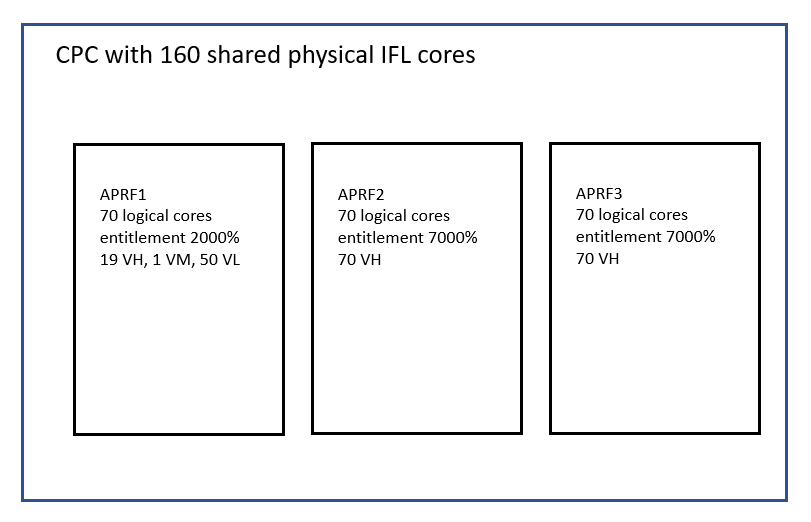

Figure 1 shows the CPC and partitions used for the experiment. The CPC had 160 shared physical IFL cores. All three LPARs were shared LPARs. LPARs APRF2 and APRF3 each had 70 logical IFL cores and weight 70. LPAR APRF1 had 70 logical IFL cores and weight 20. These weights gave the LPARs entitlements of 2000%, 7000%, and 7000% respectively. Thus APRF1 had 19 VH, 1 VM, and 50 VL, while APRF2 and APRF3 each had 70 VH.

Figure 1. Configuration of CPC.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs configured as illustrated.
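The entitlements quoted for Figure 1 follow from the weights by simple proportion. A minimal sketch of the arithmetic, assuming each shared LPAR's entitlement is its share of total weight applied to the shared physical cores (the function name is ours):

```python
def entitlements(weights: dict, physical_cores: int) -> dict:
    """Return each LPAR's entitlement in percent of one core (100 = 1 core)."""
    total_weight = sum(weights.values())
    return {
        name: 100 * physical_cores * weight / total_weight
        for name, weight in weights.items()
    }


# Weights and core count from the experiment's configuration.
weights = {"APRF1": 20, "APRF2": 70, "APRF3": 70}
result = entitlements(weights, physical_cores=160)
# result == {"APRF1": 2000.0, "APRF2": 7000.0, "APRF3": 7000.0}
```

The sketch stops at entitlement. How PR/SM then divides an entitlement into VH, VM, and VL logical processors is not modeled here.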

The workload was built in such a way that APRF2 and APRF3 would "surge" in unison so as either to use their entitlements entirely or to be completely idle. In each of those two LPARs the surging changed polarity at the top of the minute. CPU consumption was done with CPU burners. With this configuration, in alternating minutes APRF2 and APRF3 in unison either used all of their entitlement or used none of it. We called a period when APRF2 and APRF3 were running flat-out a hot period. A cold period was one when APRF2 and APRF3 were completely idle.

In APRF1 we ran a CPU-burner workload large enough to use 70 cores' worth of power if PR/SM would but supply the power. Owing to the surging being done by the other two LPARs, in the cold minutes APRF1 would have all the power it wanted, while in the hot minutes APRF1 would be held back to its entitlement.
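The steady-state utilization we expected in each kind of period can be worked out from the entitlements. The sketch below is a simplified model of our own: it ignores the park/unpark ramp at each transition and assumes APRF1 gets its entitlement plus whatever the other two LPARs leave unused, capped at its 70 logical cores.

```python
ENTITLEMENT = {"APRF1": 2000, "APRF2": 7000, "APRF3": 7000}  # % core-busy
APRF1_DEMAND = 7000    # 70 logical cores' worth of CPU burners
CPC_CAPACITY = 16000   # 160 shared physical IFL cores


def expected_core_busy(hot: bool) -> dict:
    """Expected steady-state core-busy for APRF1 and for the whole CPC."""
    # In a hot period APRF2 and APRF3 consume their full entitlements;
    # in a cold period they are idle.
    others = ENTITLEMENT["APRF2"] + ENTITLEMENT["APRF3"] if hot else 0
    available = CPC_CAPACITY - others
    # APRF1 is never held below its own entitlement and never exceeds demand.
    aprf1 = min(APRF1_DEMAND, max(ENTITLEMENT["APRF1"], available))
    return {"APRF1": aprf1, "CPC": aprf1 + others}
```

Under these assumptions a hot period gives APRF1 2000% and the CPC 16000%, while a cold period gives APRF1 7000% and the CPC 7000%.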

We ran this workload first in APRF1 with a CP that did not include the PTF. We ran a second time on a z/VM 7.3 that included the PTF, configured with CP SET SRM WARN OFF. The third run was done with the same CP but with CP SET SRM WARN ON. What we looked for were two things: first, whether the presence of the PTF with WARN OFF was benign, and second, whether using WARN ON converted harmful suspend time into harmless time spent in the PR/SM wait API.

For the first pair of runs, APRF1 was run in non-SMT mode. Thus for the APRF1 partition, CPU utilization and core utilization will be the same. This is because of how PR/SM does dispatch of a non-SMT logical processor belonging to a shared partition. While that logical processor is occupying a physical core and using one of its threads, no other logical processor is occupying the physical core or using the other thread.

For the second pair of runs, APRF1 was run in SMT-2 mode. When running in SMT-2 mode CP can use only 40 logical cores. We left APRF1 configured with 70 logical cores and CP used the first 40. We left the weight at 20 so the entitlement was still 2000% core-busy. Because of experimenter oversight, we did not configure enough CPU-burner guests in APRF1 to use 80 logical processors' worth of power. This meant during the cold periods APRF1's core utilization did not quite climb to 4000%. But the experiment still illustrated the effect of the PTF, so we did not bother to reconfigure the workload and repeat the run.

We collected MONWRITE data and reduced the D0 R2 records with a private tool that could report %Susp correctly when the PTF was present. We used a private tool because the Perfkit PTF was not yet available.

Results and Discussion

Non-SMT Findings

First, we compared the non-PTF run to the WARN OFF run. We found no consequential difference between the runs. This was what we expected. When WARN OFF is selected, the PTF is benign.

Next we compared the WARN ON run to the WARN OFF run. In this comparison we first looked for these things:

- During the cold periods, did the CPC stabilize at 7000% core-busy?

- During the hot periods, did the CPC run 16000% core-busy?

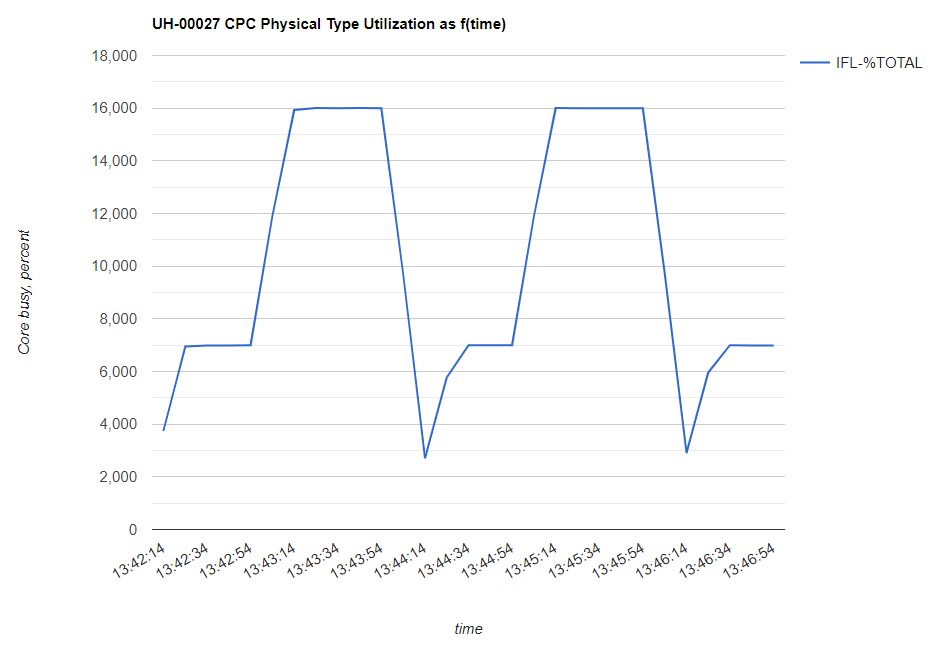

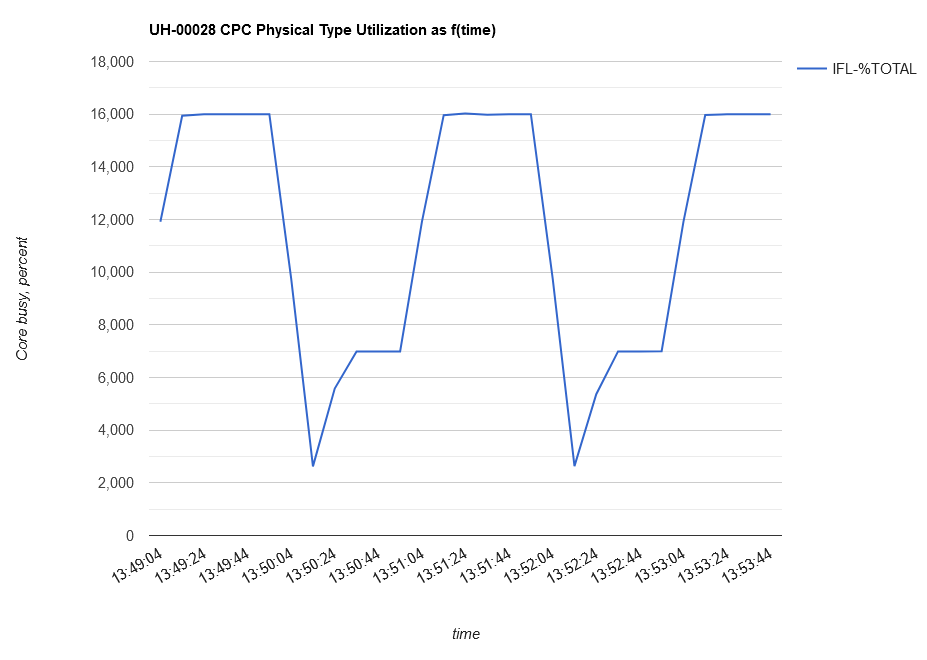

Chart 1 shows that the CPC's total core utilization varied between 2000% and 16000% according to the transitions between the hot and cold periods. The troughs have a sawtoothed appearance because when APRF2 and APRF3 go cold, it takes about 20 seconds for APRF1 to finish realizing there is plenty of unentitled power available and to decide to unpark all of its 50 VLs. When that realization completes the trough line stabilizes at 7000%, as it should.

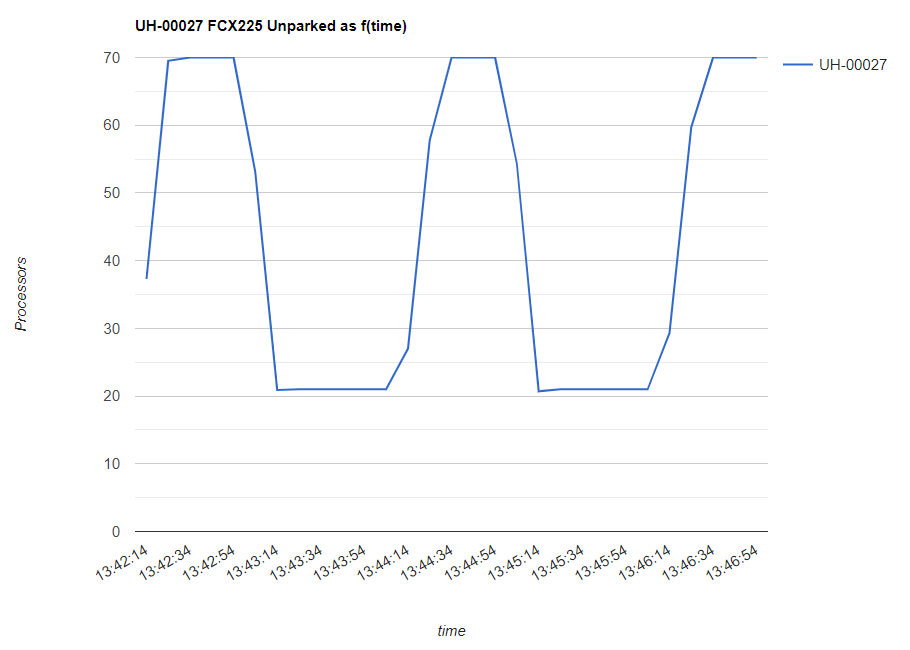

The beginning of the trough line does not drop all the way to 2000% because park/unpark occurs on two-second intervals but the monitor sample period in this data was 20 seconds. Chart 2 shows the unpark behavior of APRF1. By comparing unpark behavior to CPC utilization we can see that the APRF1 unpark curve does not climb immediately to 70. As APRF1 unparks more processors, its utilization can correspondingly increase.

Chart 1. CPC hot-cold transitions, run UH-00027 (WARN OFF) and UH-00028 (WARN ON).

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. CPC utilization runs from a high of 16000% to a low of 2000%.

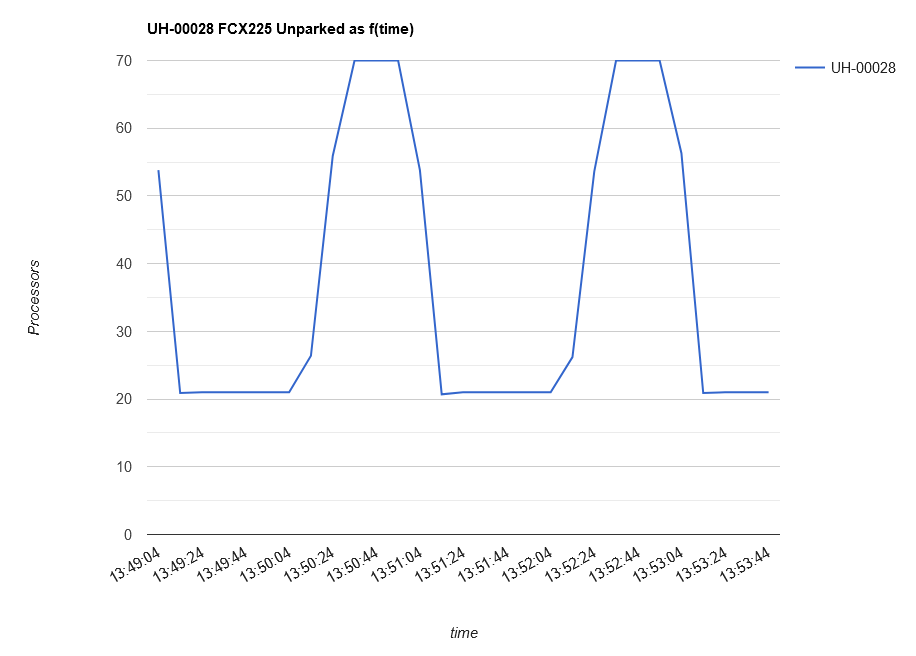

Chart 2. APRF1 unpark behavior, WARN OFF and WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. LPAR APRF1 varies from 21 processors unparked to 70 processors unparked.

Continuing our comparison, we next looked at this:

- During the cold periods, did APRF1 stabilize at 7000% busy, the maximum it could ever use?

- During the hot periods, did APRF1 stabilize at 2000% busy, its entitlement?

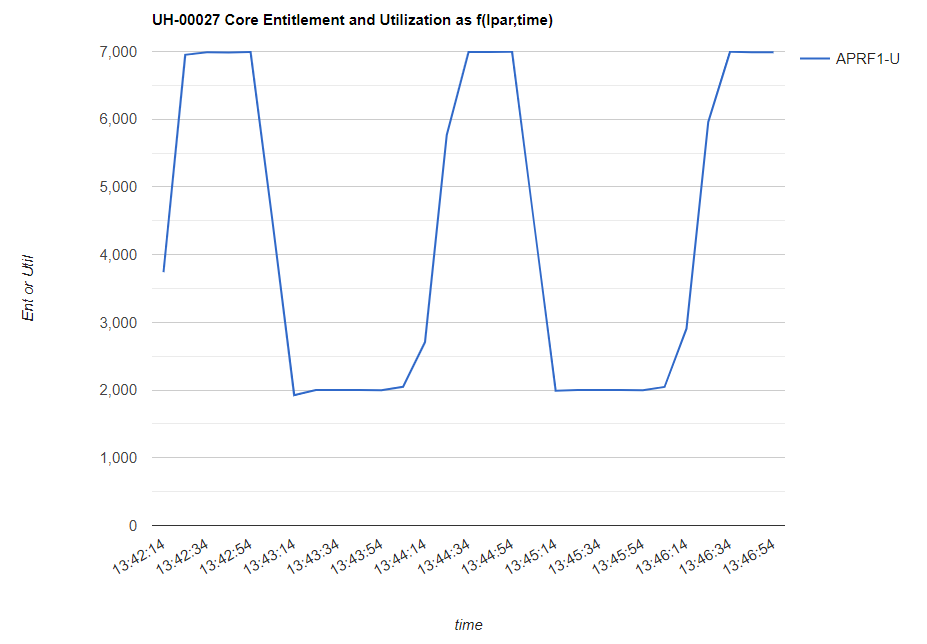

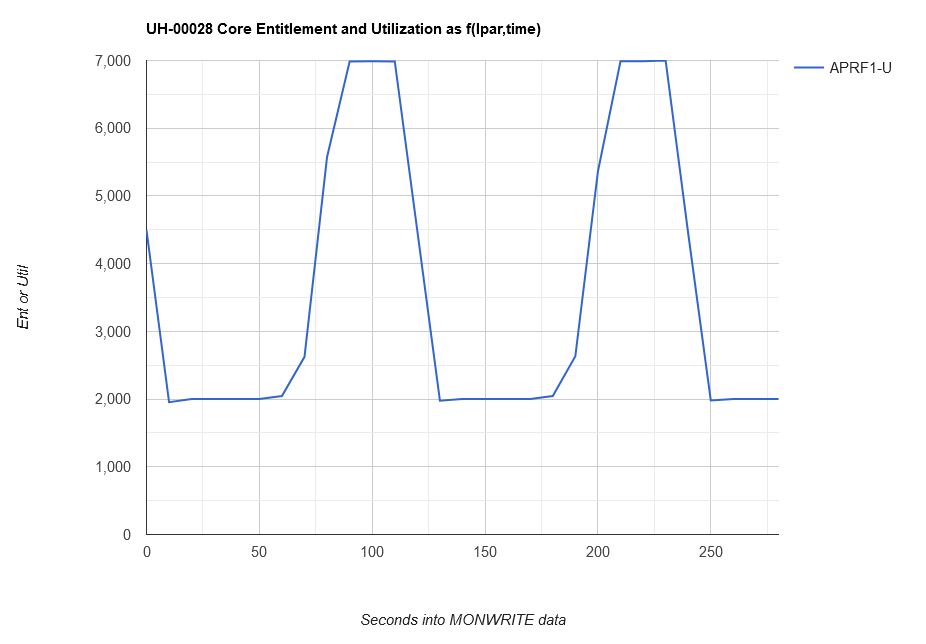

Chart 3 shows the core utilization for APRF1. We can see it stabilized at 2000% and 7000% according to the hot and cold periods. This was what it was supposed to do.

Chart 3. APRF1 core utilization, WARN OFF and WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. Core utilization for LPAR APRF1 runs from 2000% to 7000%.

Continuing our comparison, we next looked at this:

- With WARN OFF, what did a VL in APRF1 experience as regards suspend time?

- With WARN ON, did the suspend time convert to time spent in the PR/SM wait API?

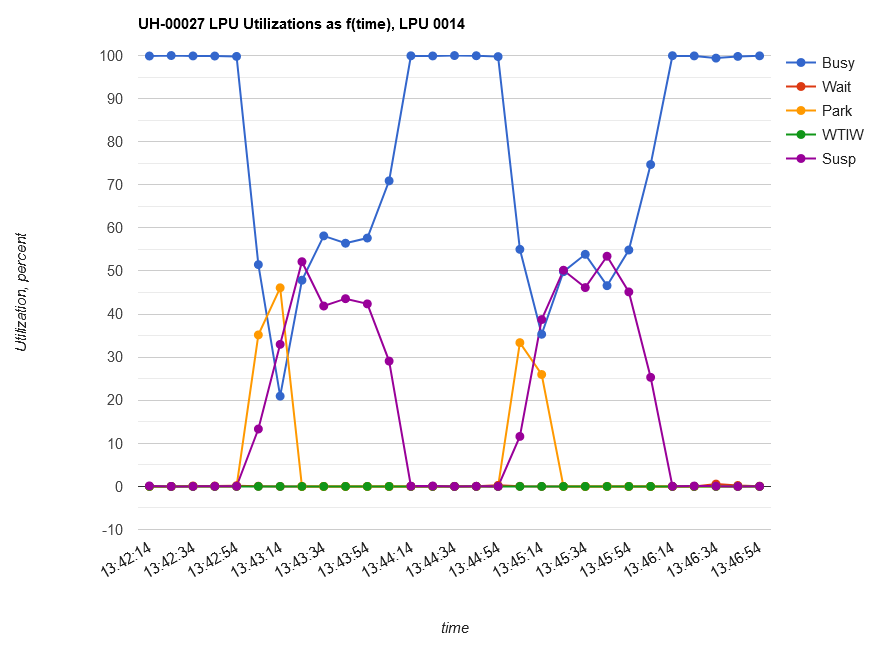

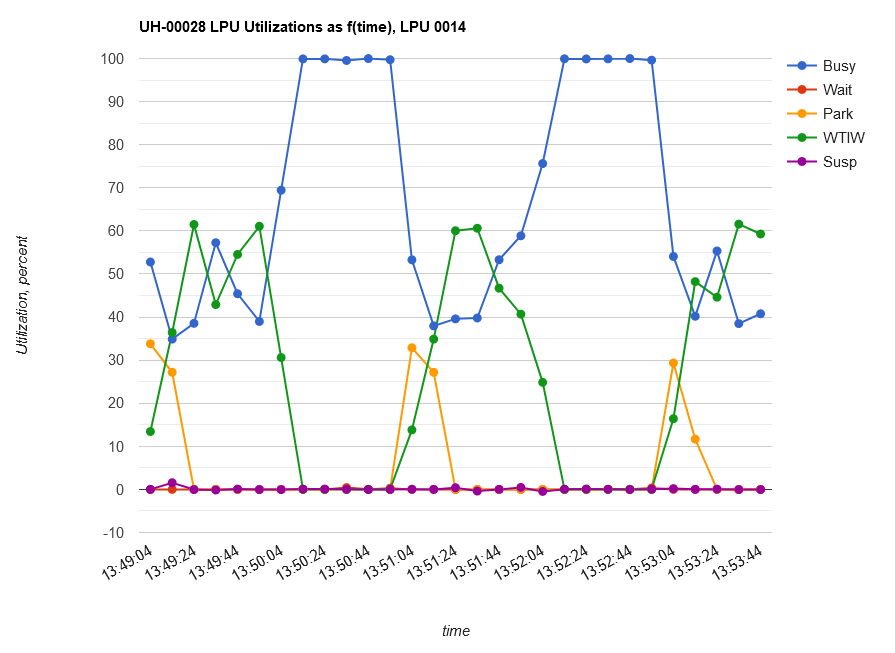

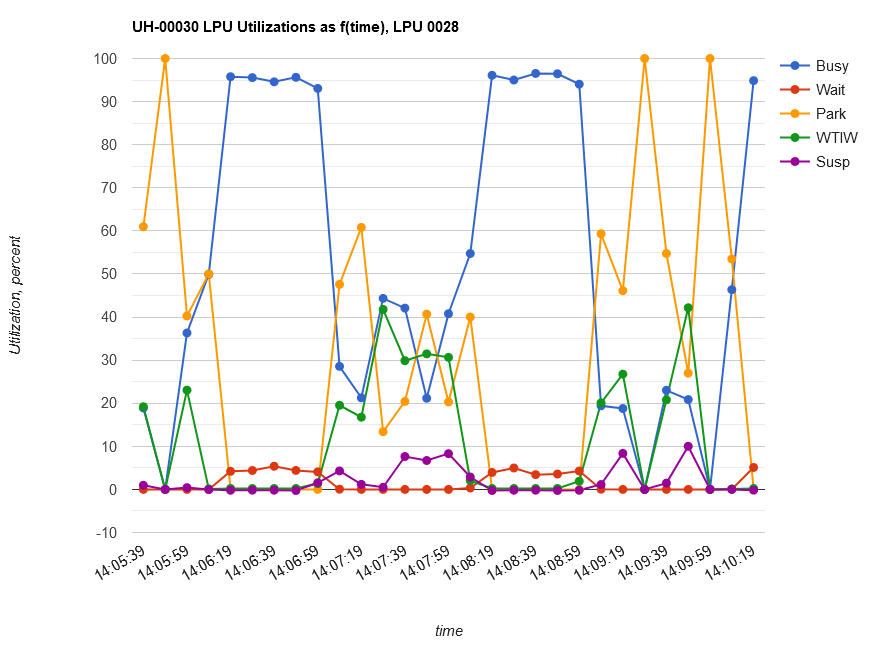

Chart 4 shows CPU utilization for the first VL in APRF1. We chose the first VL because owing to how CP park/unpark works, CP will tend to unpark its first VL even though power to run it might be scarce. This is the correct environment for seeing whether a VL experiences suspend time when unentitled power is not readily available.

The chart shows that during the cold period, the VL is unparked, completely powered, and completely busy. This is correct for a CPU-burner workload. When the hot period starts, z/VM first senses the sudden loss of unentitled power and for a short period runs with the first VL parked. As the situation stabilizes, the CP park/unpark algorithm unparks this first VL and CP tries to run work on it.

- For WARN OFF, the "Susp" line shows that PR/SM has other ideas, subjecting the VL to nonzero suspend time. This suspend time is time when the VL has work stranded upon it. The line labelled "WTIW", for "warning-track interrupt wait", is time the VL spends in the PR/SM wait API. Because WARN is OFF, WTIW is zero.

- For WARN ON, the chart shows suspend time replaced by time spent in the PR/SM wait API. Such time is guaranteed not to strand work.

Chart 4. APRF1 logical processor utilization, WARN OFF and WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. The PTF converted APRF1 CPU 0014's suspend time, harmful, to time spent in the PR/SM wait API, harmless.

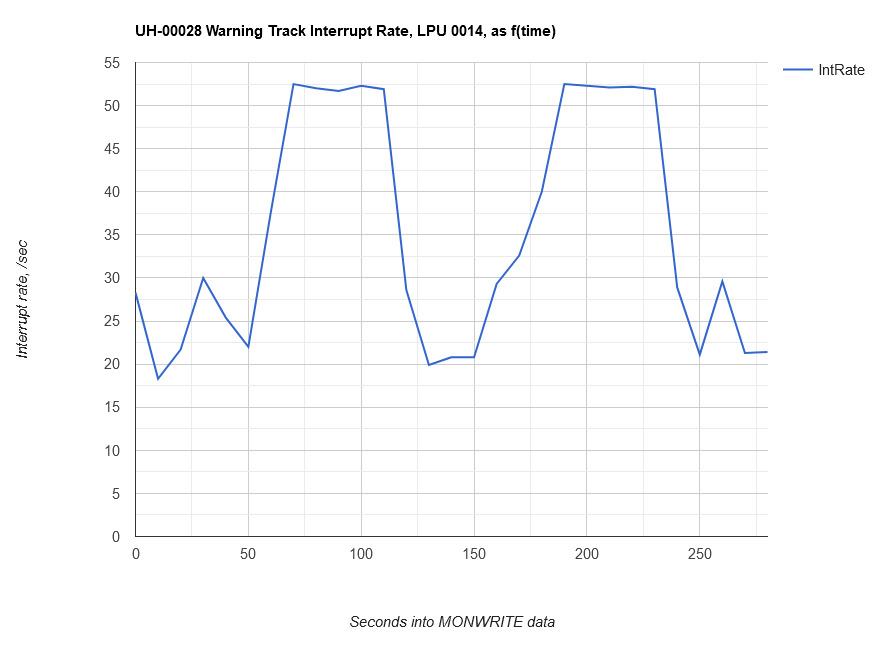

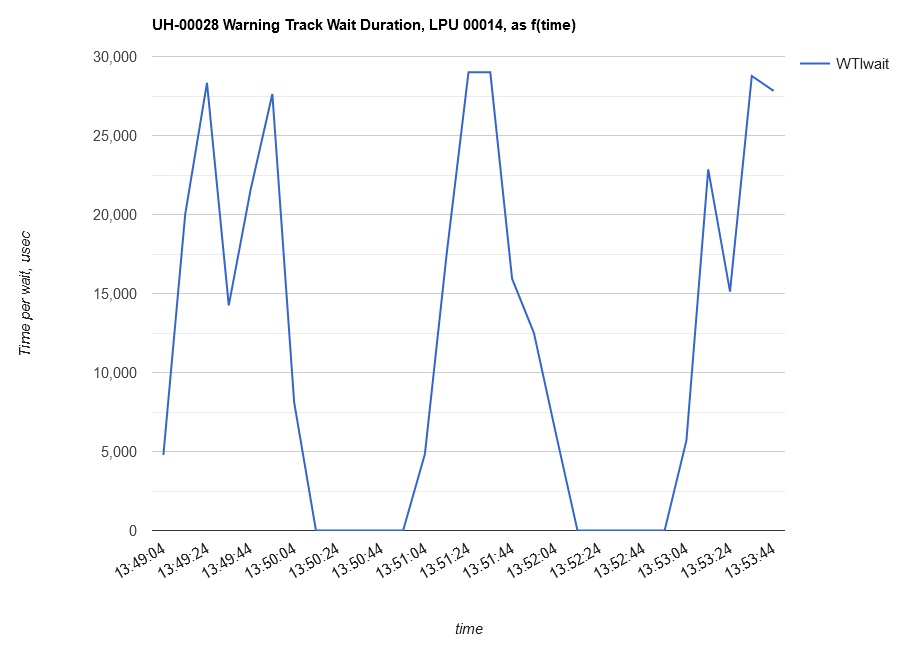

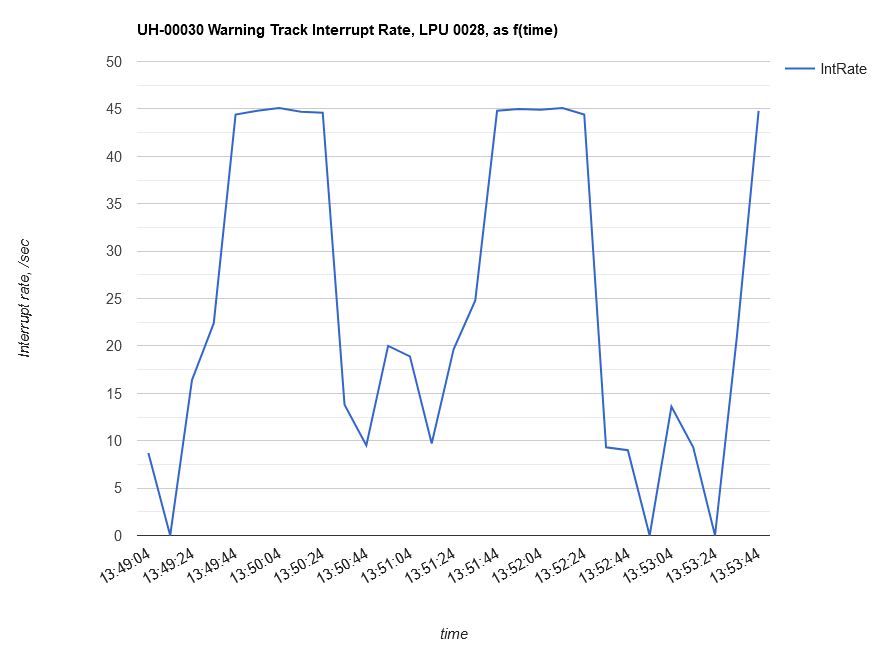

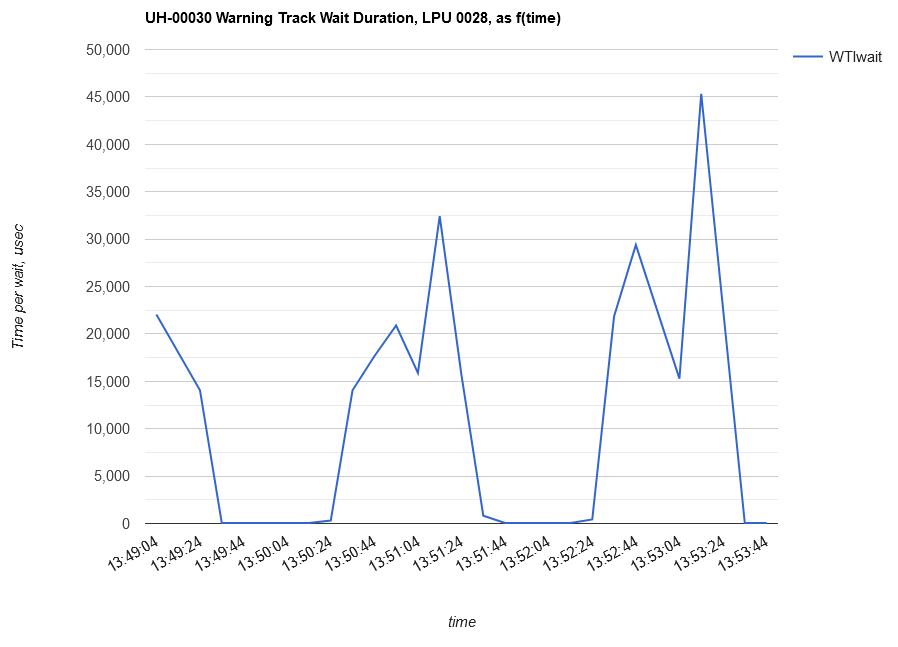

Chart 5 shows the rate at which PR/SM delivered warning interrupts to our first VL and the time spent in each associated PR/SM wait API call. We can see that during the cold periods the interrupts arrive at about 50/sec and the average time spent in a PR/SM wait API call is about 2 μsec. During the hot periods the interrupt rate decreases and the time spent in each PR/SM wait API call rises to about 30000 μsec. This makes sense. During the hot periods the VL has less access to CPU power, so the interrupt receipt rate would decrease and the time spent in the PR/SM wait API would rise.

Chart 5. APRF1 logical processor PR/SM wait traits, WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. During the cold period the VLs run freely and so the interrupt rate is higher and the duration of a call into the PR/SM wait API is lower. During the hot period, the VLs run sparingly and so the interrupt rate is lower and the duration of a call into the PR/SM wait API is higher.
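Because the monitor counters are cumulative, rates and per-call averages such as those in Chart 5 come from differences between successive samples. A minimal sketch of that reduction follows. The dictionary keys are hypothetical stand-ins, not actual monitor record field names, and the sample values are illustrative only.

```python
def wait_traits(prev: dict, curr: dict) -> tuple:
    """Return (interrupts per second, average microseconds per wait call).

    prev and curr are two samples of cumulative counters for one logical
    processor, each with keys:
      "t_sec"           sample timestamp in seconds
      "warn_interrupts" cumulative count of warning interrupts received
      "wait_api_usec"   cumulative microseconds spent in the PR/SM wait API
    """
    elapsed = curr["t_sec"] - prev["t_sec"]
    calls = curr["warn_interrupts"] - prev["warn_interrupts"]
    waited = curr["wait_api_usec"] - prev["wait_api_usec"]
    rate = calls / elapsed
    # Guard against an interval in which no interrupt arrived.
    avg_usec = waited / calls if calls else 0.0
    return rate, avg_usec


prev = {"t_sec": 0, "warn_interrupts": 0, "wait_api_usec": 0}
curr = {"t_sec": 20, "warn_interrupts": 1000, "wait_api_usec": 2000}
rate, avg_usec = wait_traits(prev, curr)  # 50.0 per second, 2.0 usec per call
```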

The last question to answer is whether with WARN ON there is CP CPU overhead due to the processing of the warning track interrupts. To check this we examined FCX225 SYSSUMLG T/V. In the WARN OFF and WARN ON cases T/V was found to be 1.00 throughout each run. While it is certainly true CP uses CPU time to field the warning-track interrupts and do the dispatcher calls necessary to prevent stranding, the overhead was not measurable to two decimal places.
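To illustrate what "not measurable to two decimal places" allows, recall that T/V is total CPU time divided by virtual (guest) CPU time. The sketch below uses made-up CPU-second figures, not data from our runs.

```python
def tv_ratio(guest_cpu_sec: float, cp_cpu_sec: float) -> float:
    """T/V: total CPU time (guest plus CP) over guest CPU time."""
    return (guest_cpu_sec + cp_cpu_sec) / guest_cpu_sec


# Hypothetical figures: CP overhead of 0.4% of guest time is invisible
# at two decimal places, while 1% would show up.
small_overhead = f"{tv_ratio(1000.0, 4.0):.2f}"    # "1.00"
larger_overhead = f"{tv_ratio(1000.0, 10.0):.2f}"  # "1.01"
```

So a T/V that reads 1.00 tells us the CP overhead was small relative to guest time, not that it was zero.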

SMT-2 Findings

The findings for the SMT-2 APRF1 experiment were similar to what we saw for the non-SMT experiment. Total CPC utilization rose and fell, APRF1 core utilization rose and fell, APRF1 parked and unparked cores, total guest busy rose and fell, and CPU overhead for handshaking was not measurable, all very similar to the non-SMT experiment.

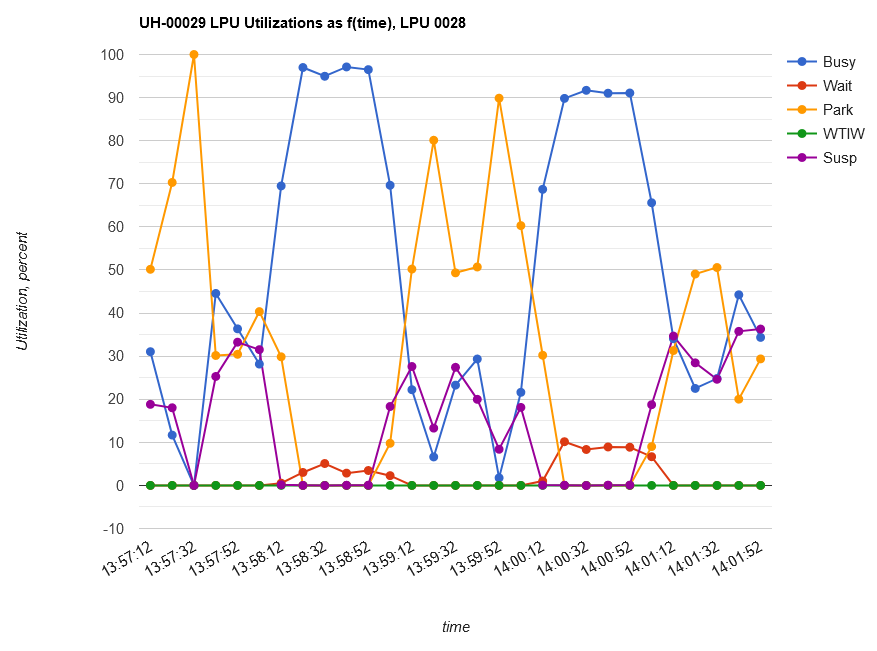

One difference we noted was in the suspend time conversion experience for CPU 0028, the first VL. Chart 6 illustrates this. With WARN ON some suspend time did not get converted to PR/SM wait API time.

Chart 6. APRF1 logical processor utilization, SMT-2, WARN OFF and WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. The PTF converted most of APRF1 CPU 0028's suspend time, harmful, to time spent in the PR/SM wait API, harmless.

We also looked at the interrupt rate and PR/SM wait API time for CPU 0028. Chart 7 illustrates this. The trend is the same as it was for non-SMT.

Chart 7. APRF1 logical processor PR/SM wait traits, SMT-2, WARN ON.

Notes: 3931-A01, 160 shared physical IFL cores, three shared LPARs. The trend is as it was for non-SMT.

Seeing the Effect in Client Workloads

There are metrics a client can examine to see whether the workload is getting benefit from the PTF. The first of these are transaction rate and transaction response time. Neither of these is conveyed in monitor data but clients will be keeping track of them for SLA compliance reasons if nothing else.

Conversion of suspend time to PR/SM wait API time is another such metric. When the time is so converted, a reason for workload blockage is eliminated.

Clients might be tempted to use the issuance rates of guest "panic APIs" such as guest use of Diag x'9C' or Diag x'44' as a metric for assessing the benefit of the PTF. Extreme caution should be used here. Just because work did not get stranded on a darkened logical processor does not mean the work is running. It could well be waiting in a CP dispatch queue, and dispatch contention is a real phenomenon. The trigger for a guest to use such APIs is that the guest has sensed that some virtual processor crucial to the progress of the guest's workload is not running. The reason why it is not running is irrelevant.

Other Thoughts

The PTF has potential to decrease CP's use of Diag x'9C'. The reasoning goes like this. When a guest issues Diag x'9C', if CP finds the target guest VCPU is in dispatch on a logical processor that PR/SM is not running at the moment, CP will issue Diag x'9C' to PR/SM to ask PR/SM to restart said logical processor. With the PTF in play, CP will rarely have a guest VCPU in dispatch on a logical processor PR/SM is not running. Thus there is a chance the PTF can decrease CP's use of Diag x'9C'.

Summary

The warning track PTF converts suspend time, which generally strands work, to PR/SM wait API time, which definitely does not. Whether the rescued work will in fact be run somewhere else is a property of the workload and of the configuration. Accordingly, the benefit clients will experience will vary.